Active Directory User Migration in Hybrid Exchange Environment Using ADMT – Part6

Security Translation – Local Profiles and things to consider for the end-user experience

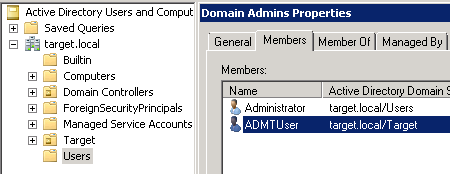

The final piece of any migration project is keeping the end-user experience as simple and smooth as possible. By now we have migrated the groups, migrated the users while keeping their mailboxes intact, and given them access to all their resources through SID history. To wrap up the migration, I would like to discuss a few things to consider from the end user's perspective so that their experience is a good one when they log in to the new domain.