Cloud Operations Model and Project Stream – Considerations

Background

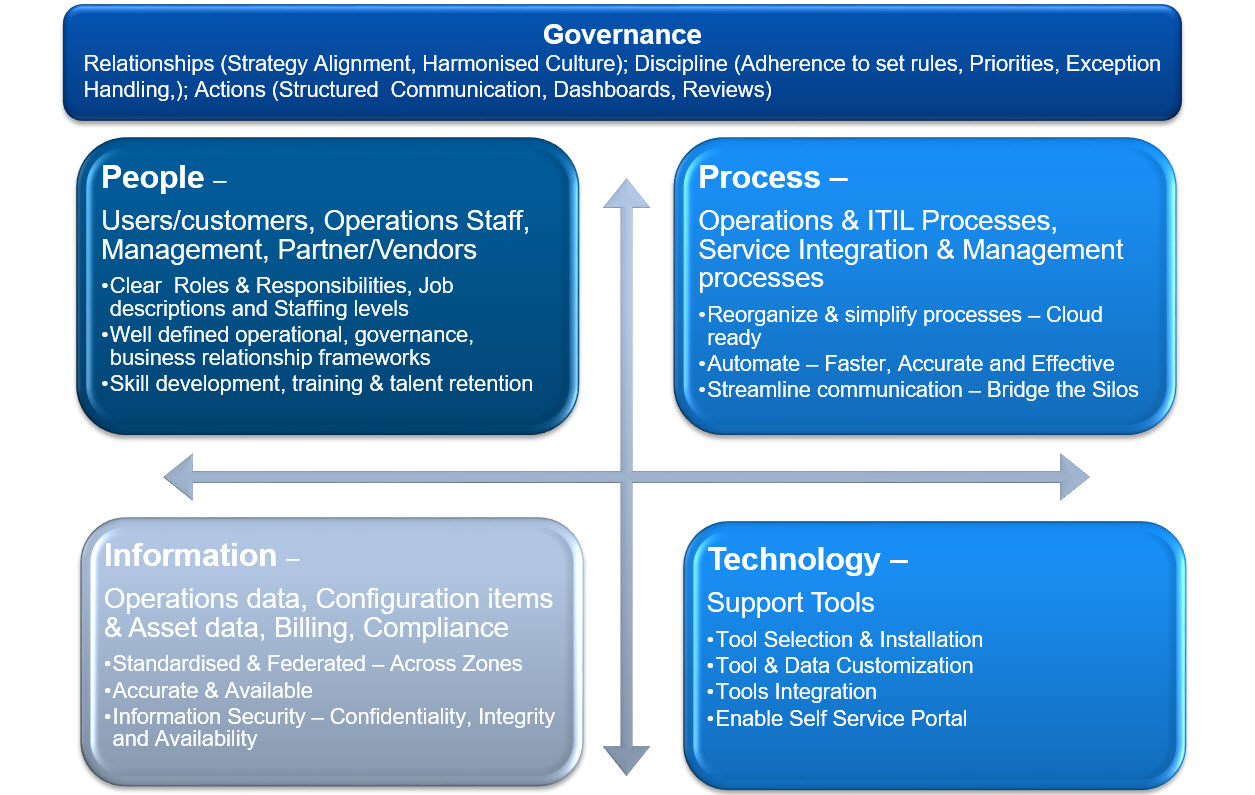

The cloud operations stream is responsible for designing and operating the cloud model for both the project and BAU activities. It is primarily concerned with people, process, tools and information. The model can change with the organisation’s requirements and type of business.

Aspects – Cloud Operations Model

Below is an example of the key aspects that we need to consider when defining a Cloud Operations Model.

Cloud Operations Stream – High Level Approach

Below is an example model for how to track a cloud program operationally.