Part 2 of a 4-part series on Teams-controlled robotics

Part 1: https://blog.kloud.com.au/2019/03/06/intelligent-man-to-machine-collaboration-with-microsoft-teams-robo-raptor/

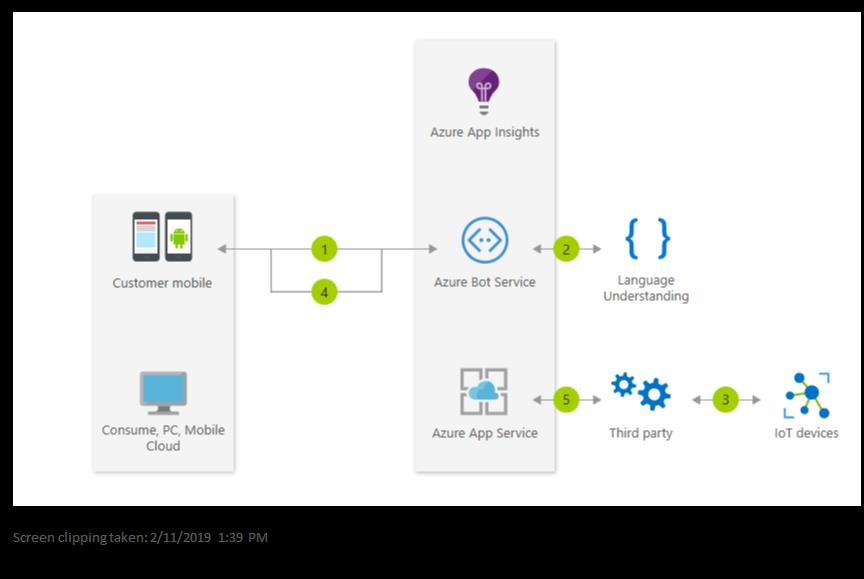

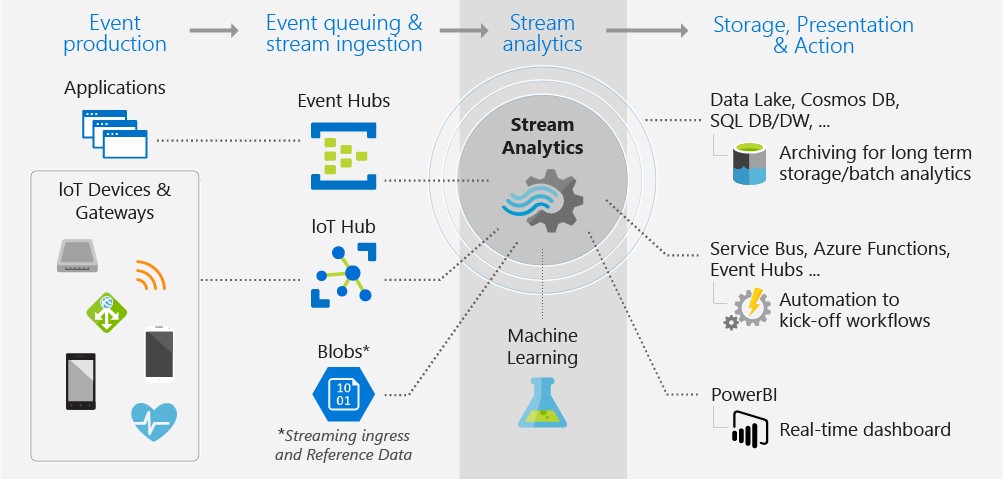

Microsoft Teams is an excellent collaboration tool for person-to-person communication workloads such as messaging, voice and video. Teams can also use Microsoft AI and cognitive services to collaborate with machines and devices. The Azure suite of services allows person-to-machine control, remote diagnostics and telemetry analytics of internet-connected devices.

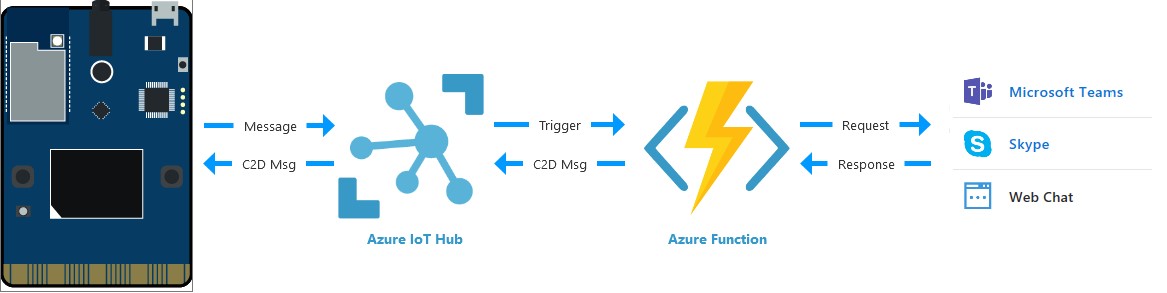

To demonstrate how Microsoft Teams can control remote robotics, I have created a fun project that lets Teams manage a RoboRaptor through natural language messages. The objective is to send control commands from Teams as natural language messages to a Microsoft AI BOT. The BOT then uses the Azure Language Understanding service (LUIS) to determine the command intent. The result is sent to the Internet of Things (IoT) controller card attached to the RoboRaptor for translation into machine commands. To connect the two, I have configured a Teams channel on the Azure BOT service. In Teams it looks like a contact with an application ID. When I type a message into the Teams client, it is sent over the channel to the Azure BOT service for processing. The RoboRaptor command messages are then sent from the BOT or from functions to the Azure IoT Hub service, which delivers them to the physical device.

The overview of the logical connectivity is below:

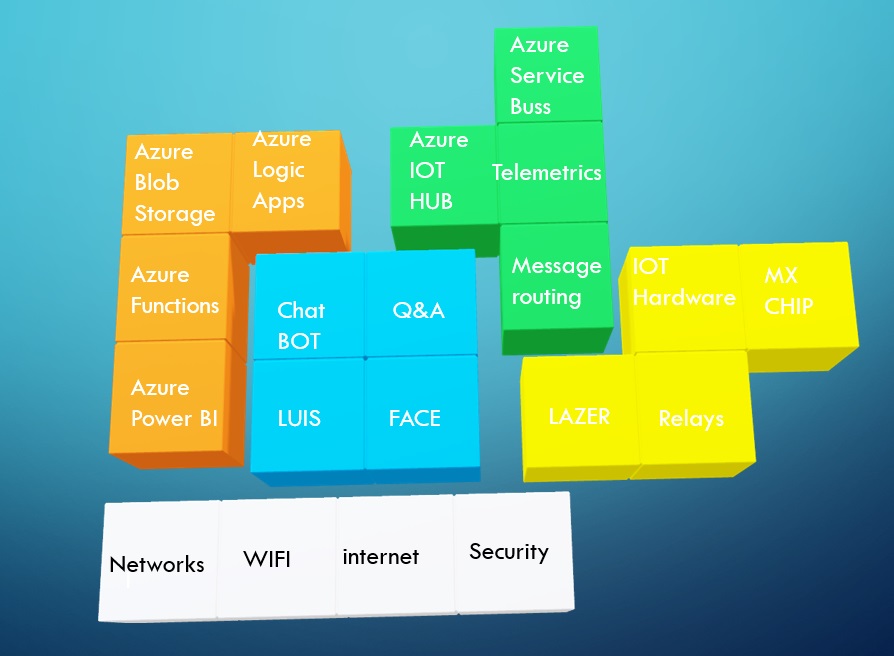

The Azure services and infrastructure used in this IoT environment are extensive and fall into five key areas:


- The services in blue belong to Azure AI and machine learning, which includes chat bots and cognitive services.

- The services in orange belong to Azure compute and analytics.

- The services in green belong to the Azure Internet of Things suite.

- The services in yellow are IoT hardware, switches and sensors.

- The services in white are network connectivity infrastructure.

The Azure Bot service plays an essential part in the artificial intelligence and personal assistant role by calling and controlling functions and cognitive services. As the developer, I write code that collects instant messages from web chats and Teams channels, extracts the key information and then determines the intent of the user.

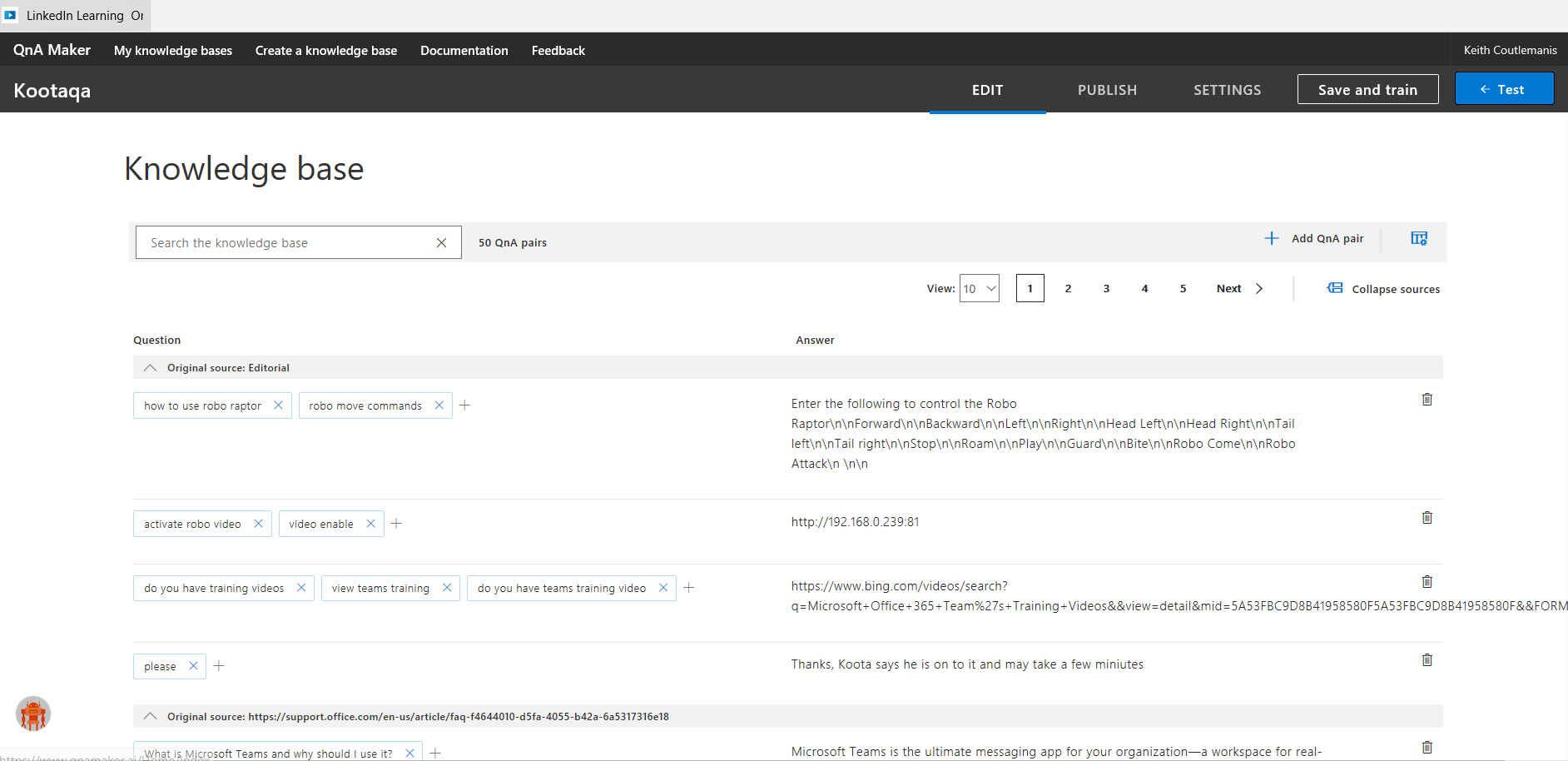

Question and Answer Service:

In this project I want to deliver a help menu. When users type a request for help with the commands they can use with the RoboRaptor, I want to return a Teams card listing all commands and their resulting actions. The Azure Q&A service is best suited to this task. It is an excellent repository for a single question and a single reply with no processing. You build a list of sample questions, and when a user message matches one, the BOT replies with the assigned text. It is best for frequently asked questions (FAQ) scenarios.
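The "sample questions map to one fixed reply" idea can be sketched in a few lines of Python. This is only an illustration of the lookup pattern, not the Q&A service itself: the real service ranks fuzzy matches, while this sketch uses an exact match after normalizing the text. The names `FAQ`, `HELP_TEXT`, `normalize` and `lookup_answer`, and the sample questions, are all made up for the example.

```python
import re

# Hypothetical FAQ data: several sample questions share one fixed answer.
HELP_TEXT = "Commands: forward, stop, dance"
FAQ = [
    {
        "questions": ["help", "what commands can I use", "what can the raptor do"],
        "answer": HELP_TEXT,
    },
]


def normalize(text: str) -> str:
    """Lowercase and drop punctuation so small typing differences still match."""
    return re.sub(r"[^a-z0-9 ]", "", text.lower()).strip()


def lookup_answer(message: str):
    """Return the stored answer for a matching sample question, else None."""
    wanted = normalize(message)
    for entry in FAQ:
        if any(normalize(question) == wanted for question in entry["questions"]):
            return entry["answer"]
    return None
```

A message such as "Help!" returns the help text unchanged, with no further processing, which is the behaviour that makes this pattern a good fit for a help menu.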

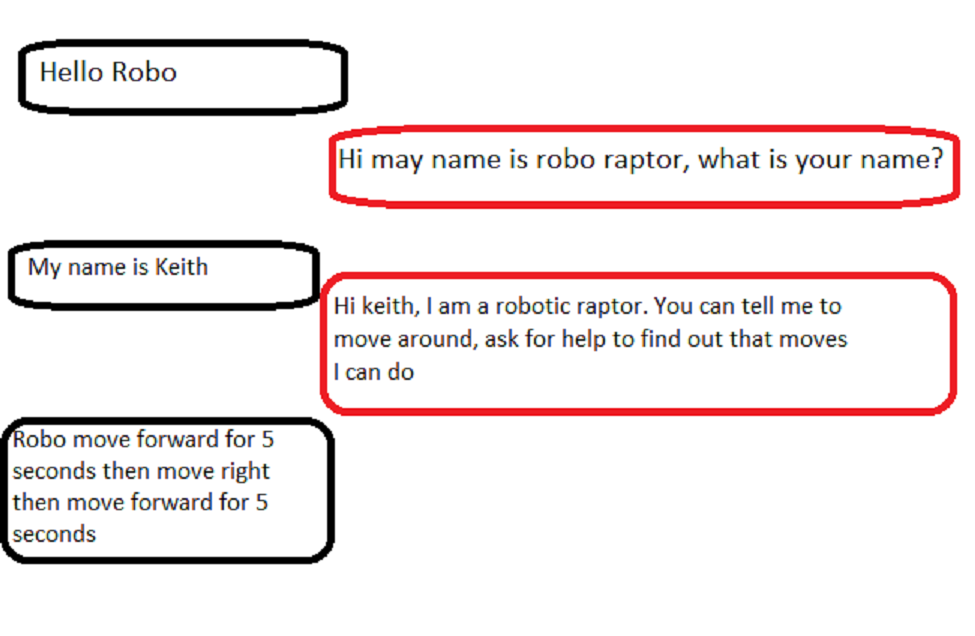

I can use the BOT to collect information from the user and store it in dialog tables for processing. For example, I can ask for a user’s name and store it for replies and future use.

Sending commands

I wanted to use natural language to forward commands to the RoboRaptor. Because Teams is a collaboration tool, members of the team who have permission for this BOT can also send commands to IoT robotic devices. Team members may phrase the same request in many ways. Sure, I could assign a single word to an action, like forward. However, if I want to string commands together, I need to use the Azure LUIS service and BOT arrays to build an action table. For example, I can build a BOT that replicates talking to a human through the Teams chat window.

As you can see, the LUIS service can generate a more natural conversation with robotics.

How I use the LUIS service:

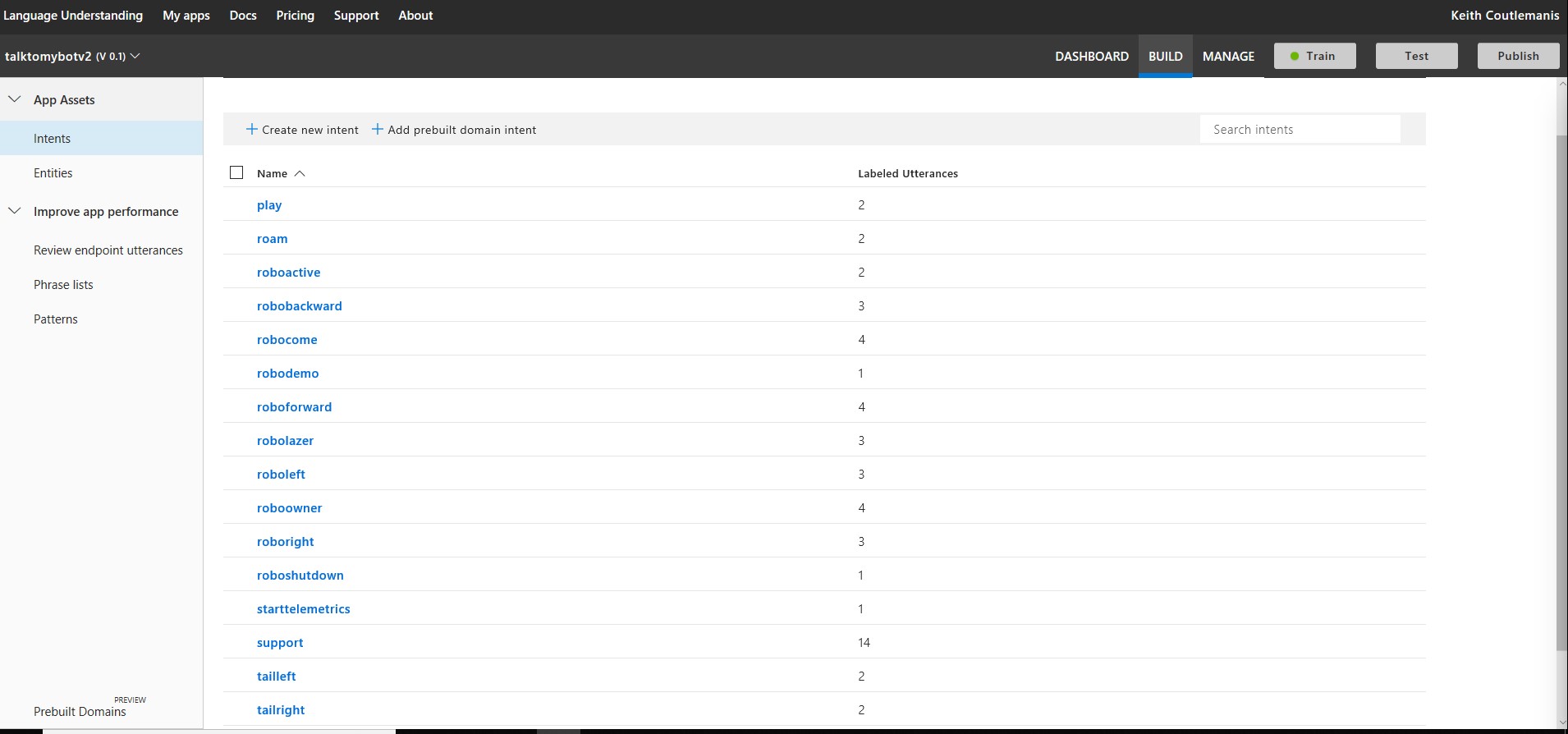

The LUIS service is a repository of intents and key phrases. The diagram below shows an entry I have created to determine the intent of a user request and check its confidence level.

I have a large list of intents that equate to RoboRaptor command requests, such as move forward and stop. The list also includes intents for other projects, like collecting names and phone numbers, and it can contain all my home automation commands too.

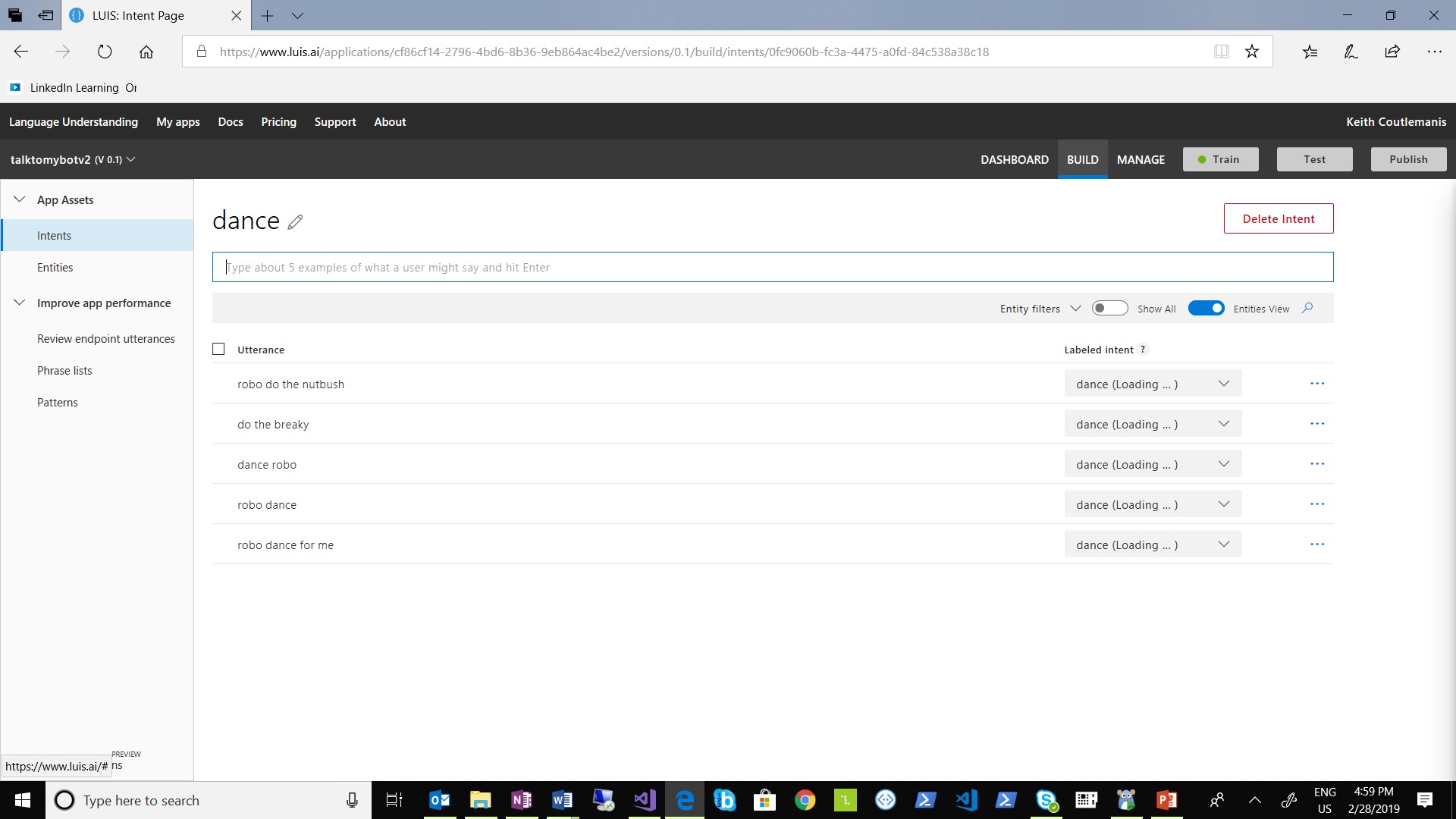

In the example below, I have an intent for asking the RoboRaptor to dance. Under the dance intent I have several ways of phrasing that request.

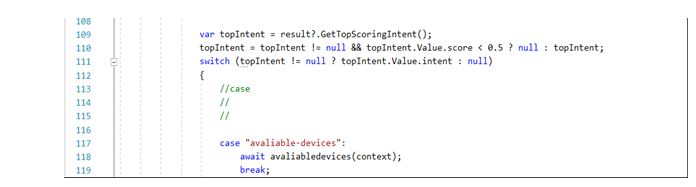

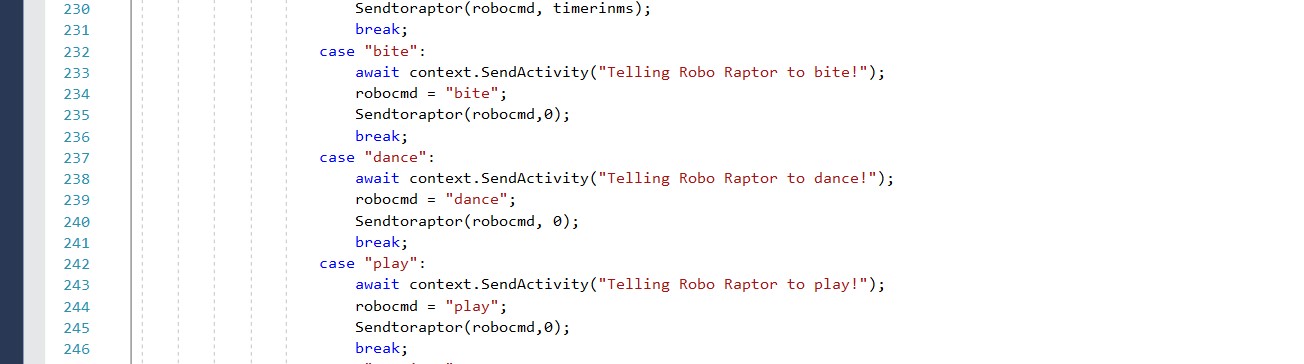

The LUIS service returns to the BOT the intent of dance and a score of how confident it is of a match. The following BOT code evaluates the returned intent and score. If the confidence score is above 0.5, the BOT initiates a process based on a case match. I created a basic Azure BOT service in Visual Studio 2017. You can start with the Hello World template and then build dialogs and middleware to other Azure services such as Q&A Maker and the LUIS service.

In our case the intent is dance, so the Sendtoraptor process is called with the command string dance.

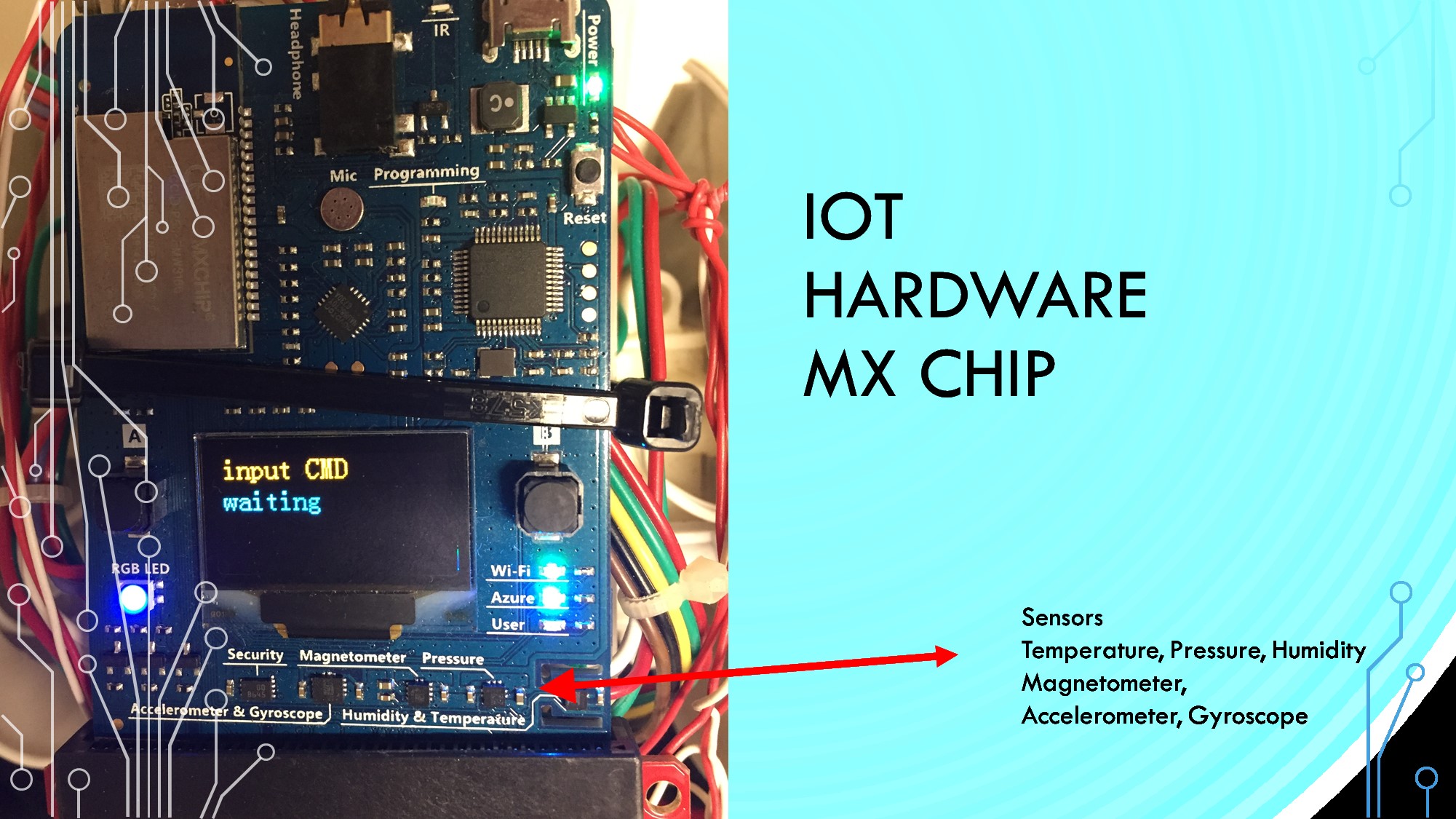
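The threshold check and case match described above can be sketched as follows. This is an illustrative Python outline, not the project's own BOT code. The 0.5 threshold and the dance, forward and stop commands come from the text; the intent names, the `INTENT_TO_COMMAND` table, the reply strings and the `sent_commands` list (a stand-in for the real device channel) are assumptions.

```python
CONFIDENCE_THRESHOLD = 0.5  # threshold stated in the article

# Hypothetical mapping from intent names to RoboRaptor command strings.
INTENT_TO_COMMAND = {
    "dance": "dance",
    "forward": "forward",
    "stop": "stop",
}

sent_commands = []  # stand-in for the real channel to the device


def send_to_raptor(command: str) -> None:
    """Placeholder for the real send step; here it only records the command."""
    sent_commands.append(command)


def handle_intent(intent: str, score: float) -> str:
    """Act on an intent only when the score is above the threshold."""
    if score <= CONFIDENCE_THRESHOLD:
        return "Sorry, I did not understand that."
    command = INTENT_TO_COMMAND.get(intent)
    if command is None:
        return "I understood you, but I have no action for that."
    send_to_raptor(command)
    return "Sending '" + command + "' to the RoboRaptor."
```

Using a lookup table instead of a long chain of case statements keeps each new intent to a single line, which matters once the intent list grows to cover other projects.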

A series of direct method calls to the IoT device is then invoked. The method name forward and a message payload of “dance fwd” are sent to the IoT Hub service, addressed to the IoT device named “IOT3166keith”, which is my registered MXCHIP. A series of other moves is then sent to give the impression that the RoboRaptor is dancing.


In the above code the method invocation API attributes are configured. The call new CloudToDeviceMethod("forward") sets up a direct cloud-to-device method call with the method name forward, and SetPayloadJson configures the JSON payload message "dance fwd".

The call await serviceClient.InvokeDeviceMethodAsync("IOT3166keith", methodInvocation); initiates the asynchronous transmission of the message to the IoT Hub service, addressed to the device IOT3166keith.

The IoT Hub then sends the message to the physical device. The onboard OLED display shows commands as they are received.
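To make the "series of moves" idea concrete, here is a small Python sketch of how a dance routine could be expressed as an ordered list of direct method calls. It is not the project's code and it does not talk to Azure. The device name and the first step (method forward, payload "dance fwd") come from the text; the other steps, the `DirectMethod` class and the `invoke` callback (a stand-in for the real SDK call) are hypothetical.

```python
import json
from dataclasses import dataclass
from typing import Callable, List

DEVICE_ID = "IOT3166keith"  # device name from the article


@dataclass
class DirectMethod:
    """One direct method call: a method name plus a JSON-encoded payload."""
    method_name: str
    payload_json: str


def build_dance_routine() -> List[DirectMethod]:
    # Only the first step is taken from the article; the rest are invented
    # here to illustrate "a series of other moves".
    steps = [
        ("forward", "dance fwd"),
        ("left", "dance left"),
        ("right", "dance right"),
        ("stop", "dance stop"),
    ]
    return [DirectMethod(name, json.dumps(message)) for name, message in steps]


def run_routine(invoke: Callable[[str, DirectMethod], None],
                routine: List[DirectMethod]) -> int:
    """Send each step in order through the supplied invoke function."""
    for step in routine:
        invoke(DEVICE_ID, step)
    return len(routine)
```

Passing the sender in as a function means the routine can be dry-run with a simple recorder, for example `run_routine(lambda device, method: print(device, method), build_dance_routine())`, before it is wired to a real IoT Hub client.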

Telemetry

The MXCHIP has many environment sensors built in. I selected temperature and humidity as the data to send to Power BI for analytics. Every few seconds this telemetry is sent to the IoT Hub service.

In the IoT Hub service I have configured message routing so that telemetry messages reach the Stream Analytics service. I then parse the JSON files and save the data in Azure Blob storage, where Power BI can generate reports. More on this in the next blog.

The next blog will explore the IoT hardware and the IoT Hub service in more detail.
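As a rough idea of what the parsing step involves, the Python sketch below reads telemetry records as JSON text, keeps the temperature and humidity values and computes simple averages. The field names `temperature` and `humidity`, the one-record-per-line layout and both function names are assumptions for illustration; the actual message format is not shown in this post.

```python
import json
from statistics import mean


def parse_telemetry(lines):
    """Parse JSON records, skipping blank, malformed or incomplete lines."""
    readings = []
    for line in lines:
        line = line.strip()
        if not line:
            continue
        try:
            record = json.loads(line)
            readings.append({
                "temperature": float(record["temperature"]),
                "humidity": float(record["humidity"]),
            })
        except (ValueError, KeyError, TypeError):
            # Not valid JSON, or a field is missing or not numeric.
            continue
    return readings


def summarize(readings):
    """Return the count and averages, or None when there is nothing to report."""
    if not readings:
        return None
    return {
        "count": len(readings),
        "avg_temperature": mean(r["temperature"] for r in readings),
        "avg_humidity": mean(r["humidity"] for r in readings),
    }
```

Skipping bad records rather than failing keeps one corrupt message from blocking the whole batch, which seems a sensible default for sensor data that arrives every few seconds.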

keith