Azure VNets and 172.16.0.0/12

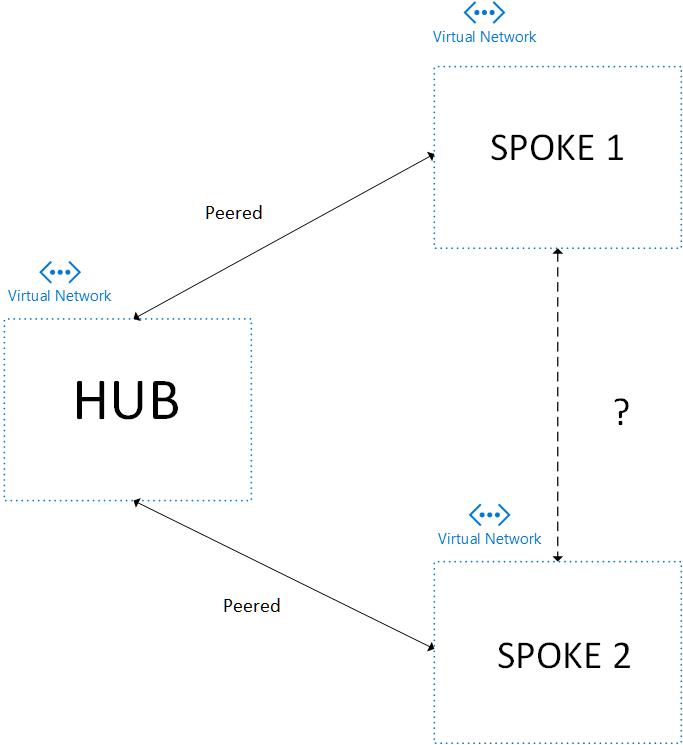

I’ve recently been digging into the weeds of an Azure VNet hub-and-spoke design for a customer, and it has prompted me to revisit a topic from a while back.

For some quick context: every VNet in Azure has a System RouteTable that holds the basic routing information for that VNet’s network traffic. It covers flows within the VNet as well as traffic inbound to and outbound from it. The following table outlines what the default System RouteTable routes consist of (table information source):