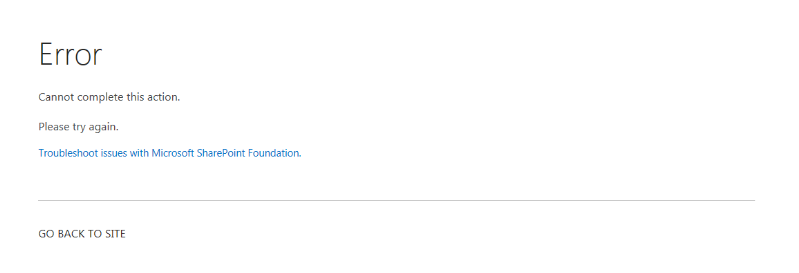

"Cannot complete this action" error in SharePoint team site

I was recently assigned a long-standing issue at a well-known organisation running SharePoint 2013 on-premises. Some of its users had been getting a strange "Cannot complete this action" error screen whenever they deleted a document or modified a list view in their team sites.

After several days of extensive testing, I came up with the following analysis summary:

- The issue occurs in some subsites of a team site within a team site collection (SharePoint 2013 on-premises)

- The error occurs consistently for team site users (including the site collection admin), yet the changes are still actioned/saved

- Users have to click Back to return to the previous screen and get back to the site

- The error does not occur in some other team sites

- There is no error correlation ID, and nothing suspicious shows up in the ULS log

Luckily, I was able to find an answer from Microsoft.…