Remote Access to Local ASP.NET Core Applications from Mobile Devices

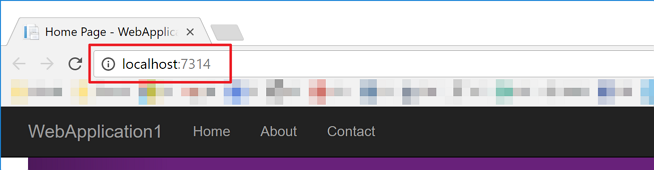

One of the most popular tools for ASP.NET and ASP.NET Core application development is IIS Express. We can’t deny it. Unless we have specific requirements, IIS Express is the de facto web server for debugging on developers’ local machines. With IIS Express, we can easily access our local web applications while debugging.

There are, however, always cases where we need to access our locally running website from a browser on another device, such as a mobile device.