There are a few ways to access the camera on mobile devices during application development. In our previous post, we used the getUserMedia API for camera access. Unfortunately, as of this writing, not all browsers support this API, so we should provide a fallback approach. The HTML5 Media Capture API, on the other hand, is supported by almost all modern browsers and is easy to use. In this post, we’re going to use Vue.js, TypeScript and ASP.NET Core to build an SPA that performs OCR using Azure Cognitive Services and the HTML Media Capture API.

The sample code used in this post can be found here.

Vision API – Azure Cognitive Services

Cognitive Services is a set of intelligence services provided by Azure and built on machine learning. Vision API is the part of Cognitive Services that analyses pictures and videos. It can analyse, for example, the expressions and ages of people in pictures or videos. It can also extract text from them, which is the OCR feature. It was previously known as Project Oxford and was renamed to Cognitive Services when it entered public preview. This is why the NuGet package still carries the ProjectOxford name.

HTML Media Capture API

Media Capture API is one of the HTML5 features. It enables us to access the camera or microphone on our mobile devices. According to the W3C document, this is just an extension of the existing input tag with the type="file" attribute. Hence, by adding both the accept="image/*" and capture="camera" attributes to the input tag, we can use the Media Capture API straight away on our mobile devices.

Of course, this doesn’t disrupt the existing user experience on desktop browsers. In fact, this link confirms how the Media Capture API works on both desktop and mobile browsers.
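The same attributes can also be applied from script. The following is only a minimal sketch: `enableCameraCapture` and the `AttributeTarget` interface are hypothetical names introduced for illustration, and the attribute values are the ones mentioned above.

```typescript
// Minimal shape of the element we need; a real HTMLInputElement satisfies it.
interface AttributeTarget {
  setAttribute(name: string, value: string): void;
}

// Hypothetical helper: turns an input element into a camera-capture file input.
// The default capture value "camera" follows this post; later revisions of the
// Media Capture specification define "user" and "environment" instead.
function enableCameraCapture(input: AttributeTarget, capture: string = "camera"): void {
  input.setAttribute("type", "file");
  input.setAttribute("accept", "image/*");
  input.setAttribute("capture", capture);
}
```

In a browser, this would be called with an actual element, for example `enableCameraCapture(document.createElement("input"))`. On desktop browsers the element should simply behave as an ordinary file picker.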

ASP.NET Core Web API

The image file passed from the front-end side is handled by the IFormFile interface in ASP.NET Core.

Well, that’s enough theory. Let’s build it!

Prerequisites

- ASP.NET Core application from the previous post

- Computer, tablet or smartphone with a camera

Implementing Vue Component – Ocr.vue

First of all, we need a Vue component for OCR. This component simply consists of an input element, a button element, an img element, and a textarea element.

If we put the ref attribute on each element above, the Vue component can access it directly. The button element binds its click event to the event handler, getText. Ocr.ts contains the actual logic to pass image data to the back-end server.

As in the previous post, in order to use dependency injection (DI), we create a Symbols instance and use it. axios is injected from the top-most component, App.vue, which we’ll touch on later in this post.

We also create a FormData instance to pass the image file extracted from the input element through an AJAX request. This image data will then be analysed by Azure Cognitive Services.
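As a rough sketch of this step, the image can be wrapped in a FormData instance and posted through an injected HTTP client. The endpoint `/api/ocr`, the field name `file`, and the names `HttpPoster`, `buildOcrFormData` and `sendImage` are assumptions made for illustration and are not taken from the sample code.

```typescript
// Hypothetical endpoint and form field name; neither comes from the sample code.
const OCR_ENDPOINT = "/api/ocr";
const FILE_FIELD = "file";

// The subset of an HTTP client (such as axios) that this sketch relies on.
interface HttpPoster {
  post(url: string, body: FormData): Promise<{ data: unknown }>;
}

// Wraps the selected image in a FormData instance under a single field.
function buildOcrFormData(image: Blob, fileName: string): FormData {
  const form = new FormData();
  form.append(FILE_FIELD, image, fileName);
  return form;
}

// Posts the image to the back-end and resolves with the response payload.
function sendImage(http: HttpPoster, image: Blob, fileName: string): Promise<unknown> {
  return http
    .post(OCR_ENDPOINT, buildOcrFormData(image, fileName))
    .then(response => response.data);
}
```

In the component, the image would typically be the first entry of the input element's `files` list. Passing the HTTP client in as a parameter keeps the function easy to test with a fake client.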

Updating Vue Component – Hello.vue

Ocr.vue is now combined with Hello.vue as a child component.

Dependency Injection – App.vue

The axios instance is provided at the top-most component, App.vue, and consumed by its child components. Let’s see how it’s implemented.

We use the symbol instance as a key and provide it as a dependency.
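The idea of keying a dependency by a symbol can be illustrated without Vue. The sketch below is not the actual App.vue code: `DependencyRegistry`, `HttpClient` and the `Axios` member of `Symbols` are hypothetical names, and the registry only stands in for the provide and inject mechanism that the framework supplies.

```typescript
// Symbol keys avoid the name collisions that plain string keys can cause.
const Symbols = {
  Axios: Symbol("Axios"),
};

// Hypothetical shape of the dependency being shared.
interface HttpClient {
  post(url: string, body: unknown): Promise<unknown>;
}

// Stand-in for the framework: the parent provides, the children inject.
class DependencyRegistry {
  private readonly deps = new Map<symbol, unknown>();

  provide<T>(key: symbol, value: T): void {
    this.deps.set(key, value);
  }

  inject<T>(key: symbol): T {
    if (!this.deps.has(key)) {
      throw new Error("No dependency provided for " + String(key));
    }
    return this.deps.get(key) as T;
  }
}
```

A parent would call `registry.provide(Symbols.Axios, client)` once, and any child holding the same symbol could then call `registry.inject<HttpClient>(Symbols.Axios)`. Because every symbol is unique, a different `Symbol("Axios")` created elsewhere would not resolve to the same dependency.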

Everything on the front-end side is done. Let’s move on to the back-end side.

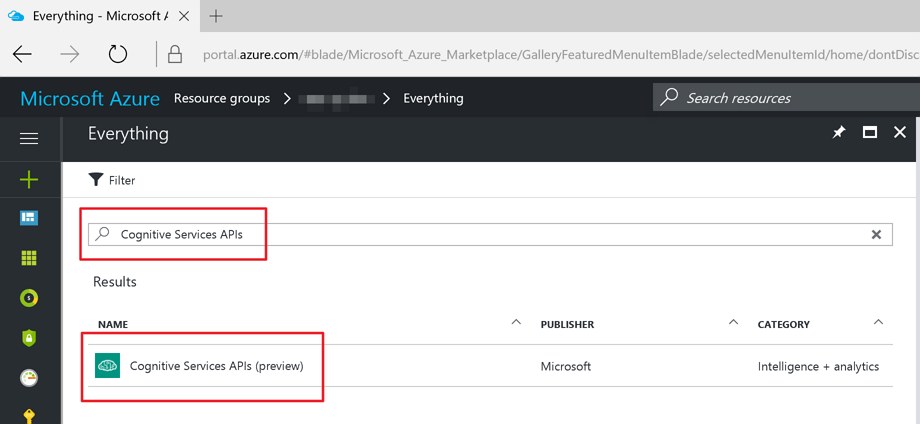

Subscribing to Azure Cognitive Services

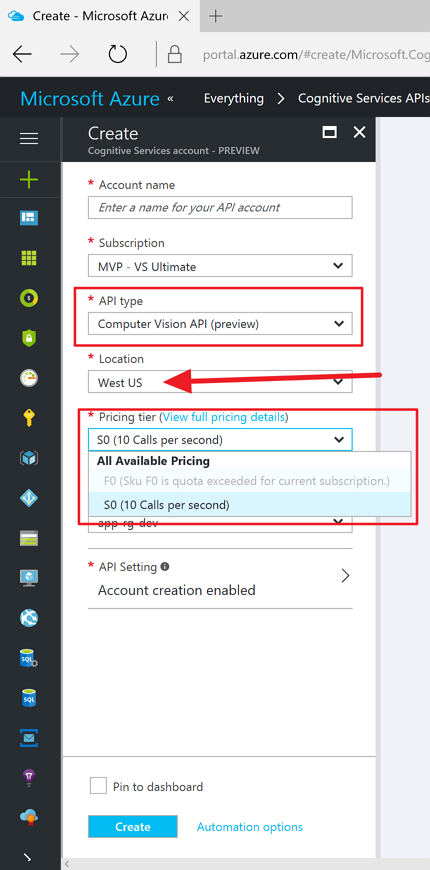

We first need to subscribe to Azure Cognitive Services. This can be done through the Azure Portal.

At the time of this writing, Azure Cognitive Services are in public preview, so we can only choose the West US region. Choose Computer Vision API (preview) for API Type and F0 (free) for Pricing Tier. Note that we can only have ONE F0 tier per API type in ONE subscription.

It takes about 10 minutes to activate the subscription key. In the meantime, let’s develop the actual logic.

Developing Web API – ProjectOxford Vision API

This is relatively easy. We can just use the HttpClient class to call the REST API directly. Alternatively, the ProjectOxford – Vision API NuGet package makes calling the Vision API even easier. Here’s the sample code.

The IFormFile instance receives the data passed from the front-end through the FormData instance. If, for some reason, the IFormFile instance is null, we also need to check the same data in Request.Form.Files. We then supply the API key to access the Vision API. The VisionServiceClient returns the image analysis result, which is included in the JSON response.
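On the front-end, the analysis result in the JSON response still has to be turned into plain text for the textarea. The sketch below assumes the result is nested as regions, then lines, then words, each word carrying a `text` field. That shape, the interface names and `extractText` are assumptions for illustration and should be checked against the actual response.

```typescript
// Assumed shape of the OCR result: regions contain lines, lines contain words.
interface OcrWord {
  text: string;
}

interface OcrLine {
  words: OcrWord[];
}

interface OcrRegion {
  lines: OcrLine[];
}

interface OcrResult {
  regions: OcrRegion[];
}

// Joins words with spaces, lines with newlines, and regions with blank lines.
function extractText(result: OcrResult): string {
  return result.regions
    .map(region =>
      region.lines
        .map(line => line.words.map(word => word.text).join(" "))
        .join("\n")
    )
    .join("\n\n");
}
```

The returned string can then be assigned to the textarea's value. A result with no regions yields an empty string.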

We’ve completed development on both the front-end and back-end sides. Let’s run this app and access it from our mobile device. The following video clip shows how an iPhone takes a photo, sends it to the app, and gets the result.

So far, we’ve briefly looked at Azure Cognitive Services – Vision API for OCR implementation. In practice, the analysis quality varies depending on the original source images. In the video clip above, the result is very accurate. However, if there are outlines around the text, or the contrast between the text and its background is very low, the quality drops significantly. In addition, CAPTCHA-like images don’t return satisfactory results. Once Cognitive Services has learnt from a substantial number of sources, the quality should improve. It’s just a matter of time.