I was recently tasked with deploying two Fortinet FortiGate firewalls in Azure in a highly available active/active model. I quickly discovered that there are currently only two deployment types available in the Azure Marketplace: a single VM deployment and a high availability deployment (which is an active/passive model and wasn’t what I was after).

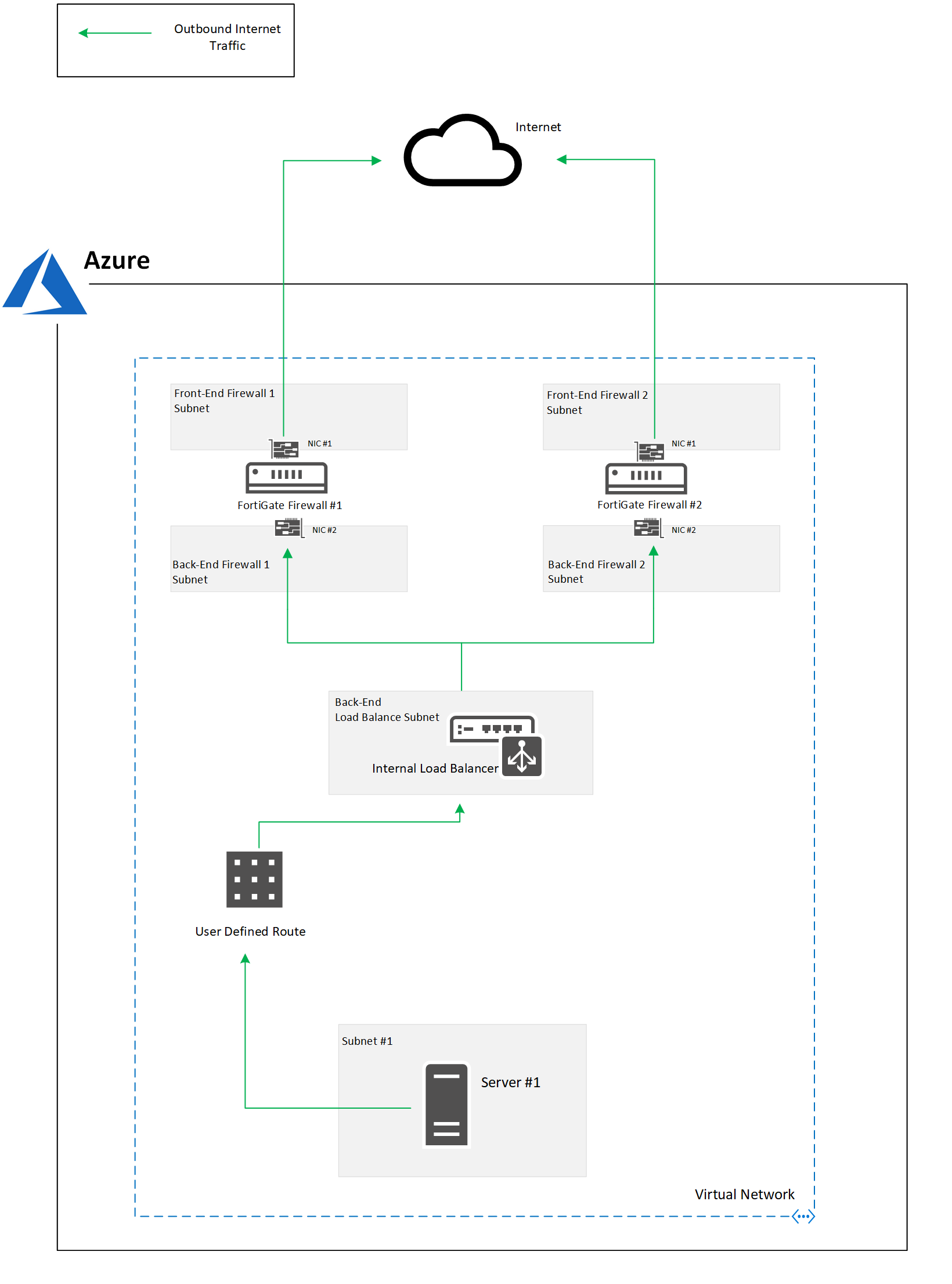

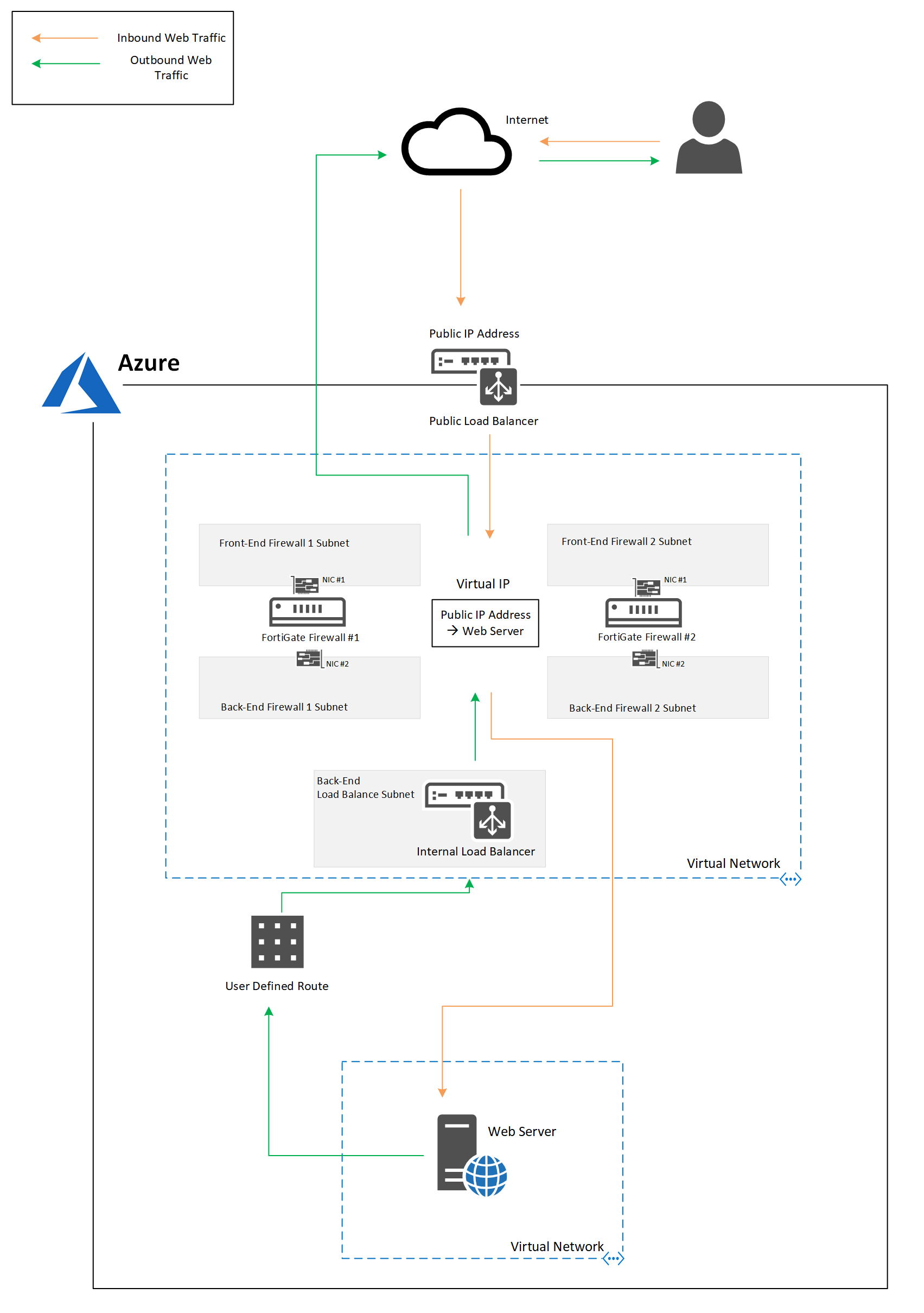

I did some digging around on the Fortinet support sites and discovered that you can achieve an active/active model in Azure using dual load balancers (a public and an internal Azure load balancer), as described in this Fortinet document: https://www.fortinet.com/content/dam/fortinet/assets/deployment-guides/dg-fortigate-high-availability-azure.pdf.

Deployment

To achieve an active/active model you must deploy two separate FortiGates using the single VM deployment option and then deploy the Azure load balancers separately.

I will not be going through how to deploy the FortiGates and the required VNets, subnets, route tables, etc., as that information can be found on Fortinet’s support site: http://cookbook.fortinet.com/deploying-fortigate-azure/.

NOTE: When deploying the FortiGates, ensure each one is deployed into its own frontend and backend subnets; otherwise the route tables will end up routing all traffic to one FortiGate.

Once you have two FortiGates, a public load balancer and an internal load balancer deployed in Azure, you are ready to configure the FortiGates.

Configuration

NOTE: Before proceeding, ensure you have configured static routes for all your Azure subnets on each FortiGate; otherwise the FortiGates will not be able to route Azure traffic correctly.

Outbound traffic

Directing all internet traffic from Azure via the FortiGates requires some configuration on the Azure internal load balancer and a user-defined route.

1. Create a load balance rule with:
    - Port: 443
    - Backend Port: 443
    - Backend Pool:
        - FortiGate #1
        - FortiGate #2
    - Health probe: Health probe port (e.g. port 22)
    - Session Persistence: Client IP
    - Floating IP: Enabled
2. Repeat step 1 for port 80 and any other ports you require
3. Create an Azure route table with a default route to the Azure internal load balancer IP address
4. Assign the route table to the required Azure subnets
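Because the same rule has to be repeated for every port, it can help to write the settings down as data before clicking through the portal. The following is only a minimal sketch: the function name, the dictionary keys and the rule naming scheme are made up for illustration and are not an Azure API.

```python
from typing import Dict, List


def build_lb_rules(ports: List[int], backend_pool: List[str], probe_port: int) -> List[Dict]:
    """Return one load balance rule description per port (illustrative only)."""
    rules = []
    for port in ports:
        rules.append({
            "name": f"lbr-outbound-{port}",      # hypothetical naming scheme
            "frontend_port": port,               # "Port" in the list above
            "backend_port": port,                # same port on the FortiGates
            "backend_pool": list(backend_pool),  # both firewalls receive traffic
            "health_probe_port": probe_port,     # e.g. 22, as in the text
            "session_persistence": "Client IP",
            "floating_ip": True,
        })
    return rules


rules = build_lb_rules([443, 80], ["FortiGate #1", "FortiGate #2"], probe_port=22)
for rule in rules:
    print(rule["name"], rule["frontend_port"], "->", rule["backend_port"])
```

Adding another port is then a one-item change to the list passed in, which mirrors the "repeat step 1" instruction.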

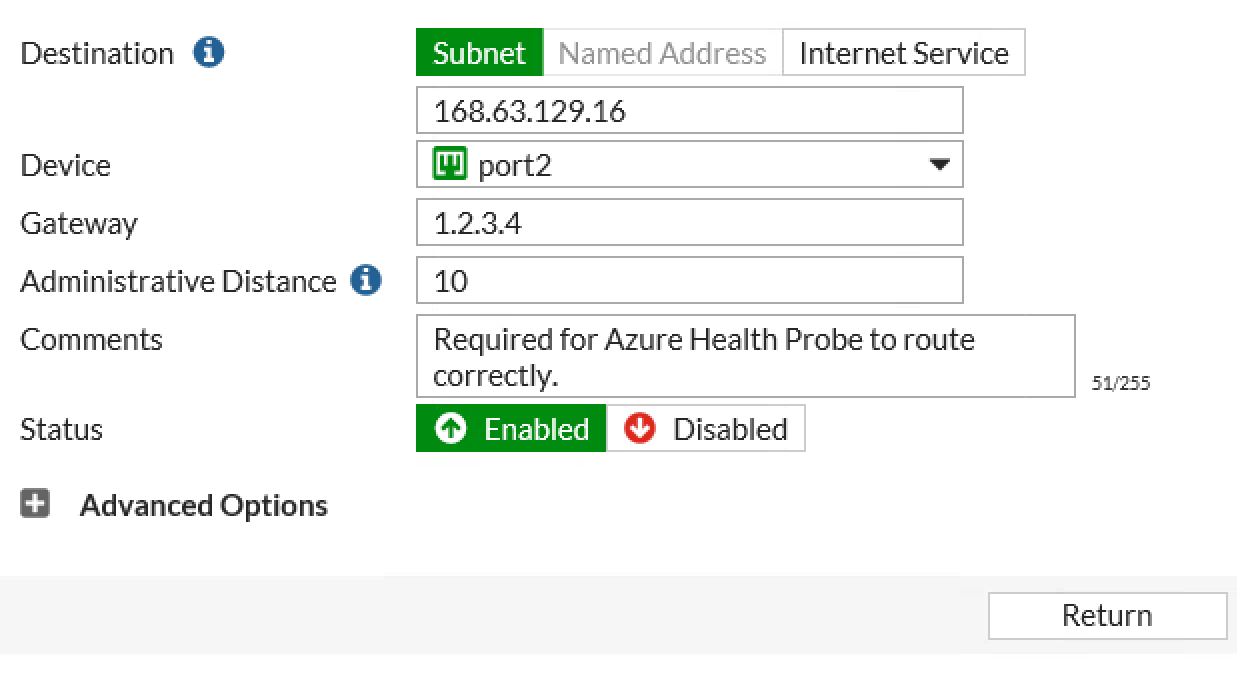

IMPORTANT: For the load balance rules to work you must add a static route on each FortiGate for IP address 168.63.129.16. This is required so the Azure health probe can communicate with the FortiGates and perform health checks.
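As a rough illustration of that static route, the sketch below prints a FortiOS CLI snippet for the probe address. The CLI layout is my assumption of the usual `config router static` form and should be checked against your FortiOS version; the gateway `10.7.0.1` and interface `port1` are placeholder values, not taken from a real deployment.

```python
AZURE_PROBE_IP = "168.63.129.16"  # health probe source address named in the text


def probe_static_route(gateway: str, device: str) -> str:
    """Build an (assumed) FortiOS CLI snippet for a host route to the probe IP."""
    lines = [
        "config router static",
        "    edit 0",  # assumption: 0 asks FortiOS for the next free entry ID
        f"        set dst {AZURE_PROBE_IP} 255.255.255.255",
        f"        set gateway {gateway}",   # placeholder next hop
        f'        set device "{device}"',   # placeholder interface name
        "    next",
        "end",
    ]
    return "\n".join(lines)


print(probe_static_route("10.7.0.1", "port1"))
```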

Once complete the outbound internet traffic flow will be as follows:

Inbound traffic

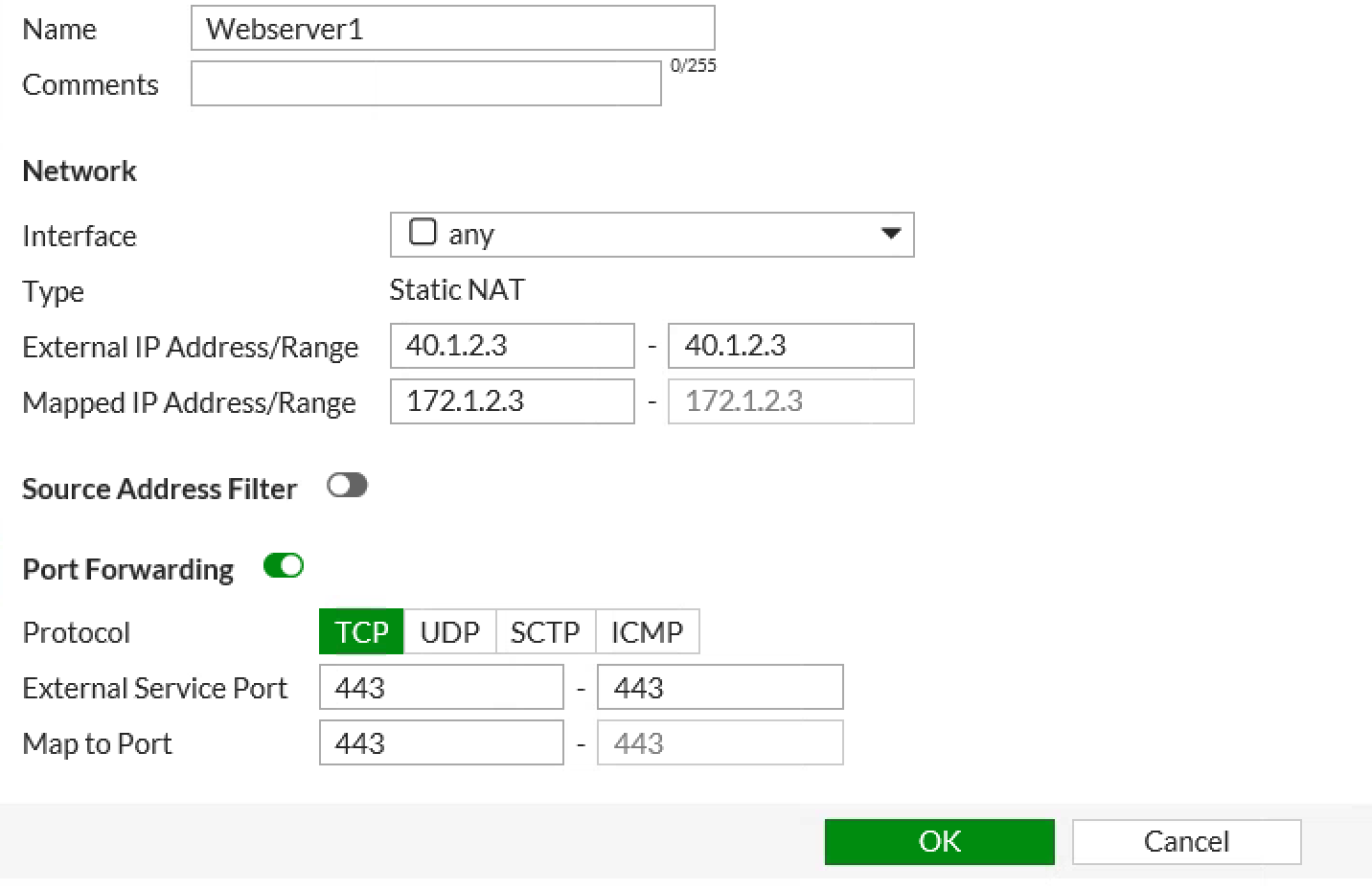

Publishing something like a web server to the internet via the FortiGates requires some configuration on the Azure public load balancer.

Let’s say I have a web server on my Azure DMZ subnet that hosts a simple website on HTTPS/443. For this example the web server has IP address 172.1.2.3.

1. Add an additional public IP address to the Azure public load balancer (for this example let’s say the public IP address is: 40.1.2.3)
2. Create a load balance rule with:
    - Frontend IP address: 40.1.2.3
    - Port: 443
    - Backend Port: 443
    - Backend Pool:
        - FortiGate #1
        - FortiGate #2
    - Session Persistence: Client IP
3. On each FortiGate create a VIP address with:
    - External IP Address: 40.1.2.3
    - Mapped IP Address: 172.1.2.3
    - Port Forwarding: Enabled
    - External Port: 443
    - Mapped Port: 443

You can now create a policy on each FortiGate to allow HTTPS to the VIP you just created; HTTPS traffic will then be allowed through to your web server.

For details on how to create policies and VIPs on FortiGates, refer to the Fortinet support website: http://cookbook.fortinet.com.
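To make the VIP and policy steps a little more concrete, here is a small sketch that prints the FortiOS CLI I would expect for the example above. Treat the CLI layout as an assumption to verify against your FortiOS version; the object names (`vip-web-443`, `allow-https-web`) and the interface names (`port1`, `port2`) are hypothetical.

```python
def vip_config(name: str, ext_ip: str, mapped_ip: str, ext_intf: str, port: int) -> str:
    """Build an (assumed) FortiOS CLI snippet for a port-forwarding VIP."""
    return "\n".join([
        "config firewall vip",
        f'    edit "{name}"',
        f"        set extip {ext_ip}",          # External IP Address
        f'        set mappedip "{mapped_ip}"',  # Mapped IP Address
        f'        set extintf "{ext_intf}"',    # hypothetical external interface
        "        set portforward enable",
        f"        set extport {port}",
        f"        set mappedport {port}",
        "    next",
        "end",
    ])


def policy_config(name: str, src_intf: str, dst_intf: str, vip_name: str) -> str:
    """Build an (assumed) FortiOS CLI snippet allowing HTTPS to the VIP."""
    return "\n".join([
        "config firewall policy",
        "    edit 0",
        f'        set name "{name}"',
        f'        set srcintf "{src_intf}"',
        f'        set dstintf "{dst_intf}"',
        '        set srcaddr "all"',
        f'        set dstaddr "{vip_name}"',    # the VIP is the policy destination
        "        set action accept",
        '        set schedule "always"',
        '        set service "HTTPS"',
        "    next",
        "end",
    ])


vip = vip_config("vip-web-443", "40.1.2.3", "172.1.2.3", "port1", 443)
policy = policy_config("allow-https-web", "port1", "port2", "vip-web-443")
print(vip)
print(policy)
```

The same two snippets would be applied to both FortiGates, since either one may receive the connection from the public load balancer.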

Once complete the traffic flow to the web server will be as follows:

Hi, we had a similar deployment but ran into a problem with the public load balancer SNATing the outbound traffic from the FortiGates randomly from a pool of public IPs, which was undesirable for email and whitelisted SaaS outbound application traffic. We just found out that we need to change a public load balancing option somewhere: https://github.com/MicrosoftDocs/azure-docs/blob/master/articles/load-balancer/load-balancer-outbound-connections.md

As in

    "loadBalancingRules": [
        {
            "disableOutboundSnat": false/true
        }
    ]

Did anybody run into this, and do you know where and how to do this?

Thanks

JP

Hi Jan,

We didn’t run into this issue, but my understanding is that to use the disableOutboundSnat feature you need the Azure Standard Public Load Balancer, which is currently in preview (https://docs.microsoft.com/en-us/azure/load-balancer/load-balancer-standard-overview).

You will then be able to create a load balance rule with the disableOutboundSnat feature. The PowerShell command to create the rule can be found here: https://docs.microsoft.com/en-us/powershell/module/azurerm.network/new-azurermloadbalancerruleconfig?view=azurermps-4.4.1
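For anyone editing a template rather than using PowerShell, the sketch below shows roughly where the flag sits in a load balancing rule. It is a partial fragment under my own assumptions: the rule name is hypothetical, and a real rule also needs references to the frontend IP configuration, backend pool and probe, which are left out here.

```python
import json


def snat_disabled_rule(name: str, port: int) -> dict:
    """Return a partial load balancing rule with outbound SNAT disabled."""
    return {
        "name": name,  # hypothetical rule name
        "properties": {
            "protocol": "Tcp",
            "frontendPort": port,
            "backendPort": port,
            "disableOutboundSnat": True,  # the flag discussed in this thread
        },
    }


fragment = {"loadBalancingRules": [snat_disabled_rule("lbr-https", 443)]}
print(json.dumps(fragment, indent=2))
```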

Hope that helps.

Thanks,

Useful.

But did you actually make it work? We are still running into an issue which I think needs the UDR rewriting capabilities in the upcoming FOS 6.5.4:

“The issue is that the public IP addresses are NATed/forwarded to an IP address on the external private range on the FortiGate, which uses a Virtual IP to translate it to the correct internal host or internal load balancer frontend IP, after which the internal load balancer moves the traffic to the required end system.

When outbound traffic is started from the internal machines, it should point to the internal load balancer associated with the FortiGate cluster, with a preference for the active firewall and not a randomly selected one!

The outbound traffic passes through the FortiGate, runs to the external load balancer’s internal IP address (the default gateway of the FortiGates) and should also be source NATed to the address used for the inbound traffic. This only works if the FortiGate source NATs to the private IP address assigned to the public addresses!

When using two FortiGates for HA, they can NOT use the same incoming IP addresses on the public-facing (WAN) interface, as it causes duplicate addresses (this is an active/active setup). So the issue is that when the primary FortiGate fails, incoming traffic must be rerouted to different IP addresses, which are configured on the second FortiGate as VIP addresses.

(Example: Azure public address 50.10.1.1 -> 10.35.1.11 on the FW1 wan1 range (10.35.1.x/24); FortiGate 1 VIP 10.35.1.11 -> 10.31.2.70 (internal host). On FW2 we use 10.35.1.110 as the VIP pointing to 10.31.2.70 to avoid duplicate IP address issues.)

So Azure must detect that FW1 has failed and then reroute 50.10.1.1 to 10.35.1.110 in order to route through FW2!

Outbound traffic hidden behind 10.35.1.110 must then be hidden behind 50.10.1.1 (when FW1 is active that will be 10.35.1.11).”

Any thoughts?

Just an FYI: the Azure Standard Load Balancer is now GA and solves the NAT issue. I have switched over the load balancer and am using a load balancing rule with HA ports and floating IP enabled, and all works correctly. It is a much more elegant solution than creating NAT pools.

Ah, I understand your issue and feel your pain, as I went through the same thing. My suggestion, if you can, is to wait for the next OS upgrade as you mentioned.

I did get it working in the end using a combination of NAT IP pools and UDRs to control the traffic. It is kind of hard to explain, but I’ll give it a shot.

– Allocate two NAT IP pools one for each firewall (e.g. 10.35.5.0/25 -> FW1 and 10.35.5.128/25 -> FW2)

– On each FortiGate add an IP Pool with a one to one mapping to the correct NAT IP Pool (e.g. FW1: 10.35.5.5 and FW2: 10.35.5.133)

– On each FortiGate add a VIP to map the NAT IP Pool address to the destination server (e.g. FW1 VIP: 10.35.5.5 -> 10.31.2.70 and FW2 VIP: 10.35.5.133 -> 10.31.2.70)

– Create UDRs for accessing the NAT IPs so that requests for the FW1 NAT IP pool are directed to FW1 and requests for the FW2 NAT IP pool are directed to FW2, bypassing the internal load balancer (e.g. UDR-FW1: 10.35.5.0/25 -> FW1 Backend IP Address and UDR-FW2: 10.35.5.128/25 -> FW2 Backend IP Address)
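The pool split above is easy to sanity check with Python’s standard `ipaddress` module. This is just a sketch of the addressing logic, assuming the two /25 pools come from a single 10.35.5.0/24 range; the helper name is made up for the example.

```python
import ipaddress

# Assumption: both NAT pools are the two halves of one /24.
nat_range = ipaddress.ip_network("10.35.5.0/24")
fw1_pool, fw2_pool = nat_range.subnets(new_prefix=25)

POOLS = {"FW1": fw1_pool, "FW2": fw2_pool}


def firewall_for(address: str) -> str:
    """Return which firewall's UDR should catch the given NAT address."""
    ip = ipaddress.ip_address(address)
    for firewall, pool in POOLS.items():
        if ip in pool:
            return firewall
    raise ValueError(f"{address} is not in any NAT pool")


print(fw1_pool, fw2_pool)           # the two UDR address prefixes
print(firewall_for("10.35.5.5"))    # example FW1 NAT address from the text
print(firewall_for("10.35.5.133"))  # example FW2 NAT address from the text
```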

As the health probe IP for both the internal and external load balancers is the same (168.63.129.16), and both load balancers point to the same set of firewalls (untrust NIC -> external LB, trust NIC -> internal LB), how do you configure the health probe route inside the FortiGate? 168.63.129.16 -> next hop???

Facing the same issue. Wondering if you found an answer to this in the meantime?

Facing the same issue. What is the next hop for 168.63.129.16 for the health probe? We are seeing the health probe traffic coming in on the external side of the FortiGate but cannot get the health probe traffic back. Any suggestions?

Hi Steve,

The next hop for the health probe should be the IP address of the Azure gateway for the external/untrust subnet. E.g. if your external-facing interface sits in an untrust/external subnet of 10.7.0.0/24, the Azure gateway IP address for that subnet will be 10.7.0.1.
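If you want to work that gateway address out for other subnets, a minimal sketch using the standard `ipaddress` module is below. It assumes, as in the example above, that the Azure gateway is the first address after the network address; the function name is made up.

```python
import ipaddress


def azure_subnet_gateway(cidr: str) -> str:
    """Return the first address after the network address of a subnet."""
    network = ipaddress.ip_network(cidr)
    return str(network.network_address + 1)


print(azure_subnet_gateway("10.7.0.0/24"))
```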

Hope that helps.

Will this work for all internal private ports 1-65535 (for internal traffic), or is this aimed mainly at the external web ports 443/80? I thought the internal LB would only accept manually specified ports, not dynamically any port. I know there was mention of a Standard Internal LB which may allow this. Would this work?

Hi Eilesh, the Azure Internal Load Balancer is a layer 4 device that supports all internal ports. The challenge with any firewall appliance is the load balancer. Unfortunately it’s a manual process to configure rules for each port that needs to be load balanced. However, once a port is added you don’t need to add it again, as there’s no source to worry about, just the destination.

Hi Eilesh,

The Standard Internal Load Balancers have an option when creating load balancing rules where you can enable “HA Ports” which will load balance all private ports.

The issue Lucian was alluding to is that this is not currently possible on public load balancers, so when load balancing traffic from the internet you will need to manually add a load balancer rule for each port you would like to load balance.

Thanks for the updates. I still wanted some clarification, AJ, if possible (this is mainly for internal traffic using FW appliances in Azure).

If we use the Standard Azure Internal LBs and enable HA ports can we now:

1) Set our UDR rules to force all traffic from all subnets to the Internal LB (with HA ports enabled), and this will then allow all ports 0 to 65535 to travel through it?

The Internal LB will then be attached to, say, two or more FW appliances, to give us an active/active FW setup?

e.g. traffic from a VM is forced to go via the Standard Int. LB and then hits one of the FW appliances (will the appliances see all the traffic being sent/received?)

2) If that’s true, then potentially any FW appliance can now be set up in Azure in an active/active setup (previously not possible), e.g. Barracuda, Check Point, Fortinet or other Azure FW appliances. Is that the case?

My general experience with Barracuda, Check Point and Palo Alto appliances in the cloud is that they all depend on API scripts for true HA, which switches in an active/passive state using an application API registration to change the UDR IP from primary to secondary in the event of failover.

3) What I am seeking clarity on is whether this is no longer required: can the newer Std Azure Internal LB (with the HA ports option enabled) bypass this app registration/script requirement and use any FW appliance in an active/active setup, UDR’d to just the Std Azure Int. LB?

I am hoping the above makes sense, as after asking the same of both Microsoft and many of the FW appliance providers I never really got a definitive answer on this. My biggest issue with active/passive is that the client pays for two firewalls, one of which is hardly ever used unless failed over, and it relies on the API registration to allow it access to change UDR settings in the event of DR. (It works, but it’s not ideal and there is always some downtime as the UDR rules change.) If we can always set the UDR to the Int. LB and force all ports through it, then active/active without any other script/API calls would be great.

Yes, that is correct. You can now use the Azure Standard Load Balancer with a UDR to force all outbound traffic out of two NVAs in an active/active scenario.

I just recently did this exact same thing with two Juniper vSRX’s in Azure.
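As a rough picture of the two pieces involved, the sketch below builds template-style fragments for an HA ports rule and a default route pointing at the internal load balancer. It is only an outline under my own assumptions: the names and the `10.0.1.4` frontend address are hypothetical, and a real rule also needs its frontend, backend pool and probe references.

```python
import json


def ha_ports_rule(name: str) -> dict:
    """Partial HA ports rule: all protocols, port 0 on both sides."""
    return {
        "name": name,
        "properties": {
            "protocol": "All",
            "frontendPort": 0,
            "backendPort": 0,
        },
    }


def default_route_via_ilb(ilb_frontend_ip: str) -> dict:
    """UDR entry sending all traffic to the internal load balancer."""
    return {
        "name": "default-via-ilb",  # hypothetical route name
        "properties": {
            "addressPrefix": "0.0.0.0/0",
            "nextHopType": "VirtualAppliance",
            "nextHopIpAddress": ilb_frontend_ip,
        },
    }


rule = ha_ports_rule("lbr-ha-ports")
route = default_route_via_ilb("10.0.1.4")  # hypothetical ILB frontend IP
print(json.dumps({"rule": rule, "route": route}, indent=2))
```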

AJ, that’s great to hear. Were there any downsides or additional configuration required to avoid things like asymmetric routing?

There were definitely challenges in getting this working, and I’ve found each NVA product I’ve worked with requires something different.

The Fortinets, for example, had the ability to enable “session-sync” between both devices so they were aware of each other’s sessions even though they acted as individual appliances.

With the Junipers there were no such features, and I had to configure a combination of SNAT and UDRs to ensure the traffic destined for a DMZ web server routed back the same way.

Is there a configuration you’re willing to share to get this working on e.g. the Fortinet or Juniper appliances? That would help us do a POC. A template or steps would be a great help. Thanks

AJ, also, do any of the vendors out there recognise this new option? Most of them still state in their docs to use the API script call methods for active/passive and don’t appear to support this newer method yet, that I can see anyway. Does Juniper specifically support this in Azure?

I can’t speak for the vendors, but I imagine it would take some time to package a new version of their product and make it available on the Azure Marketplace. Considering Standard LBs were released only recently, I won’t be surprised if it takes some more months before anything is made available.

GitHub is always worth a look and is my go to.

AJ, have the new Standard Load Balancers changed things enough that the architecture described in the original post is no longer optimal?

Is it still necessary, for instance, to build the two FortiGates on two different subnets, when the UDRs will point all traffic to the LB addresses anyway?

It looks like Fortinet’s own active-passive HA template has actually been removed from Azure. As usual, things are changing so fast it’s hard to keep up.

Hi Rob,

I do agree things move fast nowadays and it’s hard to keep up.

Last I checked, the FortiGates required their own individual routes in order to route traffic correctly, which is why you needed multiple subnets. It’s quite possible this is no longer a requirement.

If you are considering deploying an NVA in Azure, I would recommend waiting (if you can) to see what Azure Firewall brings to the table before committing to a product of choice.

Hi,

How can we achieve an active-passive HA FortiGate firewall without a load balancer on Azure?