UPDATE 10/02/2017

OK, sorry everyone, I’ve been a bit slack with this one, and Microsoft have made some significant changes in this space since I blogged about it. I thought it best to update this page so that anyone who googles it gets current info!

Firstly, Microsoft have changed the BLOB they give you for the ingestion service to write-once. This of course means that if you don’t place things in the right location (the right folder, for example), they’re not going anywhere! While a little inconvenient, I think it’s still perfectly acceptable: they are still giving you unlimited BLOB storage at no cost to upload and ingest your PSTs. I have read other blogs that talk about signing up for your own Azure storage account (which would work really well with the method in my original post below), but as that incurs a cost, I’m not sure it’s really necessary for the majority of customers.

Also, I found that the old ‘Azure Storage Explorer 6’ wasn’t able to interact with the new BLOB, so you couldn’t visualise the uploads with a GUI. Microsoft have since released a new version in preview, with the ‘6’ dropped from the name, which can be downloaded here instead. Oh, and it’s really straightforward to use!

Finally, Microsoft now recommend using the GUI, and I must admit that, overall, it works pretty well. I used it on a recent project and found it far superior to what it was originally. On that project I automated the AzCopy commands and the CSV generation, but then loaded the CSV and ran the import via the GUI. If anyone wants to see some examples, leave a comment below and I’ll write a new blog post on it in the coming weeks! Original post below…

If you’ve been involved in an Exchange migration to Office 365 of late, I’m sure you’ll be well aware of the new Microsoft Office 365 Import Service. Simply put, give them your PSTs via hard disk or over the network, and they’ll happily ingest them into the mailbox of your choice. What’s not to like? If you’ve been hiding under a rock, the details are available here!

Anyway, rather than marvel at the new Microsoft offering, I thought I’d share my recent experience with the network upload option. When I first started playing [read: testing], I soon noticed that the portal GUI was, well, struggling to keep up with the requests. So instead I reached out to MS and found that this is all possible programmatically using PowerShell!

First of all, you need to get the files into an Azure Blob. That’s done with AzCopy, which is available at this link. Then you’ll want to grab Azure Storage Explorer, which is available here. Granted, you could probably do all of this via PowerShell, but when there’s a good GUI, sometimes it’s just easier.

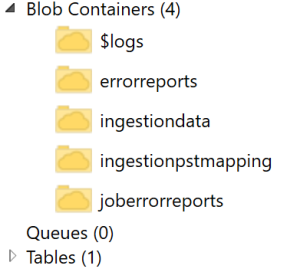

When you fire up Storage Explorer, you’ll need the storage key. This is available in the portal under Admin | Import, where you’ll see a key icon labelled ‘Copy Office 365 Storage Key and URL’. Once you’re connected, you should see some default folders, as in my screenshot.

Select one, click Security, select the Shared Access Key tab and generate a signature. Now that we’ve got the necessary items, we can plumb them into variables, as I’ve demonstrated below…

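```powershell
# Legacy AzCopy (v5-era) syntax, as used at the time of writing
cd "C:\Program Files (x86)\Microsoft SDKs\Azure\AzCopy"

# UNC path containing the PSTs to upload
$Source = "\\Server\Share"

# Container URL and storage key copied from the Office 365 Import page (redacted)
$Destination = "https://55f6dd****49d9a5bcf36.blob.core.windows.net/ingestiondata/"
$StorageKey = "9XAsHc/OmkZgdrSXkE+***+njq9qQWvt*******rXysz//CvB2j6mlb6XSJgi4bfh+Br6Onn*****AEVtA=="

# Verbose log of every file transferred
$LogFile = "C:\Users\dyoung\Documents\Uploads.log"

# /Pattern limits the upload to PST files, /S recurses into subfolders, /V writes the log
& .\AzCopy.exe /Source:$Source /Dest:$Destination /DestKey:$StorageKey /Pattern:"*.pst" /S /V:$LogFile
```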

This will grab all the PSTs from the \\Server\Share UNC path and upload them to the ingestiondata directory. The log file is pretty useful, and the PowerShell window will let you know once the upload is complete.
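Since the update at the top of this post, the Import page hands out a single SAS URL rather than a storage key (as a commenter notes further down), and AzCopy accepts that URL directly in /Dest. Below is a minimal sketch that builds the argument list once and splats it, assuming the same legacy AzCopy syntax used above; `New-AzCopyPstArgs` is a hypothetical helper of my own, the SAS URL is a placeholder, and newer AzCopy versions use a different command syntax altogether.

```powershell
# Hypothetical helper: build legacy AzCopy arguments for the single SAS URL.
function New-AzCopyPstArgs {
    param(
        [Parameter(Mandatory)][string]$Source,
        [Parameter(Mandatory)][string]$SasUrl,
        [Parameter(Mandatory)][string]$LogFile
    )
    @(
        "/Source:$Source"
        "/Dest:$SasUrl"
        '/Pattern:*.pst'   # only upload PST files
        '/S'               # recurse into subfolders
        "/V:$LogFile"      # verbose log
        '/Y'               # suppress confirmation prompts
    )
}

# Placeholder SAS URL: paste the real one from the Import page
$AzArgs = New-AzCopyPstArgs -Source '\\Server\Share' `
    -SasUrl 'https://<account>.blob.core.windows.net/ingestiondata?<sas-token>' `
    -LogFile 'C:\Temp\Uploads.log'
$AzArgs

# To run it:
# & "C:\Program Files (x86)\Microsoft SDKs\Azure\AzCopy\AzCopy.exe" @AzArgs
```

Keeping the arguments in an array makes it easy to eyeball exactly what will be passed before anything is uploaded.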

Now we’ve got our PSTs up in Azure, but we need to let MS know where to ingest them. For this, we need to generate a CSV. When creating the PSTs, I found it useful to name each one after the user’s AD display name (DisplayName.pst), simply so the CSV creation can be automated. I also added incremental numbers to the end of the file names, as it’s possible to import multiple PSTs into a single mailbox…

e.g. ‘Dave Young.pst’, ‘Dave Young1.pst’ and ‘Dave Young2.pst’ would all be imported into Dave Young’s mailbox.
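That naming convention lends itself to a small helper that turns a file name back into a display name. Here’s a minimal sketch; `Get-DisplayNameFromPst` is a hypothetical name of my own. It strips the extension and the whole numeric suffix, so ‘Dave Young10.pst’ resolves as well as ‘Dave Young1.pst’, and the preview at the end shows how many files would land in each mailbox before anything is submitted.

```powershell
# Hypothetical helper: derive the display name from a PST file name by
# dropping the extension and any trailing digits.
function Get-DisplayNameFromPst {
    param([Parameter(Mandatory)][string]$FileName)
    $BaseName = [System.IO.Path]::GetFileNameWithoutExtension($FileName)
    # '\d+$' removes the whole numeric suffix; TrimEnd handles 'Dave Young 2.pst'
    return ($BaseName -replace '\d+$', '').TrimEnd()
}

# Preview how many PSTs would map to each display name
$Names = 'Dave Young.pst', 'Dave Young1.pst', 'Dave Young2.pst', 'Jane Smith.pst'
$Names | Group-Object { Get-DisplayNameFromPst $_ } |
    ForEach-Object { '{0}: {1} file(s)' -f $_.Name, $_.Count }
```

In practice you’d feed it the names from `Get-ChildItem '\\Server\Share' -Filter *.pst` instead of a hard-coded list.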

I also decided to import into the users’ archive mailboxes (as I’d already given the users an archive), and I wanted the PSTs in the root folder. These options are all covered in the TechNet article here…

```powershell
# Folder in the Azure container the PSTs were uploaded to ("/" = root)
$FilePath = "/"

# Container URL and SAS token from the Import page (values redacted)
$ContainerUri = 'https://55f6ddf********a5bcf36.blob.core.windows.net/ingestiondata/'
$SASToken = '?sv=2014-02-14&sr=c&sig=2dSSbF9CsQ******HpwHOJy4pmGFvsniv46hZh%2BA%3D&st=2016-02-01T13%3A30%3A00Z&se=2016-02-09T13%3A30%3A00Z&sp=r'

$AllPSTs = Get-ChildItem '\\Server\Share' -Filter "*.pst"

$CSVTable = foreach ($PST in $AllPSTs) {

    # 'Dave Young2.pst' -> 'Dave Young': drop the extension and any trailing digits
    $DisplayName = ($PST.BaseName -replace '\d+$', '').TrimEnd()

    # Resolve the display name to a mailbox; skip files that don't match one
    $Mailbox = Get-Mailbox -Identity $DisplayName -ErrorAction SilentlyContinue
    if (-not $Mailbox) {
        Write-Warning "No mailbox found for '$DisplayName' ($($PST.Name)); skipping."
        continue
    }

    # One row per PST, using the column names of the import mapping file
    [PSCustomObject]@{
        Workload                   = 'Exchange'
        FilePath                   = $FilePath
        Name                       = $PST.Name
        Mailbox                    = $Mailbox.UserPrincipalName
        AzureSASToken              = $SASToken
        AzureBlobStorageAccountUri = $ContainerUri + $PST.Name
        IsArchive                  = 'TRUE'
        TargetRootFolder           = '/'
        SPFileContainer            = ''
        SPManifestContainer        = ''
        SPSiteUrl                  = ''
    }
}

# Timestamped file name, e.g. PstImportMappingFile-2017-02-10-1430.csv
$CSVTable | Export-Csv "PstImportMappingFile-$(Get-Date -Format 'yyyy-MM-dd-HHmm').csv" -NoTypeInformation
```
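Before creating any jobs, it can be worth sanity-checking the CSV, particularly if it has been hand-edited or a display name didn’t resolve to a mailbox. A minimal sketch follows; `Test-PstMappingCsv` is a hypothetical function of my own, and the list of required columns is my assumption based on the columns the script writes, not an official schema check.

```powershell
# Hypothetical check: report missing columns and rows with no mailbox.
function Test-PstMappingCsv {
    param([Parameter(Mandatory)][string]$Path)
    $Required = 'Workload', 'FilePath', 'Name', 'Mailbox', 'IsArchive', 'TargetRootFolder'
    $Rows = @(Import-Csv -Path $Path)
    if ($Rows.Count -eq 0) { return @('CSV contains no rows') }

    $Problems = @()
    $Columns = $Rows[0].PSObject.Properties.Name
    foreach ($Column in $Required) {
        if ($Columns -notcontains $Column) { $Problems += "Missing column: $Column" }
    }
    foreach ($Row in $Rows) {
        # A blank Mailbox means the import has nowhere to go
        if ([string]::IsNullOrWhiteSpace($Row.Mailbox)) {
            $Problems += "No mailbox resolved for $($Row.Name)"
        }
    }
    $Problems
}
```

Usage: `@(Test-PstMappingCsv -Path .\PstImportMappingFile-….csv)` returns an empty array when nothing looks wrong.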

Hopefully the CSV script produces a pretty decent CSV. Now it’s time to create the import jobs. Now, you might be thinking there’s no ‘New-o365MailboxImportRequest’ cmdlet, and you’d be right. I’ve used a prefix so I could load both the on-premises and cloud cmdlets into the same PowerShell session. It’s achieved with ‘Import-PSSession $Session -Prefix o365’, which is described in point 2 here.

```powershell
Add-Type -AssemblyName System.Windows.Forms

# Let the user pick the mapping CSV generated earlier
$FileBrowser = New-Object System.Windows.Forms.OpenFileDialog -Property @{
    InitialDirectory = [Environment]::GetFolderPath('MyDocuments')
    Filter           = 'CSV (*.csv)|*.csv'
}

if ($FileBrowser.ShowDialog() -ne [System.Windows.Forms.DialogResult]::OK) {
    Write-Warning 'No CSV selected.'
    return
}

# FileName is the full path; SafeFileName is only the file name, which fails
# unless the CSV happens to be in the current directory
Import-Csv -Path $FileBrowser.FileName | ForEach-Object {
    New-o365MailboxImportRequest -Mailbox $_.Mailbox `
        -AzureBlobStorageAccountUri $_.AzureBlobStorageAccountUri `
        -AzureSharedAccessSignatureToken $_.AzureSASToken `
        -TargetRootFolder $_.TargetRootFolder `
        -BadItemLimit Unlimited -AcceptLargeDataLoss `
        -IsArchive:([bool]::Parse($_.IsArchive))
}
```
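If you’d rather review exactly what each job will receive before submitting it, one option is to turn each CSV row into a hashtable and splat it. This is a sketch under my own assumptions: `ConvertTo-ImportRequestParams` is hypothetical, the parameter names are the ones used in the import command above, and the sample row uses placeholder values.

```powershell
# Hypothetical helper: map one CSV row to New-o365MailboxImportRequest parameters.
function ConvertTo-ImportRequestParams {
    param([Parameter(Mandatory)][psobject]$Row)
    @{
        Mailbox                         = $Row.Mailbox
        AzureBlobStorageAccountUri      = $Row.AzureBlobStorageAccountUri
        AzureSharedAccessSignatureToken = $Row.AzureSASToken
        TargetRootFolder                = $Row.TargetRootFolder
        BadItemLimit                    = 'Unlimited'
        AcceptLargeDataLoss             = $true
        # 'TRUE'/'FALSE' from the CSV becomes a real switch value
        IsArchive                       = [bool]::Parse($Row.IsArchive)
    }
}

# Placeholder row, shaped like the mapping CSV
$Row = [pscustomobject]@{
    Mailbox = 'dave.young@contoso.com'; TargetRootFolder = '/'; IsArchive = 'TRUE'
    AzureBlobStorageAccountUri = 'https://<account>.blob.core.windows.net/ingestiondata/<file>.pst'
    AzureSASToken = '?sv=...'
}
$Params = ConvertTo-ImportRequestParams -Row $Row
$Params.GetEnumerator() | Sort-Object Name | Format-Table -AutoSize

# Once you're happy: New-o365MailboxImportRequest @Params
```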

The jobs can then be checked in PowerShell using ‘Get-MailboxImportRequest’ and ‘Get-MailboxImportRequestStatistics’ (or ‘Get-o365MailboxImportRequest’ and ‘Get-o365MailboxImportRequestStatistics’ if you imported the session with the o365 prefix).
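With a few thousand mailboxes, a per-status summary is easier to read than a long list. Here is a minimal sketch; `Get-ImportStatusSummary` is a hypothetical function of my own, and it assumes the statistics objects expose `Status` and `PercentComplete` properties. It’s exercised below with made-up objects rather than real job output.

```powershell
# Hypothetical helper: group import statistics by status with an average % complete.
function Get-ImportStatusSummary {
    param([Parameter(Mandatory, ValueFromPipeline)][psobject]$Statistics)
    begin { $All = New-Object System.Collections.Generic.List[psobject] }
    process { $All.Add($Statistics) }
    end {
        $All | Group-Object -Property { "$($_.Status)" } | Sort-Object Name |
            ForEach-Object {
                $Avg = ($_.Group | Measure-Object -Property PercentComplete -Average).Average
                [pscustomobject]@{
                    Status     = $_.Name
                    Count      = $_.Count
                    AvgPercent = [math]::Round($Avg, 1)
                }
            }
    }
}

# Made-up sample objects standing in for real statistics
$Fake = @(
    [pscustomobject]@{ Status = 'Completed';  PercentComplete = 100 },
    [pscustomobject]@{ Status = 'InProgress'; PercentComplete = 40 },
    [pscustomobject]@{ Status = 'InProgress'; PercentComplete = 60 }
)
$Fake | Get-ImportStatusSummary
```

Against a live tenant, the input would come from something like `Get-o365MailboxImportRequest | Get-o365MailboxImportRequestStatistics`.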

And that’s pretty much it. Yes, it was quick, but I reckon it’s pretty self-explanatory, and it’s considerably more efficient than doing it via the GUI. Enjoy!

Cheers,

Dave

Thanks a ton for sharing this. This is the only thing I’ve found about how to automate O365 email ingestion. We have several thousand mailboxes, so getting New-MailboxImportRequest to work will save a lot of busywork. I am getting a (403) Forbidden error from the New-MailboxImportRequest command. My SAS token works in a browser (I’ve tried a token both for the directory and for a specific file). Let me know if you have any ideas about what may be causing it.

No problem Tony, glad it was of use. (403) Forbidden is nearly always the SAS token: we have found it expires fairly regularly, and if you renew it, it will break all existing active jobs. You can update the existing jobs using Set-o365MailboxImportRequest -AzureSharedAccessSignatureToken…hope this helps!
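Since an expired token is the usual suspect for a 403, it can help to read the expiry straight out of the token. In the token format used in the CSV script, the `se` field carries the expiry time. A minimal sketch follows; `Get-SasExpiry` is a hypothetical name of my own, and the sample token is made up apart from mirroring that format.

```powershell
# Hypothetical helper: read the 'se' (signed expiry) field from a SAS token or SAS URL.
function Get-SasExpiry {
    param([Parameter(Mandatory)][string]$Sas)
    if ($Sas -match '(?:^|[?&])se=([^&]+)') {
        # e.g. 2016-02-09T13%3A30%3A00Z -> 2016-02-09T13:30:00Z
        $Raw     = [System.Uri]::UnescapeDataString($Matches[1])
        $Culture = [System.Globalization.CultureInfo]::InvariantCulture
        $Styles  = [System.Globalization.DateTimeStyles]::AdjustToUniversal
        return [datetime]::Parse($Raw, $Culture, $Styles)
    }
    return $null   # no expiry field found
}

$Token = '?sv=2014-02-14&sr=c&sig=REDACTED&st=2016-02-01T13%3A30%3A00Z&se=2016-02-09T13%3A30%3A00Z&sp=r'
$Expiry = Get-SasExpiry $Token
if ($Expiry -lt [datetime]::UtcNow) { "SAS token expired at $($Expiry.ToString('u'))" }
```

If it has expired, grab a fresh token and update the existing jobs with Set-o365MailboxImportRequest -AzureSharedAccessSignatureToken, as described above.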

Hello,

I have imported a session for O365 and can access quite a few new cmdlets, such as New-O365Mailbox, however the New-o365MailBoxImportRequest doesn’t seem to be available.

As I am not part of the team that normally manages the Exchange / O365 tenant, I suspect this could be a case of my account missing access rights; on the other hand, it may be because the tenant doesn’t have this functionality yet. Apart from engaging Microsoft support directly, are there any gotchas that could simply have gotten the best of me?

I did receive the following warning:

WARNING: Your connection has been redirected to the following URI: “https://ps.outlook.com/PowerShell-LiveID?PSVersion=3.0 ”

WARNING: The names of some imported commands from the module ‘tmp_gofezbbg.dfv’ include unapproved verbs that might make them less discoverable. To find the commands with unapproved verbs, run the Import-Module command again with the Verbose parameter. For a list of approved verbs, type Get-Verb.

Unfortunately, the output is still as follows:

New-o365MailboxImportRequest : The term ‘New-o365MailboxImportRequest’ is not recognized as the name of a cmdlet

Import:

Import-PSSession $Session -Prefix o365

Hi Nicke, that is strange, as it certainly works without a problem for me. If you load up ISE and type New-o365, it will bring up all the available commands, and New-o365MailboxImportRequest should be there. I have noticed that with the o365 prefix, some people have used a zero rather than an O, which of course won’t work.

Well, I raised a request with MS regarding this and was told that the cmdlet is not available. My guess is that this isn’t deployed to everyone.

Yeah, I can see multiple other New-O365 cmdlets – so I hope I got the spelling right.

In order to see those cmdlets, your account needs to be a member of Organization Management, as well as having the ‘Mailbox Import Export’ and ‘Mailbox Search’ roles added to your account/group.

Yeah, I noticed that. Back on track again. I’m a bit surprised that O365 Dev support was so adamant that this cmdlet wasn’t available, and I was caught off guard by that.
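When a prefixed cmdlet “is not recognized”, the two causes raised in this thread are a mistyped prefix (zero instead of O) and missing roles. A quick way to rule out the first is to ask PowerShell directly. This is a minimal sketch; `Test-CmdletAvailable` is a hypothetical helper of my own built on the standard Get-Command cmdlet.

```powershell
# Hypothetical helper: is a (possibly prefixed) cmdlet loaded in this session?
function Test-CmdletAvailable {
    param([Parameter(Mandatory)][string]$Name)
    [bool](Get-Command -Name $Name -ErrorAction SilentlyContinue)
}

# Compare the letter-O prefix with the common zero typo
foreach ($Name in 'New-o365MailboxImportRequest', 'New-0365MailboxImportRequest') {
    '{0}: {1}' -f $Name, (Test-CmdletAvailable $Name)
}
```

If neither shows as available, `Get-Command -Noun o365MailboxImportRequest` lists whatever import-request cmdlets the session did load; an empty result points back at the role assignments.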

This has been an awesome resource so far. Thank you! I have been able to follow all of the steps and have reached the last portion of the blog, but am running into some issues. New-MailboxImportRequest doesn’t seem to like the URI I have supplied. I have constructed the AzureBlobStorageAccountUri like so:

PowerShell: ($AzureBlobStorageAccountUri + $row.FilePath + "/" + $row.Name)

Resulting String: https://XXXX.blob.core.windows.net/ingestiondata/username/psts/Archive001.pst

and all of the other relevant cmdlet parameters have also been supplied. When I actually kick off the cmdlet, though, I get 404 Not Found. If I connect to the ingestiondata storage container via PowerShell, I can see the blob in question in the ingestiondata container, but attempts to perform a read and/or delete operation both fail with a 404 Not Found.

What kind of issue am I facing? Is this an access/permissions issue? Is the container not set up correctly?

Any insight is appreciated.
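A 404 can have several causes (Dave suggests checking the path in Azure Storage Explorer, and later commenters suspect the SAS permissions), but one easy thing to rule out is a doubled or missing slash when concatenating the container URI, FilePath and file name; blob names are also case-sensitive. A minimal sketch of a joiner that always produces exactly one ‘/’ between parts, with `Join-BlobUri` as a hypothetical name of my own:

```powershell
# Hypothetical helper: join container URI, optional folder path and PST name
# with exactly one '/' between each part.
function Join-BlobUri {
    param(
        [Parameter(Mandatory)][string]$ContainerUri,
        [string]$FilePath = '',
        [Parameter(Mandatory)][string]$Name
    )
    $Segments = @($ContainerUri.TrimEnd('/'))
    foreach ($Part in ($FilePath -split '/')) {
        if ($Part) { $Segments += $Part }   # skip empty pieces from leading/trailing '/'
    }
    $Segments += $Name.Trim('/')
    $Segments -join '/'
}

Join-BlobUri -ContainerUri 'https://XXXX.blob.core.windows.net/ingestiondata/' `
    -FilePath '/username/psts/' -Name 'Archive001.pst'
```

Comparing its output with the path you see in Storage Explorer character by character is a quick way to spot a stray slash or a case mismatch.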

Hi,

I’m trying to use this procedure, but the SAS key provided by Microsoft doesn’t have read access to the Azure storage container. I can upload and enumerate files, but I can’t download files and I get the following error when I run New-MailboxImportRequest: “Unable to open PST file ‘https://xxxx.blob.core.windows.net/ingestiondata/xxxx.pst’. Error details: The remote server returned an error: (404) Not Found.”

I’m able to use New-MailboxImportRequest with my own Azure storage account where I have full access, but not with the ingestiondata container and SAS key provided by Microsoft.

Has Microsoft changed the permissions on the SAS key they give you? Does this process still work?

Thanks!

Hi All,

I’ve found the SAS keys will expire, so you need to grab new ones on a relatively regular basis.

For the 404 not found, I would recommend using Azure Storage Explorer to confirm that the path is valid!

Cheers,

Dave

I noticed the same thing as Trevor.

Tested with explorer and I can’t even download the files. So the token appears to be write only…

Maybe the CSV is the only way for now…

Hi Markus,

I actually used this procedure this morning for some further ingestions and it still worked, so I don’t believe anything has changed at MS’s end to break the procedure.

Cheers,

Dave

Hi Dave.

Could you please elaborate on how exactly you split the SAS URL that MS gives you into the account URI and SAS token? I tried multiple ways but could not get it to work. I guess the problem has something to do with that…

-Markus

Dear Dave,

I’m trying to find out how to delete the PST files now that I’ve completed the import process. Not sure if it’s just me, but I feel the need to do a bit of post-import cleanup, and I’d rather not leave PST files sitting there. I tried deleting via the Azure Storage Explorer GUI, but it keeps saying “Resource not found. Do you have the required permissions?” If the SAS key is write-only, then that explains the error message. But that still leaves me trying to figure out how to delete those PST files.

The pst files will delete after 60 days. You can’t delete them manually.

If you are concerned about privacy, you can overwrite your existing psts in ingestiondata with empty files, though…
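For that overwrite workaround, the local half is just creating empty files with the same names as the uploaded PSTs. A minimal sketch, with `New-PstPlaceholder` as a hypothetical helper of my own; the upload step is left as a comment because it depends on your own SAS URL, and this only replaces the content, it does not delete anything from Microsoft’s storage.

```powershell
# Hypothetical helper: create zero-byte files named like the uploaded PSTs.
function New-PstPlaceholder {
    param(
        [Parameter(Mandatory)][string[]]$Name,
        [Parameter(Mandatory)][string]$Path
    )
    New-Item -ItemType Directory -Path $Path -Force | Out-Null
    foreach ($File in $Name) {
        # -Force replaces any existing local file with an empty one
        New-Item -ItemType File -Path (Join-Path $Path $File) -Force
    }
}

$Dir = Join-Path ([System.IO.Path]::GetTempPath()) 'pst-placeholders'
$Placeholders = New-PstPlaceholder -Name 'Dave Young.pst', 'Dave Young1.pst' -Path $Dir

# Then upload over the originals with legacy AzCopy, e.g.:
# & .\AzCopy.exe /Source:$Dir /Dest:"<SAS URL>" /Pattern:"*.pst" /Y
```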

Hi Markus, do you have Azure Storage Explorer set up? If so, can you provide an example of one PST that you are trying to import into one mailbox, e.g. the line in the CSV, the location of the file in BLOB storage, etc.?

Cheers,

Dave

Hi Dave.

MS nowadays only gives out a single SAS URL instead of an account URI and shared access token. It looks like this (not a real one): https://925b8kh8b9er24y98dfd539.blob.core.windows.net/ingestiondata?sv=2012-02-12&se=2016-07-17T08%3A11%3A25Z&sr=c&si=IngestionSasForAzCopy201606161313568527&sig=R9dYiHX1ATBj7BVUDhRScHGCrtEfsTYuB4sdFnfRX%2BE%3D

With azcopy:

azcopy /source:C:\temp2\pst /dest:"https:….." works.

Obviously the first part, before “.blob”, is the account URI, and the part after “&sig=” is the token. %2B and %3D resolve to + and = respectively, which looks like a proper token (at least it is the same length).

Using Azure Storage Explorer (linked in this blog), I receive 403 Forbidden. BUT if I use http://storageexplorer.com/ (I think MSDN or something led me there), I can connect using the full SAS URL and see the uploaded files. BUT I cannot download or delete them: “Resource not found. Do you have the required permissions?” The only explanation I can think of is that the account is write-only.

For the same reason, New-MailboxImportRequest gives 403. I have tried different ways of providing “-AzureSharedAccessSignatureToken”: only the part after “&sig=”, and everything after and including “?sv=”, but no dice.

Dave, do you know of some way to still get the URL and token separately today, as my Azure page only shows the full SAS URL…

Hopefully my explanation is clear enough…
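Going by the original CSV script, its AzureSASToken value starts with ‘?sv=’ and its AzureBlobStorageAccountUri is the container URL plus the file name. So, as an untested sketch assuming the cmdlet expects those same two shapes, the SAS URL would be split at the ‘?’ rather than at ‘&sig=’. `Split-SasUrl` is a hypothetical helper of my own, and it only splits the string: it won’t help if the SAS itself lacks read permission, as several commenters suspect.

```powershell
# Hypothetical helper: split a container SAS URL into container URI and SAS token.
function Split-SasUrl {
    param([Parameter(Mandatory)][string]$SasUrl)
    $Uri = [System.Uri]$SasUrl
    [pscustomobject]@{
        # scheme + host + path, e.g. https://<account>.blob.core.windows.net/ingestiondata
        ContainerUri = $Uri.GetLeftPart([System.UriPartial]::Path)
        # the whole query string, including the leading '?'
        SasToken     = $Uri.Query
    }
}

$Parts = Split-SasUrl 'https://acct.blob.core.windows.net/ingestiondata?sv=2012-02-12&sr=c&sig=abc'
$Parts | Format-List
```

The per-file URI would then be `$Parts.ContainerUri + '/' + '<file>.pst'`, with `$Parts.SasToken` going into the AzureSASToken column.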

Hi Kevin,

I just deleted a PST there using the Azure Storage Explorer. I clicked on ingestiondata in the left pane, then selected the PST from the right and clicked on the Delete button along the top ribbon…clicked yes to the prompt and the PST was gone. Is this not what you see?

Cheers,

Dave

Dear Dave,

Thank you for your reply. When trying to delete a PST file via Azure Storage Explorer as you described, I get a “Resource not found. Do you have the required permissions?” message. I did a refresh and found that the file is still there, so I don’t think the “Resource not found” part applies, and maybe it’s some sort of permission issue, though I’m already a Global Admin in Office 365 and I have administrative rights in Azure.

Best Regards,

Kevin

Hi Kevin,

Where did you get the Storage Key? I noticed that MS have changed the web page, it used to let you copy it like it’s been referenced on the blog…

http://reduktor.net/2015/06/importing-outlook-psts-into-office-365/

Cheers,

Dave

We are having the same issue. I’ve had a ticket open with MS for the past 3 weeks.

MS has stated that this is a bug and there currently is no way to download or delete the files.

Hi Nick,

Great update, thanks for the info!

Cheers,

Dave

Please let me know if anyone succeeds in getting past the “Resource not found. Do you have the required permissions?” message.

Thanks!

Hello,

I cannot find any details on Microsoft sites about the bug. I’m having the same errors.

For the time being I did overwrite the files with empty text files.

Marcel

Marcel, Thanks for the suggestion about overwriting with empty text files. I just did that and feel more comfortable. I’m getting the same error when trying to delete the files. This is a decent workaround.

You can try using Clumsy Leaf’s Cloud Xplorer. This has enabled me to delete the uploaded PSTs.

http://clumsyleaf.com/products/cloudxplorer

What’s not to like?

That you can’t delete, download or manage whatever you upload: a bug confirmed by MS, with no ETA for a fix.

Hi Dave,

Can you confirm that using New-MailboxImportRequest is still valid with O365? I have tried every combination but am still getting (404) Not Found. I have verified the case, as well as the path in Azure Storage Explorer. Any ideas?

Thanks

Hi Chabango,

I’ve just updated the blog, as Microsoft have made some changes since I originally wrote it. Hope this helps!

Cheers,

Dave

Microsoft had a ticket open with me and have commented that they officially do not support the New-MailboxImportRequest command in Office 365. This is what they told me specifically:

“This command is unequivocally not supported. If you are using the PST Import service to upload PSTs, you need to use the UI to manage the imports.”

It would have been easier if they just removed the command from the snap-in.

James