First published at https://nivleshc.wordpress.com

Introduction

What if I told you that you could get rid of most of your servers, yet still consume the services you rely on them for? No longer would you have to worry about keeping the servers up all the time, or about keeping them regularly patched and updated. Would you be interested?

To quote Werner Vogels: “No server is easier to manage than no server”.

In this blog, I will show you how you can potentially replace your secure FTP servers with Amazon Simple Storage Service (S3). Amazon S3 provides additional benefits, for instance lifecycle policies, which can be used to automatically move older files to a cheaper storage class and could potentially save you lots of money.

Architecture

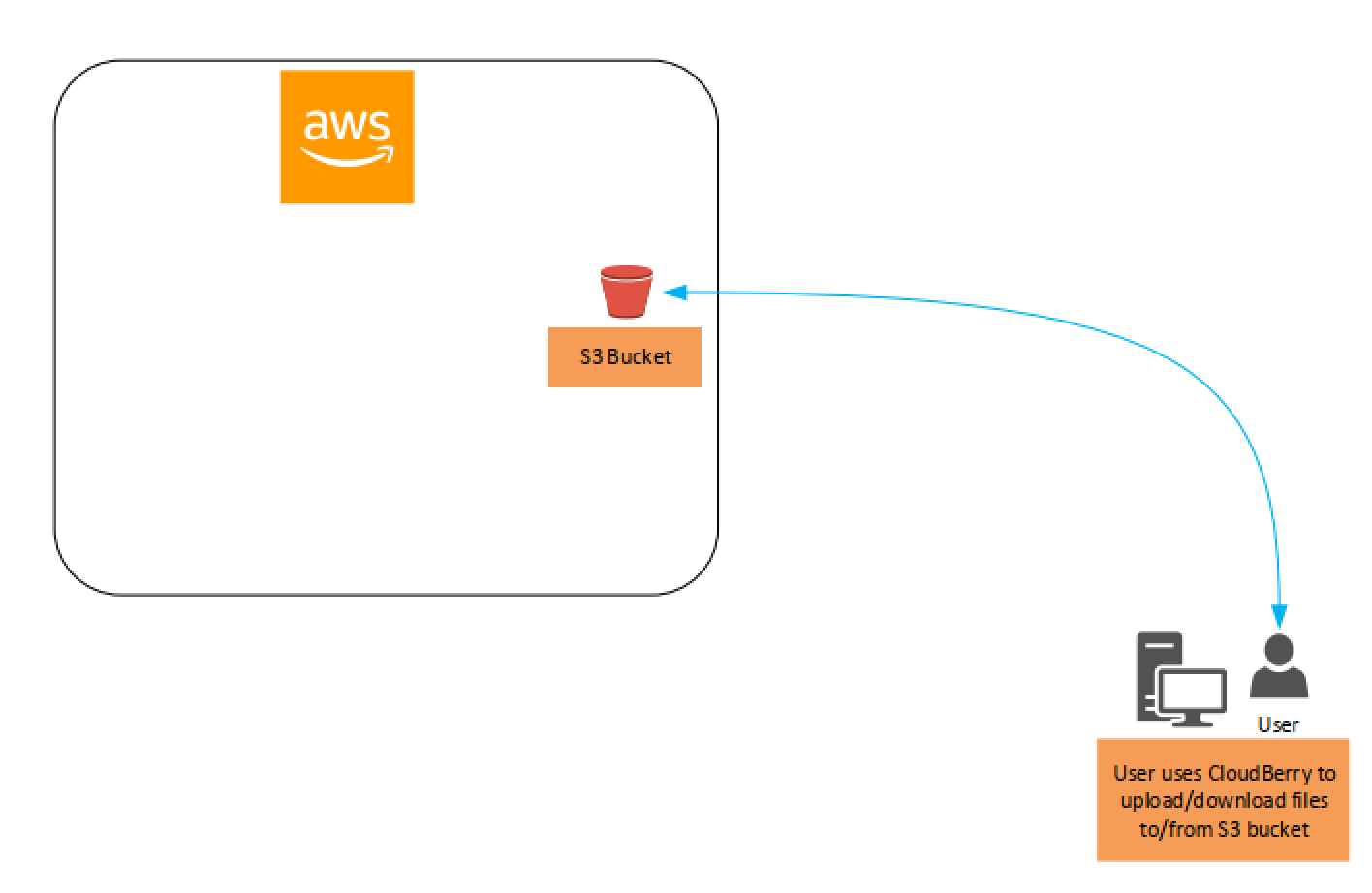

The solution is quite simple and is illustrated in the following diagram.

We will create an Amazon S3 bucket, which will be used to store files. This bucket will be private. We will then create a policy that allows our users to access the Amazon S3 bucket and to upload/download files. We will be using the free version of CloudBerry Explorer for Amazon S3 to transfer files to and from the bucket. CloudBerry Explorer is an awesome tool. Its interface is quite intuitive, and for those who have used a GUI secure FTP client, it will look very familiar.

With me so far? Perfect. Let the good times begin 😉

Let's first configure the AWS side of things and then we will move on to the client configuration.

AWS Configuration

In this section we will configure the AWS side of things.

- Login to your AWS Account

- Create a private Amazon S3 bucket (for the purpose of this blog, I have created an S3 bucket in the region US East (North Virginia) called secureftpfolder)

- Use the JSON below to create an AWS Identity and Access Management (IAM) policy called secureftp-policy. This policy will allow access to the newly created S3 bucket (change the Amazon S3 bucket arn in the JSON to your own Amazon S3 bucket’s arn)

      {
        "Version": "2012-10-17",
        "Statement": [
          {
            "Sid": "SecureFTPPolicyBucketAccess",
            "Effect": "Allow",
            "Action": "s3:ListBucket",
            "Resource": [
              "arn:aws:s3:::secureftpfolder"
            ]
          },
          {
            "Sid": "SecureFTPPolicyObjectAccess",
            "Effect": "Allow",
            "Action": "s3:*",
            "Resource": [
              "arn:aws:s3:::secureftpfolder/*"
            ]
          }
        ]
      }

- Create an AWS IAM group called secureftp-users and attach the policy created above (secureftp-policy) to it.

- Create AWS IAM Users with Programmatic access and add them to the AWS IAM group secureftp-users. Note down the access key and secret access key for the user accounts as these will have to be provided to the users.
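If you would rather generate the policy for your own bucket name than edit the ARNs by hand, something like the following could work. This is only a minimal Python sketch using the standard library; the function name `build_secureftp_policy` is my own (hypothetical), and the statements simply mirror the secureftp-policy JSON from the step above.

```python
import json


def build_secureftp_policy(bucket_name: str) -> dict:
    """Return an IAM policy document granting access to one S3 bucket.

    Hypothetical helper: it mirrors the secureftp-policy JSON from this post,
    substituting the bucket name into both resource ARNs.
    """
    bucket_arn = f"arn:aws:s3:::{bucket_name}"
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                # Listing is granted on the bucket itself.
                "Sid": "SecureFTPPolicyBucketAccess",
                "Effect": "Allow",
                "Action": "s3:ListBucket",
                "Resource": [bucket_arn],
            },
            {
                # Object-level actions are granted on the keys inside the bucket.
                "Sid": "SecureFTPPolicyObjectAccess",
                "Effect": "Allow",
                "Action": "s3:*",
                "Resource": [f"{bucket_arn}/*"],
            },
        ],
    }


# Build the policy for the example bucket used in this post and print it.
policy = build_secureftp_policy("secureftpfolder")
print(json.dumps(policy, indent=2))
```

The printed JSON can then be pasted into the policy editor when creating secureftp-policy.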

That's all that needs to be configured on the AWS side. Simple, isn't it? Now let's move on to the client configuration.

Client Configuration

In this section, we will configure CloudBerry Explorer on a computer, using one of the IAM users created above.

- On your computer, download CloudBerry Explorer for Amazon S3 from https://www.cloudberrylab.com/explorer/amazon-s3.aspx. Note down the access key that is provided during the download as this will be required when you install it.

- Open the downloaded file to install it, and choose the free version when you are provided a choice between the free version and the trial for the pro version.

- After installation has completed, open CloudBerry Explorer.

- Click on File from the top menu and then choose New Amazon S3 Account.

- Provide a meaningful name for the Display Name (you can set this to the username that will be used)

- Enter the Access key and Secret key for the user that was created for you in AWS.

- Ensure Use SSL is ticked and then click on Advanced and change the Primary region to the region where you created the Amazon S3 bucket.

- Click OK to close the Advanced screen and return to the previous screen.

- Click on Test Connection to verify that the entered settings are correct and that you can access the AWS Account using the access key and secret access key.

- Once the settings have been verified, return to the main screen for CloudBerry Explorer. The main screen is divided into two panes, left and right. For our purposes, we will use the left-hand side pane to pick files in our local computer and the right-hand side pane to correspond to the Amazon S3 bucket.

- In the right-hand side pane, click on Source and from the drop down, select the Display Name you gave the Amazon S3 account that you created earlier (via File and then New Amazon S3 Account).

- Next, in the right-hand side pane, click on the green icon that corresponds to External bucket. In the window that comes up, for Bucket or path to folder/subfolder enter the name of the Amazon S3 bucket you had created in AWS (I had created secureftpfolder) and then click OK.

- You will now be returned to the main screen, and the Amazon S3 bucket will be visible in the right-hand side pane. Double click on the Amazon S3 bucket name to open it. Voilà! You have successfully created a connection to the Amazon S3 bucket.

- To copy files/folders from your local computer to the Amazon S3 bucket, select the file/folder in the left-hand pane and then drag and drop it to the right-hand pane.

- To copy files/folders from the Amazon S3 bucket to your local computer, drag and drop the files/folders from the right-hand pane to the appropriate folder in the left-hand pane.

So, tell me honestly, was that easy or what?

Just to ensure I have covered all bases (for now), here are a few questions I would like to answer:

A. Is the transfer of files between the local computer and Amazon S3 bucket secure?

Yes, it is secure. With the Use SSL setting ticked in the CloudBerry Explorer account configuration, files are transferred to and from Amazon S3 over HTTPS, so they are encrypted in transit.

B. Can I protect subfolders within the Amazon S3 bucket, so that different users have different access to the subfolders?

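Yes, you can. You will have to modify the AWS IAM policy to do this, for example by limiting the Resource ARNs to a particular subfolder (prefix) for each user or group.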
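One way this could look is to give every user their own subfolder named after their IAM user name, using the IAM policy variable `${aws:username}`. The Python sketch below only builds such a policy document. The function name, the Sids and the choice of object actions (get, put, delete) are my assumptions for illustration, not part of the setup described above, so test it before relying on it.

```python
import json

# IAM policy variable that AWS replaces with the calling IAM user's name.
USERNAME_VAR = "${aws:username}"


def build_per_user_policy(bucket_name: str) -> dict:
    """Return a policy confining each user to <bucket>/<their username>/.

    Hypothetical helper; the action list is an assumption for illustration.
    """
    bucket_arn = f"arn:aws:s3:::{bucket_name}"
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                # Allow listing, but only for keys under the user's own prefix.
                "Sid": "ListOwnFolderOnly",
                "Effect": "Allow",
                "Action": "s3:ListBucket",
                "Resource": [bucket_arn],
                "Condition": {
                    "StringLike": {"s3:prefix": [USERNAME_VAR + "/*"]}
                },
            },
            {
                # Allow reading, writing and deleting objects under that prefix.
                "Sid": "ReadWriteOwnFolderOnly",
                "Effect": "Allow",
                "Action": ["s3:GetObject", "s3:PutObject", "s3:DeleteObject"],
                "Resource": [bucket_arn + "/" + USERNAME_VAR + "/*"],
            },
        ],
    }


per_user_policy = build_per_user_policy("secureftpfolder")
print(json.dumps(per_user_policy, indent=2))
```

With a policy like this, a user would most likely have to open the bucket at their own subfolder path (for example secureftpfolder/their-username) in the External bucket window, since listing the top level of the bucket is no longer allowed.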

C. Instead of a GUI client, can I access the Amazon S3 bucket via a script?

Yes, you can. You can download AWS tools to access the Amazon S3 bucket using the command line interface or PowerShell. AWS tools are available from https://aws.amazon.com/tools/
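As an illustration of scripted access, here is a minimal Python sketch that uses boto3, the AWS SDK for Python (a separate install, which I am assuming is available). The helper names `make_s3_client`, `upload`, `download` and `list_keys` are hypothetical, and the region, bucket and file names are just the examples from this blog. The access key and secret access key are expected to come from the usual places boto3 looks (environment variables or a credentials profile).

```python
def make_s3_client(region_name: str = "us-east-1"):
    """Create an S3 client. Requires boto3 (pip install boto3).

    Credentials are read from the environment or an AWS credentials profile.
    """
    import boto3  # imported lazily so the helpers below accept any client object

    return boto3.client("s3", region_name=region_name)


def upload(client, bucket: str, local_path: str, key: str) -> None:
    """Copy a local file to s3://bucket/key."""
    client.upload_file(local_path, bucket, key)


def download(client, bucket: str, key: str, local_path: str) -> None:
    """Copy s3://bucket/key to a local file."""
    client.download_file(bucket, key, local_path)


def list_keys(client, bucket: str, prefix: str = "") -> list:
    """Return object keys under a prefix (a single call returns at most 1000)."""
    response = client.list_objects_v2(Bucket=bucket, Prefix=prefix)
    # "Contents" is absent when nothing matches, hence the default.
    return [obj["Key"] for obj in response.get("Contents", [])]


# Example usage (commented out because it needs real credentials):
# s3 = make_s3_client("us-east-1")
# upload(s3, "secureftpfolder", "report.csv", "incoming/report.csv")
# print(list_keys(s3, "secureftpfolder", "incoming/"))
# download(s3, "secureftpfolder", "incoming/report.csv", "report-copy.csv")
```

boto3 talks to Amazon S3 over HTTPS by default, so the transfers stay encrypted in transit, just like with the GUI client.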

I hope the above comes in handy to anyone thinking of moving their secure FTP (or normal FTP) servers to a serverless architecture.
