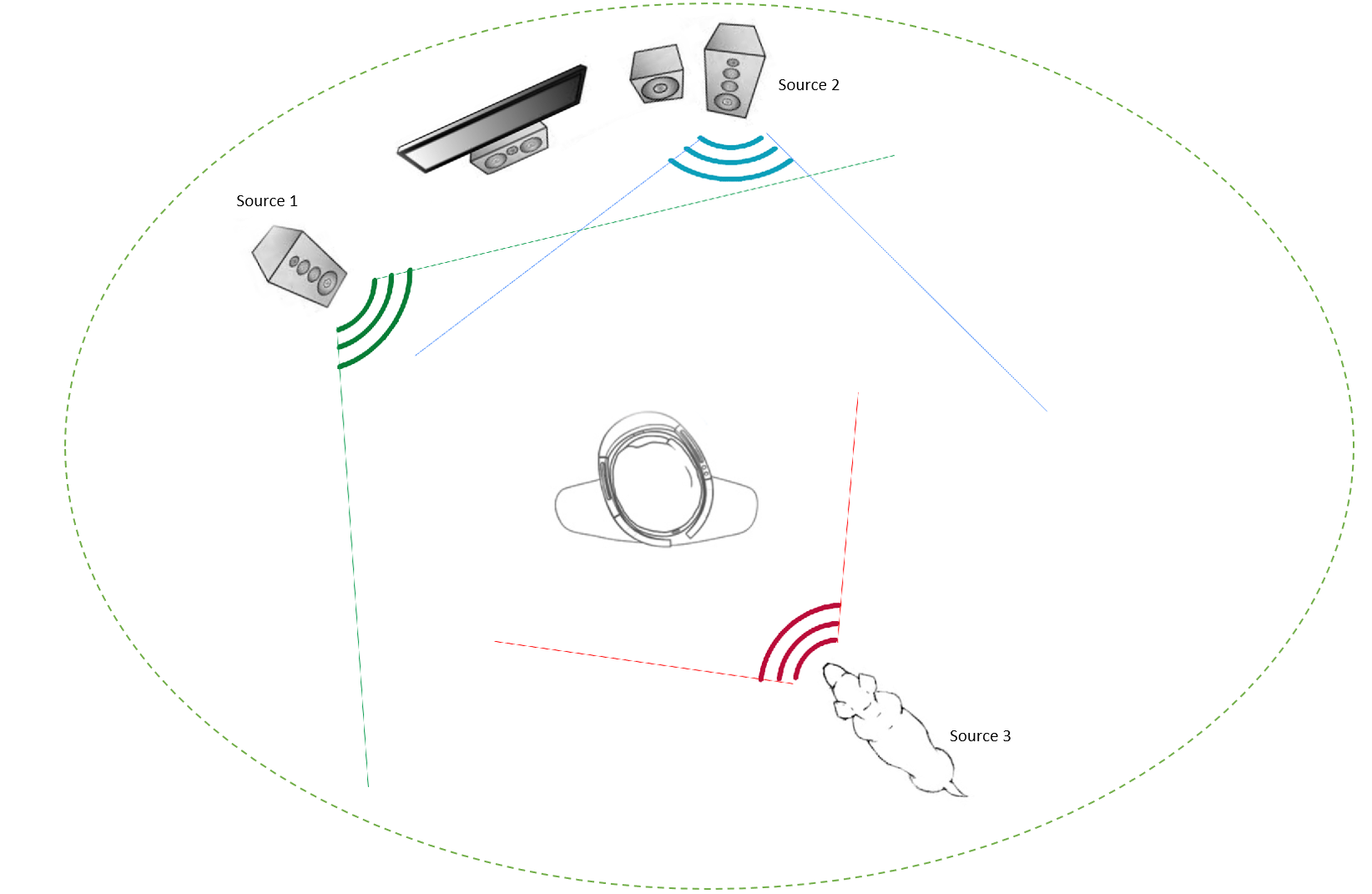

The world of Mixed Reality and Augmented Reality is only half real without three-dimensional sound to support the virtual world. Microsoft addresses this challenge with its audio engine's ability to generate Spatial Sound. As a feature, Spatial Sound simulates three-dimensional sound in a virtual world based on direction, distance, and other environmental factors. It is built on the concept of sound localization, a popular topic in sound engineering. Sound localization is the process of determining where a sound comes from. It takes into account the sound source, the sound field, the position of the listener, and the medium or environment through which the sound propagates. The diagram above illustrates a virtual world with Spatial Sound enabled.

The concepts of sound localization and Spatial Sound are not new. Spatial music has been practiced since biblical times in the form of antiphons, and its modern form was introduced in Germany in the early 1900s. Spatial Sound lets Mixed Reality developers place sound sources convincingly in the three-dimensional space around the user. Objects in the virtual world can act as sources for these sounds, creating an immersive experience for the user.
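Placing a sound source around the user starts with the geometry that the localization definition describes: where the source sits relative to the listener. The sketch below is a minimal illustration and relies on three assumptions. It uses Unity-style axes (x right, y up, z forward). It treats the listener as rotating only about the vertical axis. It computes the azimuth, elevation, and distance that a spatializer needs as input. The function `localize` and the `SourceDirection` type are hypothetical and are not part of any Microsoft or Unity API.

```python
import math
from typing import NamedTuple, Tuple

Vec3 = Tuple[float, float, float]


class SourceDirection(NamedTuple):
    azimuth_deg: float    # 0 = straight ahead, positive = to the listener's right
    elevation_deg: float  # positive = above the listener's ear level
    distance: float       # straight-line distance in scene units


def localize(listener_pos: Vec3, listener_yaw_deg: float, source_pos: Vec3) -> SourceDirection:
    """Describe where a sound source sits relative to a listener (hypothetical helper).

    Assumes Unity-style axes (x right, y up, z forward) and a listener that
    only rotates about the vertical (y) axis: yaw 0 faces +z, yaw 90 faces +x.
    """
    # Offset from the listener to the source in world space.
    dx, dy, dz = (s - l for s, l in zip(source_pos, listener_pos))
    # Project the horizontal offset onto the listener's right and forward vectors.
    yaw = math.radians(listener_yaw_deg)
    right = dx * math.cos(yaw) - dz * math.sin(yaw)
    forward = dx * math.sin(yaw) + dz * math.cos(yaw)
    azimuth = math.degrees(math.atan2(right, forward))
    elevation = math.degrees(math.atan2(dy, math.hypot(right, forward)))
    distance = math.sqrt(dx * dx + dy * dy + dz * dz)
    return SourceDirection(azimuth, elevation, distance)
```

For example, with a listener at the origin facing +z, `localize((0.0, 0.0, 0.0), 0.0, (2.0, 0.0, 0.0))` reports a source about 90 degrees to the right at a distance of 2. If the listener turns to face +x (yaw 90), the same source is straight ahead.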

Scenarios for Spatial Sound

In Mixed Reality, Spatial Sound can make many user scenarios more realistic. Here are a few of them:

- Anchoring – The ability to position a virtual object in the virtual world is critical for many Mixed Reality applications. When users turn away from an object and it leaves their viewport, the only way to give them a sense that the object still exists in the scene is to play localized sound from it.

- Guiding – Spatial Sound is very useful when users need to be guided and their attention drawn to a specific object or location in the three-dimensional world.

- Simulating physics – Sound plays an important role in emulating realistic physics in Mixed Reality. For example, a glass sphere dropping behind the user is best simulated by playing a localized shattering sound at the point where it hits the floor.

Implementing Spatial Sound in HoloLens applications

The audio engine in HoloLens uses HRTFs (Head Related Transfer Functions) to simulate sounds coming from various directions and distances within a virtual world. Head Related Transfer Functions describe the directivity patterns of the human ears and account for the direction, elevation, distance, and frequency of a sound. Unity offers built-in support for Microsoft's HRTF spatializer.
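A real HRTF is measured data and cannot be reproduced in a few lines. The sketch below shows one of the direction cues that HRTFs encode instead: the interaural time difference (ITD), which is the delay between a sound reaching the near ear and the far ear. It uses Woodworth's textbook spherical-head approximation, ITD = (a / c)(θ + sin θ). This approximation is swapped in purely for illustration and does not show how Microsoft's spatializer is implemented. The head radius of 8.75 cm and the 343 m/s speed of sound are assumed typical values.

```python
import math

SPEED_OF_SOUND_M_S = 343.0  # speed of sound in air at about 20 degrees C
HEAD_RADIUS_M = 0.0875      # assumed typical adult head radius


def interaural_time_difference(azimuth_deg: float,
                               head_radius_m: float = HEAD_RADIUS_M,
                               speed_of_sound_m_s: float = SPEED_OF_SOUND_M_S) -> float:
    """Woodworth's spherical-head ITD estimate, in seconds.

    azimuth_deg: 0 = straight ahead, +90 = right, -90 = left (horizontal plane,
    distant source). A positive result means the sound reaches the right ear first.
    """
    # Wrap the angle into [-180, 180).
    az = (azimuth_deg + 180.0) % 360.0 - 180.0
    # A sphere cannot tell front from back, so fold rear angles onto the front half.
    if az > 90.0:
        az = 180.0 - az
    elif az < -90.0:
        az = -180.0 - az
    theta = math.radians(abs(az))
    itd = (head_radius_m / speed_of_sound_m_s) * (theta + math.sin(theta))
    return math.copysign(itd, az)
```

Under these assumptions, a source directly to one side (90 degrees) gives an ITD of roughly 0.66 ms. The model gives identical values for 30 and 150 degrees, so a timing cue alone cannot separate front from back. The spectral filtering captured in a full HRTF is what resolves that ambiguity.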

Configuring the Audio Source

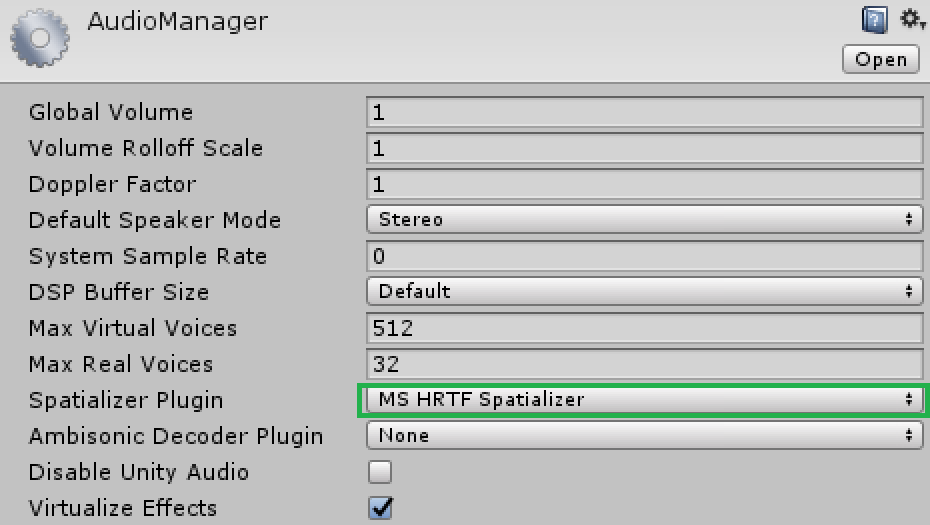

To enable the plug-in, open the Audio Manager in Unity (Edit->Project Settings->Audio) and select MS HRTF Spatializer as the Spatializer Plugin.

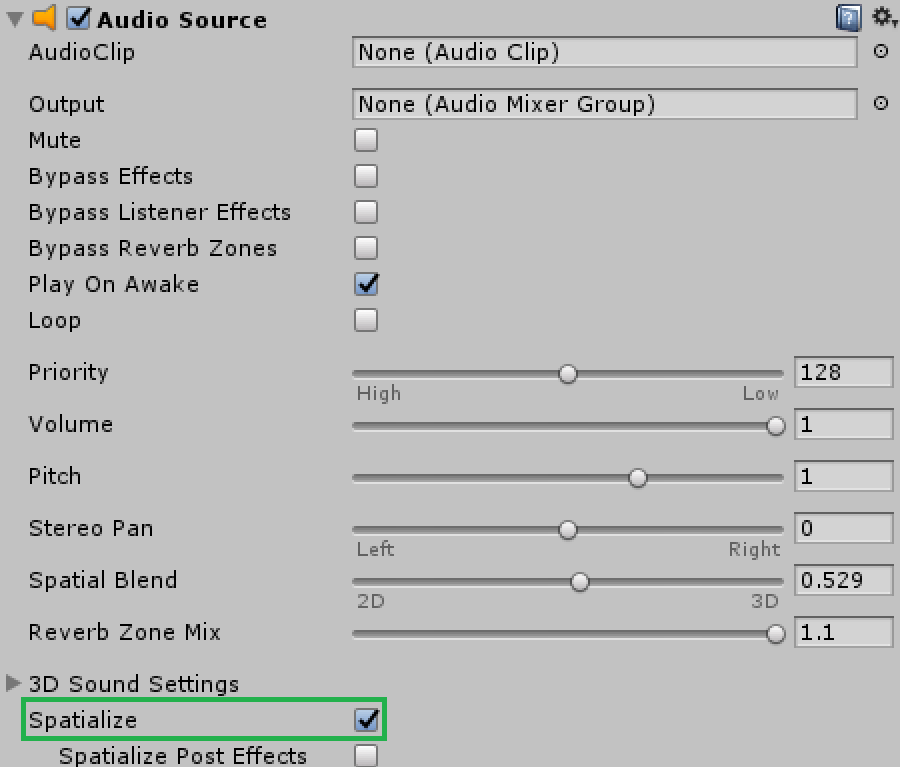

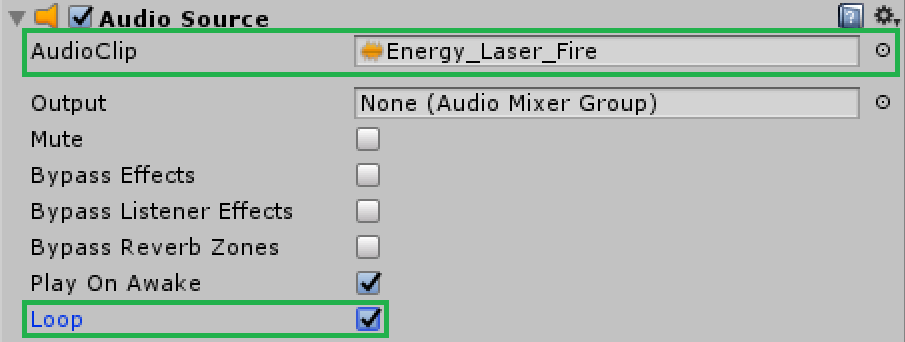

Once the setting is enabled, you can ‘Spatialize’ any audio source attached to a game object in Unity. To configure an audio source, perform the following steps:

- Select the audio source on the game object in the inspector panel

- Check the ‘Spatialize’ checkbox in the options for the audio source

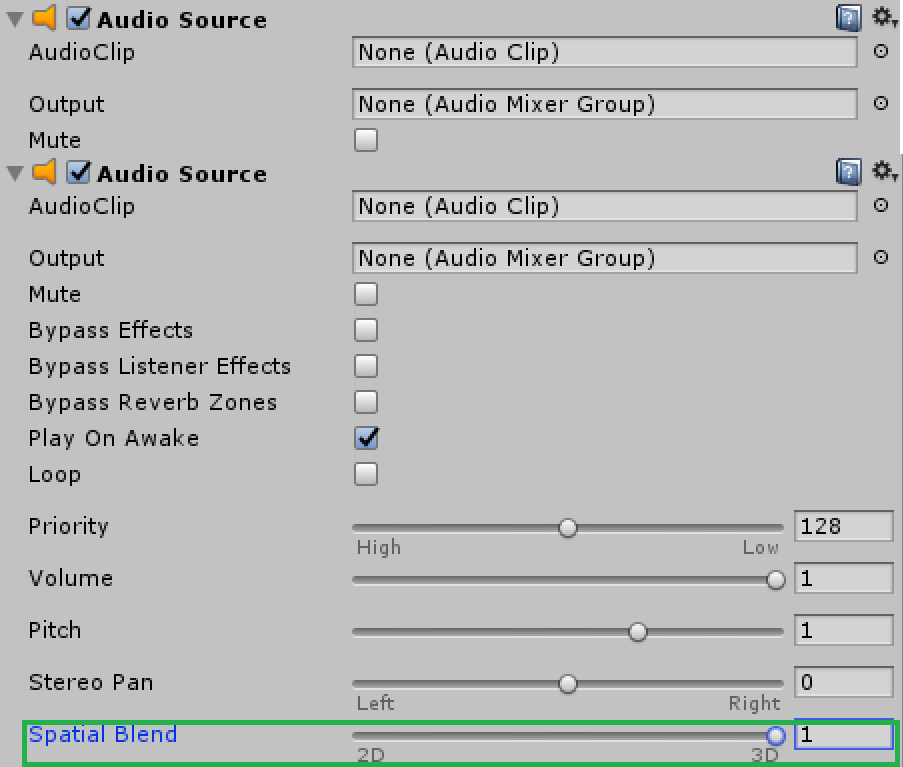

- For best results, set ‘Spatial Blend’ to 1 (fully 3D)

Your audio source is now configured to play Spatial Sound.
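The reason ‘Spatial Blend’ should be 1 can be shown with a simple model. At 0 the source is treated as plain 2D sound, and at 1 Unity applies its full 3D calculations, including distance attenuation. The sketch below is a conceptual model and not Unity's mixer. It assumes a linear mix between an unattenuated 2D gain and an inverse-distance 3D gain, where gain = min_distance / distance beyond min_distance. That curve is similar in shape to Unity's Logarithmic Rolloff, but treat the exact curve as an assumption. Both function names are hypothetical.

```python
def distance_gain(distance: float, min_distance: float = 1.0) -> float:
    """Inverse-distance attenuation: full volume up to min_distance, then min_distance / distance."""
    if min_distance <= 0.0:
        raise ValueError("min_distance must be positive")
    if distance <= min_distance:
        return 1.0
    return min_distance / distance


def blended_gain(distance: float, spatial_blend: float, min_distance: float = 1.0) -> float:
    """Linearly mix an unattenuated 2D gain with the 3D distance gain (conceptual model)."""
    if not 0.0 <= spatial_blend <= 1.0:
        raise ValueError("spatial_blend must be between 0 and 1")
    gain_2d = 1.0  # a 2D sound ignores the listener's distance
    gain_3d = distance_gain(distance, min_distance)
    return (1.0 - spatial_blend) * gain_2d + spatial_blend * gain_3d
```

In this model, a source 4 units away plays at a quarter of its volume with a blend of 1, but at full volume with a blend of 0. A partial blend such as 0.5 yields 0.625, which dilutes the distance cue the listener relies on.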

Testing Spatial Sound

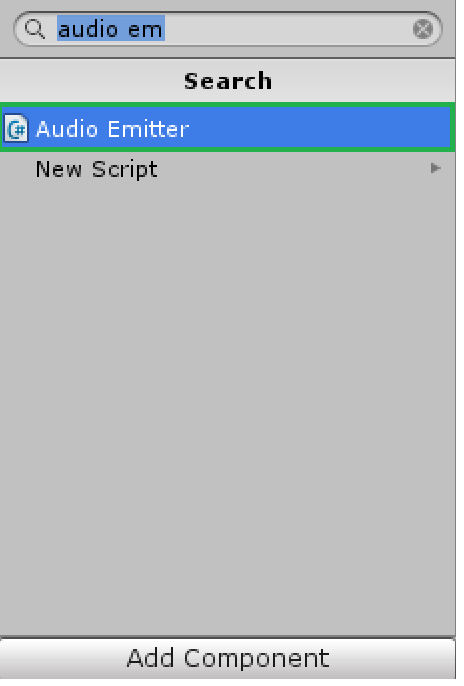

The best way to test Spatial Sound is with the ‘Audio Emitter’ component, which ships with the HoloToolkit. Perform the following steps to configure the Audio Emitter:

- Add the Audio Emitter component from the inspector panel

- Configure the Update Interval, Max Objects, and Max Distance on the Audio Emitter component. ‘Update Interval’ determines how often the Audio Emitter scans for environmental objects that influence the sound output, ‘Max Objects’ specifies the maximum number of influencing objects to consider, and ‘Max Distance’ specifies the maximum radius of the scan.

- Leave the ‘Outer Sphere’ parameter empty for now. This parameter is used to demonstrate audio occlusion.

- Assign an audio clip to your Audio Source and enable ‘Loop’

- Run the application and move around the object to hear Spatial Sound in action.
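The HoloToolkit source is not reproduced here. The sketch below is a hypothetical model of how the three scan settings might interact. The emitter rescans at most once per Update Interval, considers only objects within Max Distance, and keeps the closest Max Objects. The real component may find influencing objects differently, for example by raycasting toward the listener. `EmitterScanner` and `Influencer` are illustrative names, not HoloToolkit types.

```python
import math
from dataclasses import dataclass, field
from typing import List, Tuple

Vec3 = Tuple[float, float, float]


@dataclass
class Influencer:
    name: str
    position: Vec3


@dataclass
class EmitterScanner:
    """Hypothetical model of the Audio Emitter's scan settings (not the HoloToolkit code)."""
    position: Vec3
    update_interval: float  # seconds between scans
    max_objects: int        # cap on influencing objects kept per scan
    max_distance: float     # scan radius in scene units
    _last_scan: float = field(default=-math.inf, init=False)
    current: List[Influencer] = field(default_factory=list, init=False)

    def update(self, now: float, scene: List[Influencer]) -> bool:
        """Rescan if update_interval has elapsed since the last scan; return True when a scan ran."""
        if now - self._last_scan < self.update_interval:
            return False  # too soon: keep the previous results
        self._last_scan = now
        # Only objects inside the scan radius can influence the sound.
        in_range = [obj for obj in scene
                    if math.dist(self.position, obj.position) <= self.max_distance]
        # Prefer the nearest objects when more than max_objects are in range.
        in_range.sort(key=lambda obj: math.dist(self.position, obj.position))
        self.current = in_range[: self.max_objects]
        return True
```

A shorter interval reacts faster to moving objects but costs more work per second. A small Max Objects value bounds the work done on each scan.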

Measuring sound output

You will often want to measure the sound output produced by an Audio Source in order to represent it visually, for example when you need to display an audio histogram within your application.

To do this, you can measure the RMS (root mean square) output of an Audio Source and use that value to paint the histogram spectrum.
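In Unity, you can read a block of the samples currently being played with `AudioSource.GetOutputData(float[] samples, int channel)`. From that buffer, the RMS level is the square root of the mean of the squared samples. You can convert it to decibels with 20 * log10(rms / reference). The sketch below shows only that arithmetic, in Python. The reference level of 1.0 (full scale), the -160 dB floor for silence, and the `bar_level` helper for scaling histogram bars are assumptions for illustration, not part of Unity's API.

```python
import math
from typing import Sequence


def rms(samples: Sequence[float]) -> float:
    """Root mean square of a block of samples (e.g. values in [-1, 1])."""
    if not samples:
        return 0.0
    return math.sqrt(sum(s * s for s in samples) / len(samples))


def to_decibels(rms_value: float, reference: float = 1.0, floor_db: float = -160.0) -> float:
    """Convert an RMS level to decibels relative to `reference`, clamped at floor_db."""
    if rms_value <= 0.0:
        return floor_db  # log10(0) is undefined, so report silence as the floor
    return max(20.0 * math.log10(rms_value / reference), floor_db)


def bar_level(db: float, min_db: float = -60.0) -> float:
    """Map a decibel value onto 0..1 for drawing a histogram bar (hypothetical helper)."""
    return min(max((db - min_db) / -min_db, 0.0), 1.0)
```

As a sanity check, a full-scale sine wave has an RMS of about 0.707, or roughly -3 dB relative to full scale.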

Unity also provides a direct function, AudioSource.GetSpectrumData, to retrieve spectrum data from an audio source.
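`AudioSource.GetSpectrumData(float[] samples, int channel, FFTWindow window)` fills an array with spectrum magnitudes computed by an FFT. The array length must be a power of two between 64 and 8192. To show what those numbers represent, the sketch below computes a comparable magnitude spectrum in Python. It uses a naive DFT and a Hann window, similar in spirit to `FFTWindow.Hanning`, and then groups the bins into histogram bars. Unity's scaling may differ, so treat the magnitudes as relative. `histogram_bars` is a hypothetical helper.

```python
import cmath
import math
from typing import List, Sequence


def magnitude_spectrum(samples: Sequence[float]) -> List[float]:
    """Magnitudes of the first n/2 DFT bins of a Hann-windowed block (naive O(n^2) DFT)."""
    n = len(samples)
    if n < 2:
        raise ValueError("need at least two samples")
    # Hann window: tapers the block edges to reduce leakage between bins.
    windowed = [s * 0.5 * (1.0 - math.cos(2.0 * math.pi * i / (n - 1)))
                for i, s in enumerate(samples)]
    spectrum = []
    for k in range(n // 2):
        acc = sum(x * cmath.exp(-2j * math.pi * k * i / n) for i, x in enumerate(windowed))
        spectrum.append(abs(acc) / n)
    return spectrum


def histogram_bars(spectrum: Sequence[float], bar_count: int) -> List[float]:
    """Group adjacent bins into bar_count bars, keeping the loudest bin of each group.

    Any leftover bins at the top of the spectrum are ignored.
    """
    if not 0 < bar_count <= len(spectrum):
        raise ValueError("bar_count must be between 1 and len(spectrum)")
    size = len(spectrum) // bar_count
    return [max(spectrum[b * size:(b + 1) * size]) for b in range(bar_count)]
```

The naive DFT is only meant to illustrate the idea. In Unity, GetSpectrumData already performs the FFT for you, and only the grouping into bars is left to your code.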

To conclude, in this post we walked through the fundamentals of Spatial Sound, learned how to implement Spatial Sound in a HoloLens application, and saw how to measure sound output.