Hacking of commercial enterprises is never a good thing and in no way would I condone the dubious logic that was the basis behind the hack and release of the Ashley Madison database. There was no doubt a lot of personal and commercial damage caused.

But with every one of these hacks, there is opportunity. The hack has arguably done more for marriage fidelity than Fatal Attraction and has also given us a chance to relook at data security practices, personal data storage and password management practices. For me it’s a good chance to bust out one of this new tools in the BI suite Power BI on a good sized, real world dataset.

If you don’t want to run through the technical just scroll down to the results section below.

Power BI

Power BI comes in both a desktop and a hosted service model. I’m going to use the desktop model which is available here. It is a powerful BI tool which operates over PowerQuery, the data processing and transformation engine introduced in a previous blog. The tools are built for scale and so are not limited by those annoying little 1 million row limits of Excel. That’s a good thing because there is a lot of data in the Ashley Madison dataset.

Preparing the Data

The data can be downloaded from a number of places. I chose a torrent file sharing link with a set of zipped up files with an encryption key that matches the one that the hackers published to avoid tampering. The data comes as number of .dump files which are the tables from a mySql database, a set of .csv transaction files and a collection of other internal files. All up about 10GB of zipped up data to be explored.

The .dump files are mySQL dumps. These are a CREATE TABLE statement followed by lots and lots of INSERT statements. That’s great if you want to reconstruct the database (and if your prepared to wait a really long time to insert them all, but we don’t really need all the complexity of a relational database, flat files are just what we need. So I wrote a script to parse the INSERT statements and turn it back into a flat CSV file (with a little bit of finesse to get the right quote style for PowerQuery)

The daily transaction CSV folder is much easier, just trim off the titles and concatenate the contents into one great big CSV file. I’ve written a small c# script (attached) to concatenate the data and do a bit of cleaning along the way (it looks like the hackers, in their haste didn’t flush some files properly so there are a few truncated lines). Once the data is together it makes one great big 2.5GB AllTransactions.csv file with 10 million transactions!

The code is available here

Now, we get our first glimpse at the magnitude of the data available.

| File | Type | Size (GB) | Rows | Columns | Contents |

| amino_member.dump | mySQL dump | 12.7 | 36,399,119 | 61 | All member details |

| am_am_member.dump | mySQL dump | 9.5 | 31,971,589 | 48 | Some member details |

| member_details.dump | mySQL dump | 2.3 | 36,416,003 | 12 | Member details summary table |

| aminno_member_email.dump | mySQL dump | 1.7 | 36,397,897 | 8 | Member emails and notification they have signed up for |

| member_login.dump | mySQL dump | 4.2 | 36,400,530 | 5 | Every account, username and password hash |

| Transactions folder | Daily CSV | 2.4 | 9,692,318 | 19 | Every credit card transaction, who made it and where from (partial credit card details) |

Transactions

There has been a lot of discussion online about the actual validity of the database since it appears there is some irregularity about the makeup of population of users. There is a heavy bias of males to females (which might be expected) and the females appear to not be distributed properly, suggesting that he female population was actually manufactured by paid employees. To avoid that discussion, I’m going to look at the one part of the dataset that doesn’t lie. Money! The Ashley Madison dataset includes every credit card transaction since inception in a daily csv file.

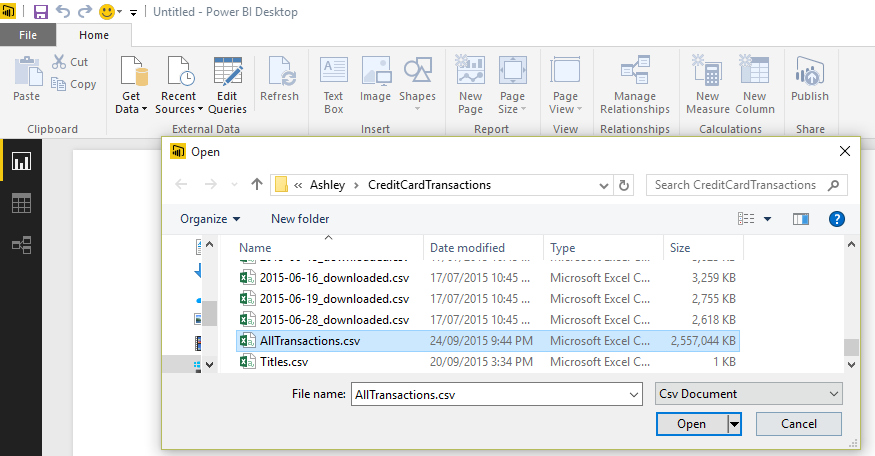

Start the Power BI application and launch the GetData wizard. This has a large number of different data source options but we are going with plain old .csv to load the AllTransactions.csv file.

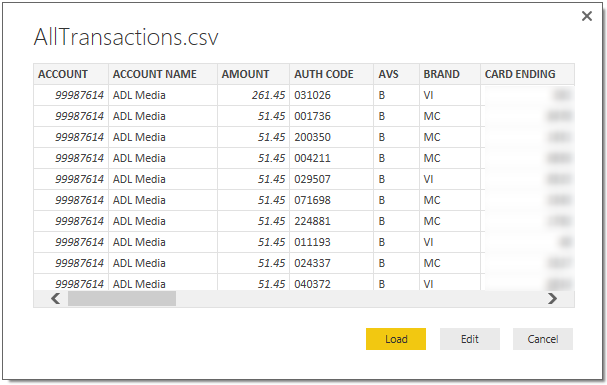

This starts the PowerQuery editor and gives the first look at the data. There’s plenty of juicy information in here but gladly the full credit card number is truncated!

So now is the opportunity to pre-process the data for analysis. Choose Edit and then in the PowerQuery editor use the Choose Columns button. First we’ll pick the interesting columns for analysis.

Since we are in development mode, and the data set is so large, it’s worth trimming the data set to keep it manageable. Use the “Keep Rows button” to keep just 100,000 rows then make a few changes.

PowerQuery is great for importing data from many sources and dealing with large data sets. The code development experience is pretty ordinary so I recommend using PowerQuery to trim up and filter out unnecessary data to make it as small as possible and leave the complex stuff for a later (see DAX below). Trim up the data like this:

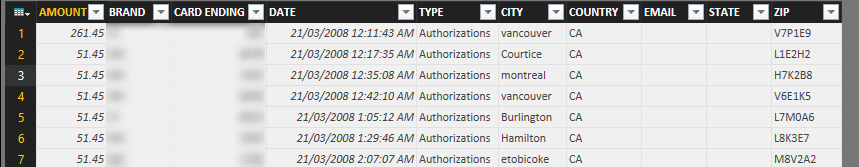

- Date column is actually a DateTime so rename it DateTime. Then use the Date button to add a “DateOnly” column, and a “Day”, “Month” and “Year” column.

- There are a number of failed attempts to charge against a credit card, these are identified by a not null value in the ERROR_CODE column. So trim out those rows and drop the column

-

The credit card transactions come in 4 types:

Authorizations – Submit credit card details to take up the free membership offer (Ignore these ones)

Settlements – Commit previous Authorization (Add these ones)

Purchases – Buy/Renew a membership outright (Add these ones)

Credits and Chargebacks – The wife found out, so quickly back out! (Subtract these ones) - Use the “Add Custom Column” to add a new column called “Value” which represents the actual value of the transaction using an if statement to add just the Settlements and Purchases and subtracting the refunds.

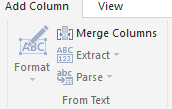

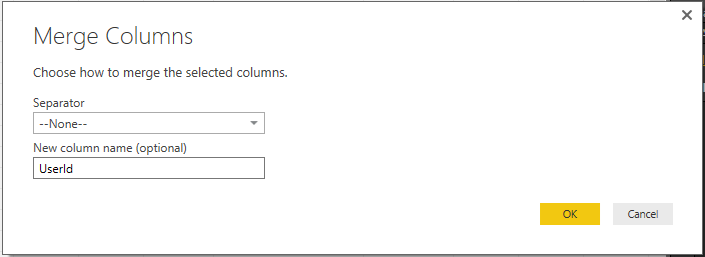

- Add a UserId column that will help identifying unique users. There’s plenty of personally identifiable information which can be combined to form an effective “UserId”. I have used these FIRST NAME, CARD ENDING and ZIP columns which will give a reasonable approximation for a unique User Id so choose “No Separator” and name the column UserId. Select the columns and hit the Merge Column button.

- Finally remove all unused columns including all that nasty personal and credit card information from the data set so we can safely publish.

The Advanced Query button shows the Power Query code that was generated by the import wizard and transformation steps

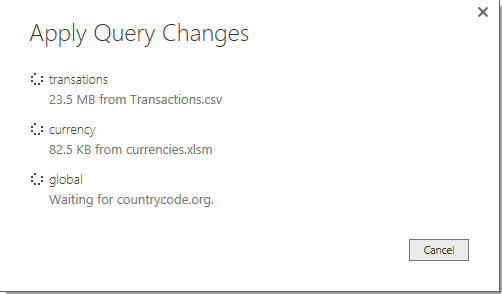

Now “Close and Load” the data into Power BI.

Currency

One of the nice challenges in the data set is the Amount column is recorded in the native currency of the credit card. So if we want to do any reporting across different countries then that number needs to be normalised to a single currency such as the $US. This is easy if we use the Excel spreadsheet created in my previous blog here Use Excel, PowerQuery and Yahoo Finance to manage your Portfolio. Download the Excel workbook from that blog and open it.

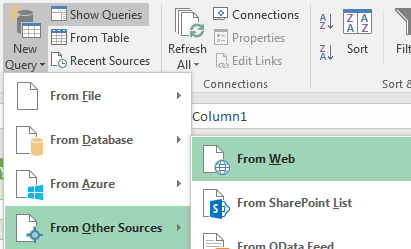

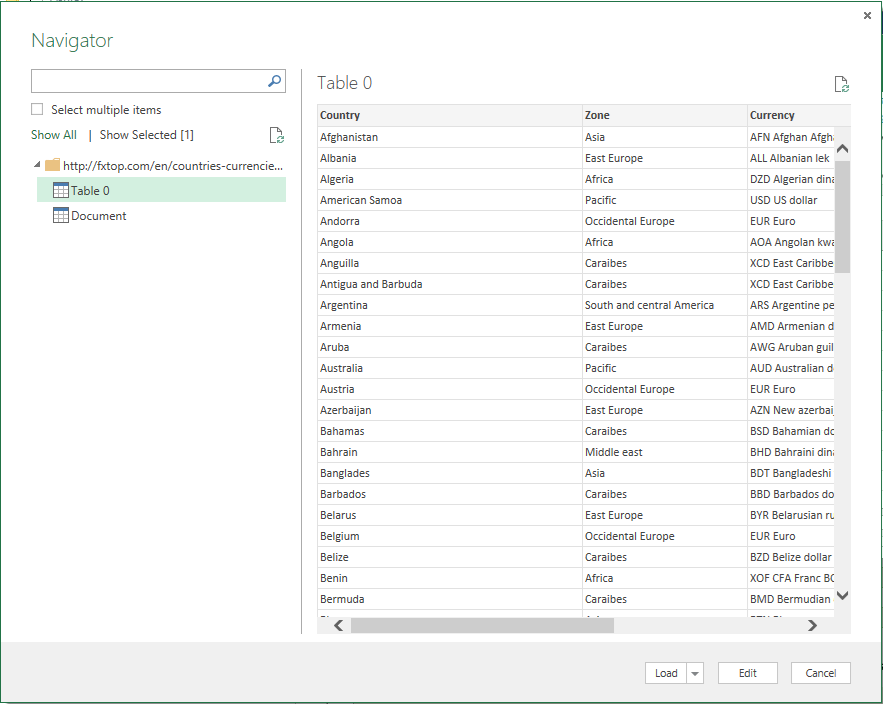

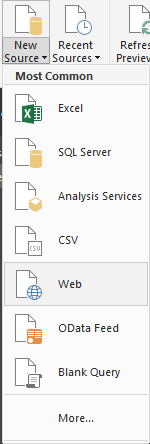

First we need a data source that links CountryCode to CurrencyCode, theres plenty available. I chose this one http://fxtop.com/en/countries-currencies.php . One of the really powerful features of PowerQuery is the ability to directly parse a web page into tables which can be imported into a PowerQuery table using the “From Web” data source.

Open the http://fxtop.com/en/countries-currencies.php page.

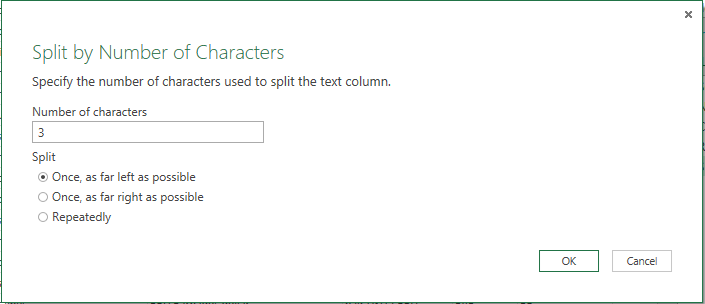

Now using PowerQuery tool bar rename the Country column to CountryCode and Split the Currency column by the first 3 characters

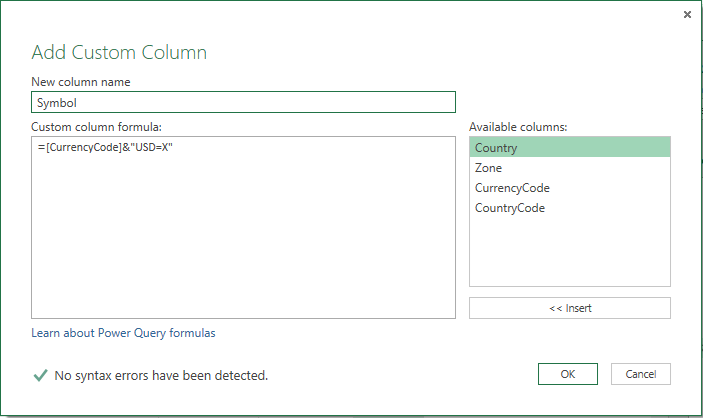

Drop all the other columns we don’t need and finally, to retrieve the Rate from yahoo you need the currency in the form of AFNUSD=X so create a new Custom Column called Symbol.

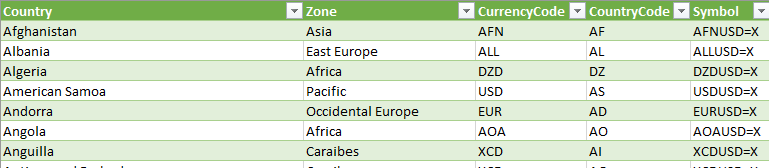

Which generates this table

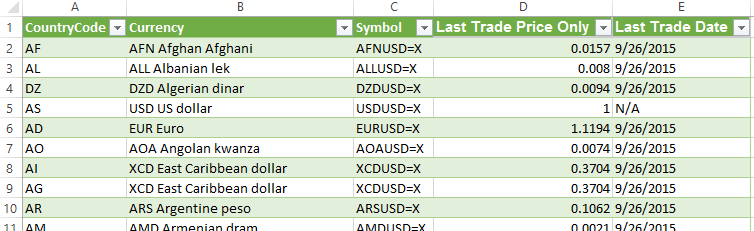

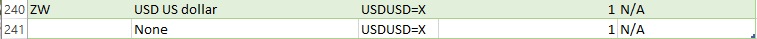

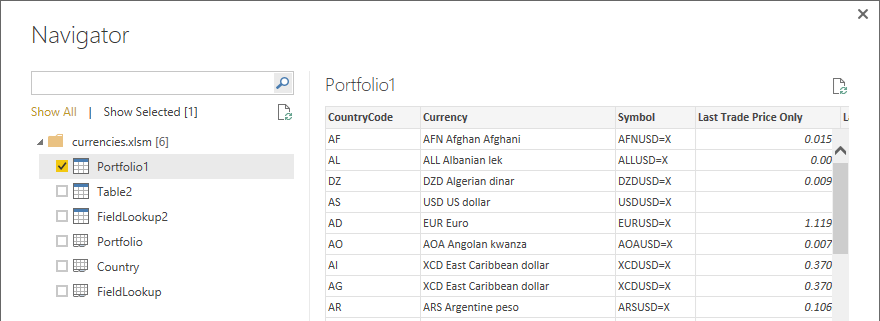

Paste the Symbols into the Symbol column of the Portfolio tab then hit Refresh Data and you’ll end up with a table like this with the latest currency rates populated from Yahoo Finance in the “Last Trade Price Only” column.

Add one extra row in the table to handle those records with no country set and assume they are US dollars

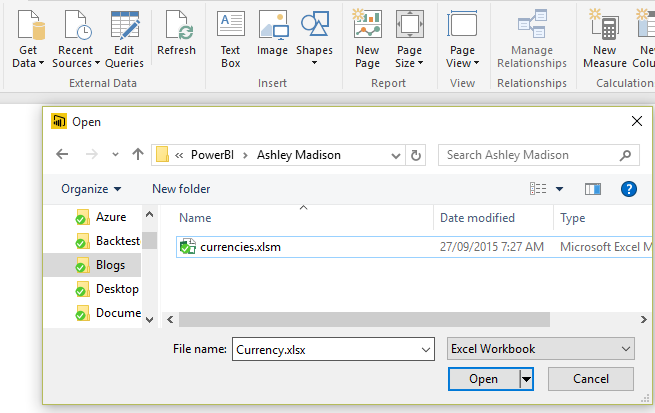

Save that as currencies.xlsm and then back to Power Query to use the Get Data -> Excel to import it

And import the Portfolio table from the Excel workbook.

Remove the columns other than CountryCode and Rate.

Now we will be using this table to do a significant large join to bring currency rate data onto the transaction table. To do this efficiently we can help the join performance by providing a primary key on the table. There is no GUI wizard feature to do this so open the Advanced Editor and add this line to set a Primary key on the CountryCode column to be used in the join engine.

#"Indexed Table" = Table.AddKey( #"Renamed Columns", {"CountryCode"}, true)

Here’s the currency query:

Global Data

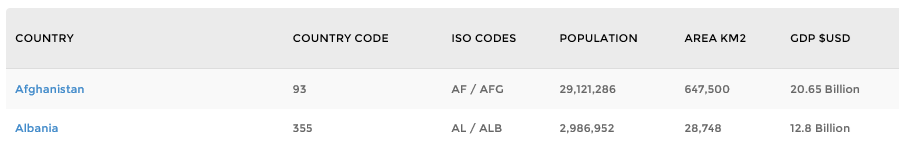

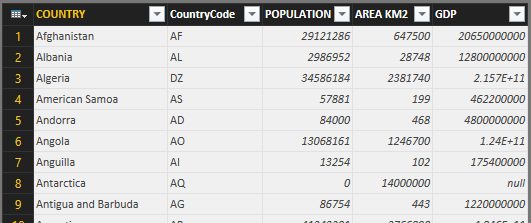

Given that the site was a global phenomenon, we want to get some global perspective on the data so let’s upload some global reference data from here https://countrycode.org/ which has some useful country stats.

Import the page using New Source->Web feature in Power Query.

Use the PowerQuery GUI to

- Rename the GDP column to and Split it on the first space ‘ ‘

-

The create a new Multiplier column by parsing the Million and Billion words into numbers using an if statement

#”Added Custom” = Table.AddColumn(#”Changed Type1″, “Multipler”, each

if [GDP.2]=”Million” then 1000000 else

if [GDP.2]=”Billion” then 1000000000 else

if [GDP.2]=”Trillion” then 1000000000000 else 1), - Split the ISO CODES column by the first ‘/’ and rename the first column CountryCode

- Remove all the unnecessary columns

- And add an Index on CountryCode for the join performance

The table should look like this

Here’s the generated global query:

https://gist.github.com/petermreid/23e64049b0aa270a1240

Use Close and Apply to run all the queries

Relationships

At this point the data sets are all completely independent. On the left hand side of the Power BI Desktop is a button to switch to Relationship mode.

Power BI will do a reasonable job of guessing the relationships but may need a little help. If not already there, create a one-to-many relationship with the transaction table by CountryCode by dragging and dropping the CountryCode column from the “many” fact table to the “one” dimension tables. (The use of the multidimensional terms used in SQL Server Analysis Services is no mistake as we will see later)

Data Typing

Now switch into Report mode

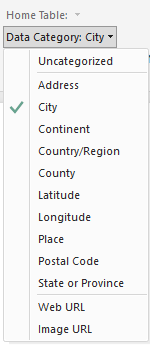

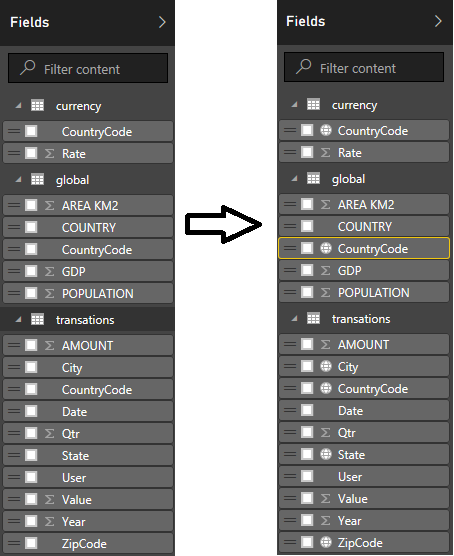

There’s a bit of additional data type coercion that needs to happen to help Power BI make the right choices when presenting the data. For each of the geographical entities use the Data Category menu to assign the correct type of geographical data.

This will put a small globe icon next to each and will be useful in the mapping process.

Do the same for the currency fields setting to Data Type currency.

DAX

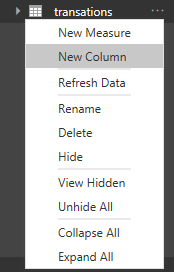

Now it’s time to generate some new columns of data that join across related tables. This is the first opportunity to use DAX (or Data Analysis eXpressions) which is a real pleasure. This is the query language under PowerPivot and DAX has great debugging, helpful error handling and intellisense. On the transactions table use New Column

to add the following columns

Quarter = "Q" & transactions[Qtr] -- the year quarter stringValue US = transactions[Value]*RELATED('currency'[Rate]) -- the value in $US

(Note the use of the ‘RELATED’ keyword to traverse the join relationship to the currency table)

Value US per Capita(M) = IFERROR(transactions[Value US]/RELATED('global'[POPULATION])*1000000,0) -- the value in $US per million people(Note how the variables don’t have to follow normal syntax rule, spaces and punctuation are fine)

Value US per GDP(M) = IFERROR(transactions[Value US]/RELATED('global'[GDP])*1000000,0) --the value in $US per million GDP

Report

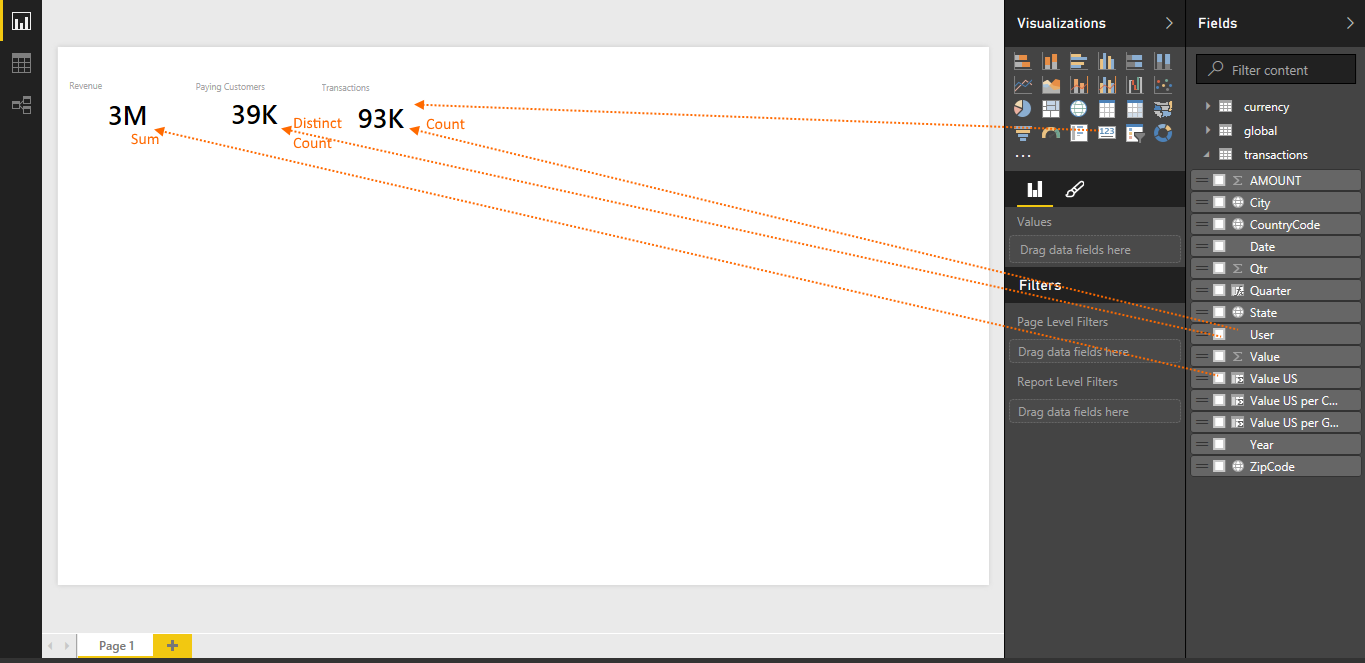

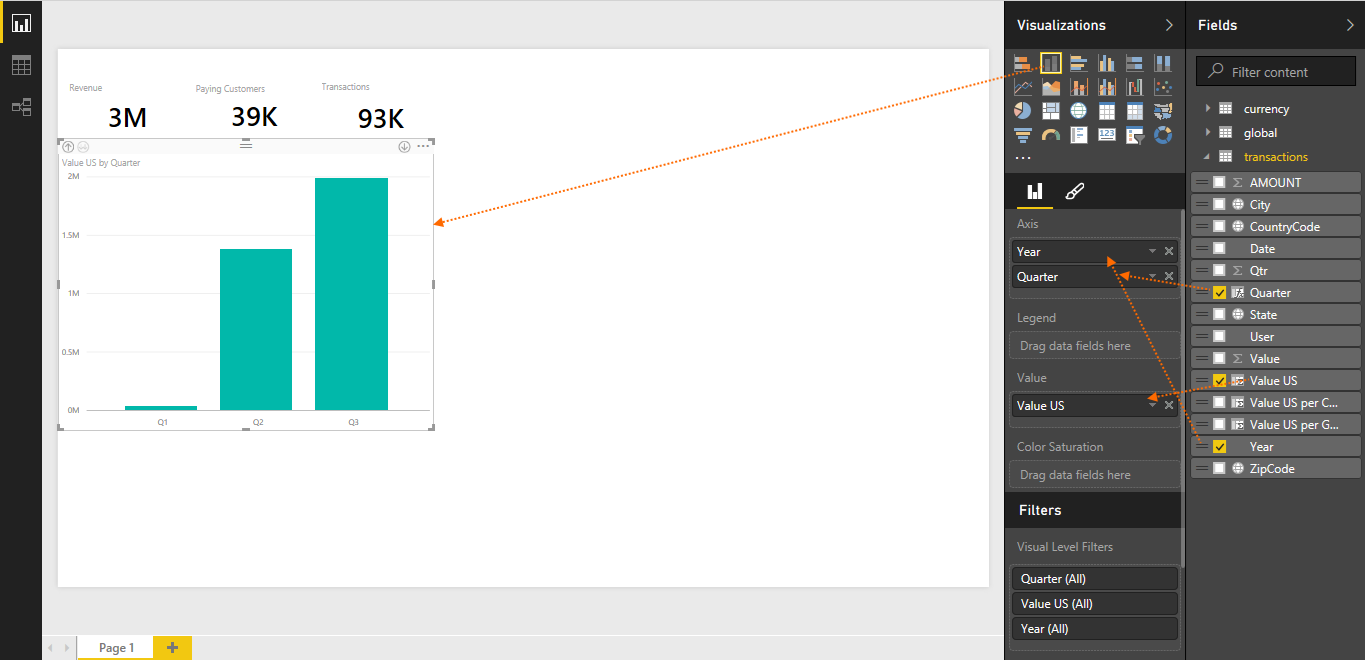

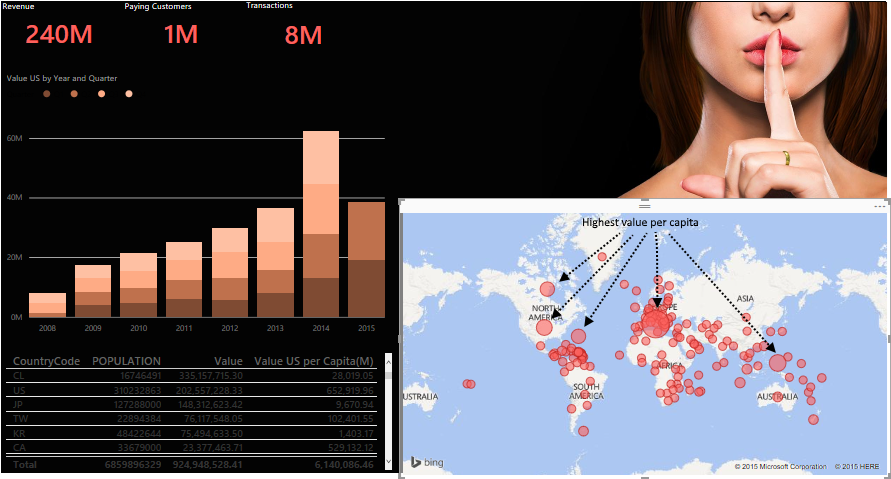

Now the fun part, build a report! I did this

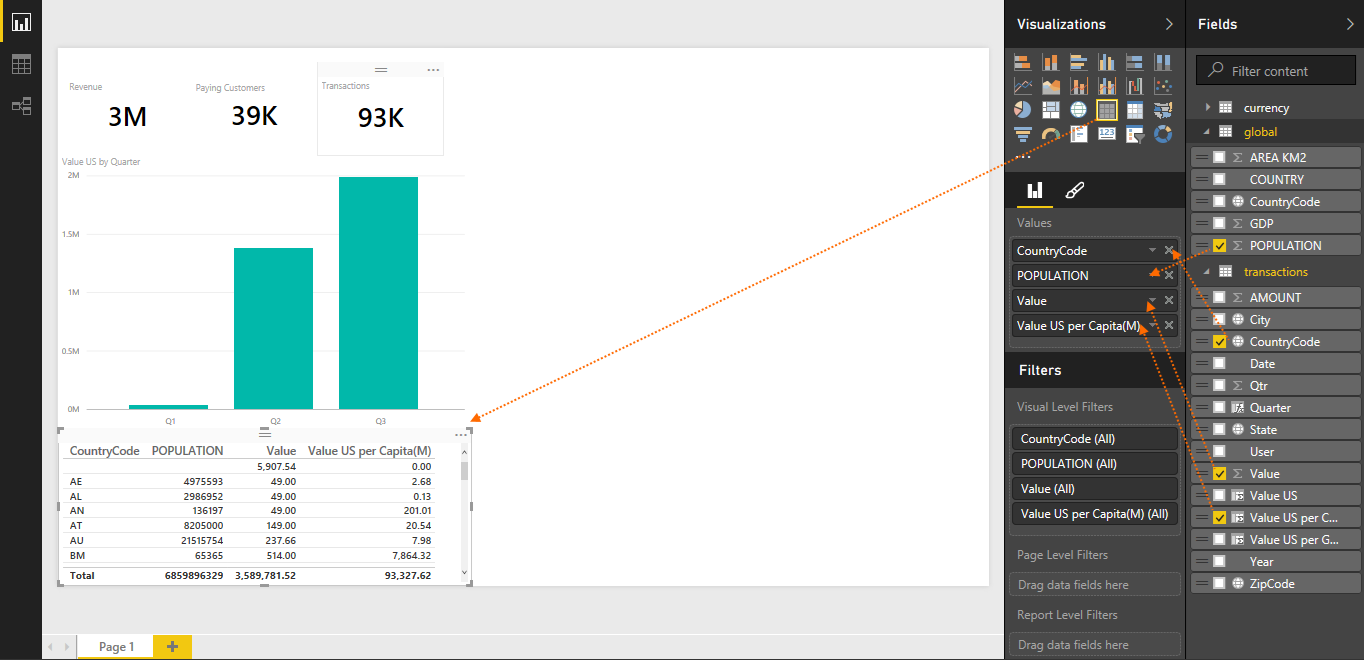

And this

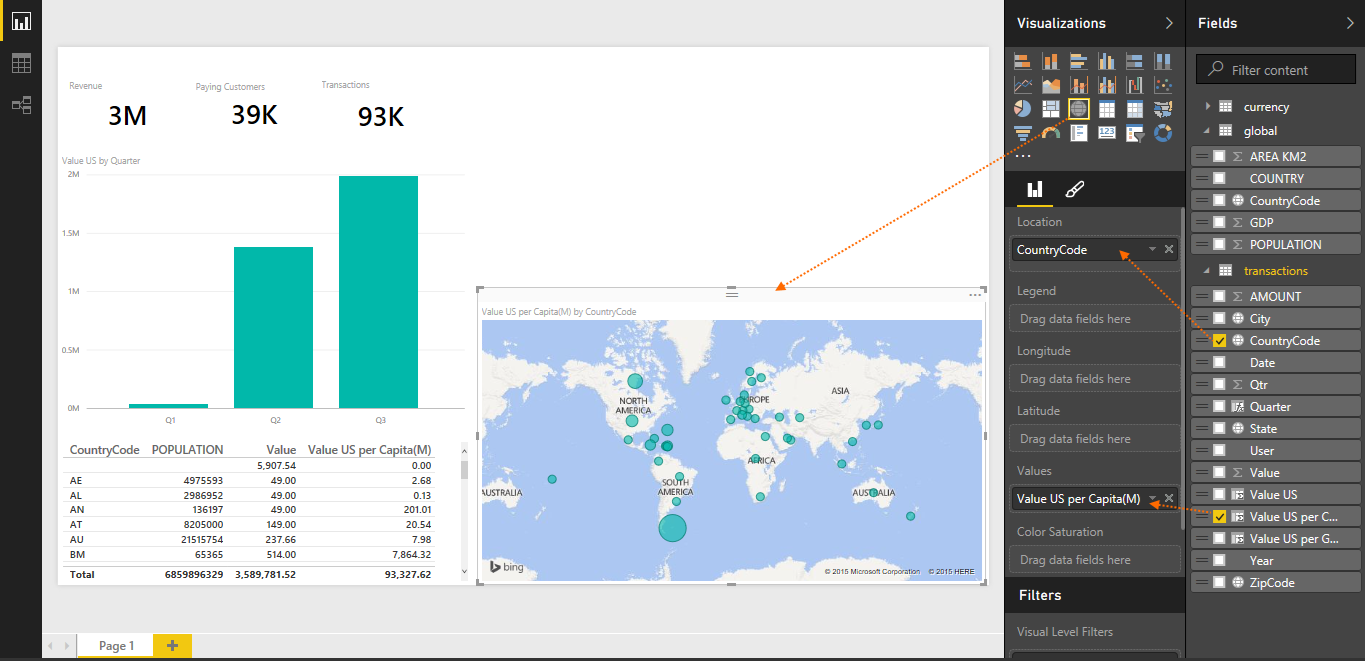

And this

And this

Now we can start to really see the data. Don’t forget the transactions query is limited to 100,000 rows which is not even all of the first year of operation. Ashley Madison, by any measure was already a success having brought in $US3 million in revenue, with 39,000 paying customers!

Scaling Up

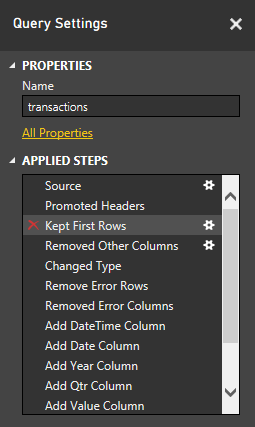

Once happy with the result, open up the transactions query

and remove the Kept First Rows step so the full data set is processed.

Now Close and Load the Power Query editor and go make a cup of tea. The full data set is over 2GB and 10 million rows so it’s going to take a while. My i7 8GB SSD laptop takes 20 minutes to process the data.

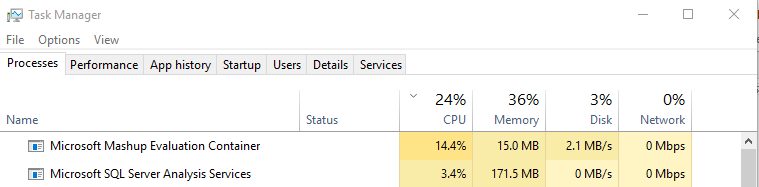

While that is working take a look at the Task Manager. The Microsoft Mashup Evaluation container is Power Query engine doing its work. But interestingly under the Power BI hood is SQL Server Analysis Services! What we are doing while using the Relationship editor and the Report builder is creating a “cube” which is then embedded in the Power BI .pbix file.

Results

Now with all the data we see the real success story that is Ashley Madison

- $US240M in total revenue and on target to hit $US100M in 2015 alone (the hack only captured up to Q2 2015)

- 1M paying customers and climbing rapidly

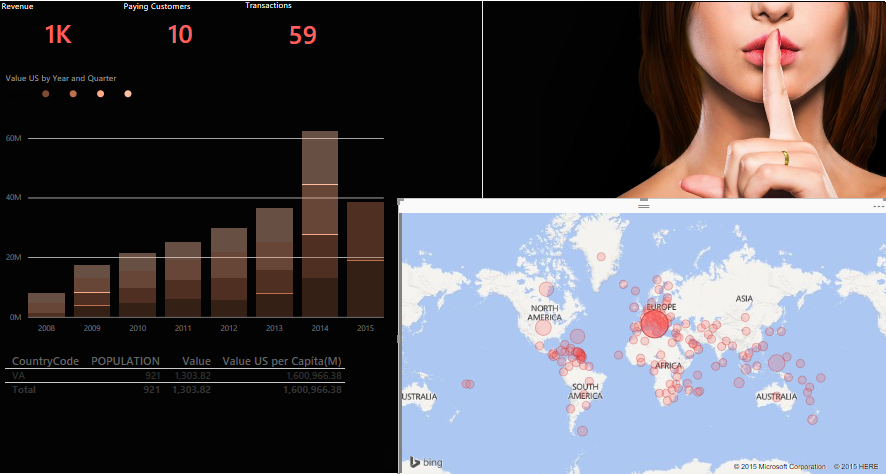

So the burning question which country spent the most on cheating per capita? It’s as simple as clicking on the largest red circle or clicking on (sorting by) Value US per Capita in the table.

The Vatican! It has 10 paying customers with 59 separate transactions totalling US$1303. We can even see that the initial transactions occurred in 2009, then a period of inactivity and then a return to paid up member status in 2013, 14, and 15. It’s a little bit unfair to label the Vatican since the population is so small it could be a statistical error, but given 1% of residents were paid up members, there’s certainly something interesting there. The other interesting cases are Palau, Falkland Islands and Bermuda.

Publishing

Publishing a report is as easy as pressing this button

The report is publised to here. It isn’t a static set of graphs and figures, it is the full cube which means all of the richness of the Power BI Desktop is available including filtering and drill downs on each of the report elements. Clicking on a circle in the map filters all other elements on the page with that category.

However, the published report is only available to licensed users.

Summary

This is some very powerful tooling at the early stages of development. Power Query needs some work but DAX is great so use Power Query to reduce the data down and use DAX to do the more complex stuff.

The Power BI Desktop Report Builder is primitive but getting better and there is a release every every month. It’s lacking some of the things you’d expect like colour control and font size control on text, z-order control on report elements and snap to grid layout control, theres some room for improvement before the tool is ready to take on the big players.

But thats just the thing Power BI isn’t a multi million dollar BI platform, its a desktop editing and SaaS publishing platform for BI, much like the way I use Word to create this blog which is then published to the Internet. Its another example of cloud computing at its best. A very capable tool once only available to enterprises put in the hands of everyone. This is a disruption for over priced software vendors and consultancies in the business intelligence space.

If you want to have a go at doing some more data analysis I’ve saved the anonymised Power BI files (with no personal information in it) here. The ‘transactions’ data set is the one used in this blog, the ‘members’ dataset has a much richer set of demographics information including sex, preferences, height, weight, dob, date joined etc. I’ll leave it to the reader (or the next blog) to find some interesting insights in there.

Great work Peter. Great way to show the rich feature set of Power BI and using an interesting dataset 😉

Thanks for sharing this Peter. I had requested one feature from the Power BI team to allow embedding Visuals into custom applications and received an update from their end about the Tiles API

http://blogs.msdn.com/b/powerbidev/archive/2015/09/23/power-bi-api-updates-roundup.aspx

This makes Power BI a great choice

Also, the fact that you can build custom visualizations and share with others multiplies the power of power bi 🙂

Yes its a very response product team. Ill be adding some things to their todo list

Nice article! I would like to add that if it wasn’t done for the demo purposes I would upload the data to sql server. Power BI has SQL Server adapter and can translate M Queries to t-sql and potentially could use the newly introduced “Direct Queries” (preview). Unfortunately, not all query syntax can be translated so you have to be “careful”. In any case, in sql server you could add indices or additional views to smooth the data querying experience.