Building smart applications that work in three-dimensional space involves many challenges. Top of the list is understanding and mapping the surrounding 3D world. Applications usually depend on the capabilities of the device and platform to solve this problem, and Augmented Reality and Mixed Reality devices ship with built-in technologies to measure the depth of the world around them.

Scenarios of interest

Mapping the world around a device is critical to enabling powerful scenarios in this field. The following are a few such use cases:

- Docking/Placement – What makes Mixed Reality different from Augmented Reality is its ability to enable interaction between virtual and physical objects. To make a Mixed Reality scenario realistic, the application must understand the layout of the environment in which the user is operating, so that it can place or dock holograms within the physical bounds of that environment. For example, if the application needs to place a chair in a room, it must position the chair on the floor with enough space for all four legs to land.

- Navigation – Objects in the holographic world should obey the rules of the physical world for the application to look real. For example, a holographic puppy should be able to walk on the floor or jump onto the couch, but not walk through walls and furniture. To enable this, the application must know the depth of each object around the user at any given point in time.

- Physics – In the real world, the behaviour of an object in motion is strongly influenced by factors such as inertia, density, elasticity and so on. For holograms to behave similarly in Mixed Reality, the application needs to be aware of the environment. For example, a ball dropped from the ceiling behaves differently when it lands on the floor than when it lands on a couch.
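The Docking/Placement scenario can be made concrete with a small geometric check. The following is a minimal sketch, assuming the spatial mesh has already been exported as a plain list of world-space triangles with y pointing up; in a Unity application you would normally rely on the Spatial Mapping Collider and the engine's own raycasts instead. The functions `can_place`, `surface_height_below` and `chair_legs`, and the floor and couch geometry, are hypothetical. The sketch casts a ray straight down from each chair leg using the standard Möller-Trumbore ray-triangle test and accepts the placement only if every leg lands on a surface and those surfaces are level.

```python
from typing import Optional, Sequence, Tuple

Vec3 = Tuple[float, float, float]
Triangle = Tuple[Vec3, Vec3, Vec3]


def _sub(a: Vec3, b: Vec3) -> Vec3:
    return (a[0] - b[0], a[1] - b[1], a[2] - b[2])


def _dot(a: Vec3, b: Vec3) -> float:
    return a[0] * b[0] + a[1] * b[1] + a[2] * b[2]


def _cross(a: Vec3, b: Vec3) -> Vec3:
    return (a[1] * b[2] - a[2] * b[1],
            a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0])


def ray_hits_triangle(origin: Vec3, direction: Vec3, tri: Triangle,
                      eps: float = 1e-9) -> Optional[float]:
    """Moller-Trumbore test: distance along the ray to the hit, or None."""
    v0, v1, v2 = tri
    edge1, edge2 = _sub(v1, v0), _sub(v2, v0)
    p = _cross(direction, edge2)
    det = _dot(edge1, p)
    if abs(det) < eps:  # ray is parallel to the triangle's plane
        return None
    inv_det = 1.0 / det
    s = _sub(origin, v0)
    u = _dot(s, p) * inv_det
    if u < 0.0 or u > 1.0:
        return None
    q = _cross(s, edge1)
    v = _dot(direction, q) * inv_det
    if v < 0.0 or u + v > 1.0:
        return None
    t = _dot(edge2, q) * inv_det
    return t if t > eps else None  # ignore hits behind the ray origin


def surface_height_below(point: Vec3, mesh: Sequence[Triangle]) -> Optional[float]:
    """Cast a ray straight down (-y) and return the height of the closest surface hit."""
    down = (0.0, -1.0, 0.0)
    hits = [t for t in (ray_hits_triangle(point, down, tri) for tri in mesh) if t is not None]
    return point[1] - min(hits) if hits else None


def can_place(leg_points: Sequence[Vec3], mesh: Sequence[Triangle],
              tolerance_m: float = 0.02) -> bool:
    """True if every leg lands on a surface and all those surfaces are level."""
    heights = [surface_height_below(p, mesh) for p in leg_points]
    if any(h is None for h in heights):
        return False  # at least one leg would hang in mid-air
    return max(heights) - min(heights) <= tolerance_m


def chair_legs(x: float, z: float, size: float = 0.5, start_height: float = 1.0):
    """Hypothetical square chair footprint; rays start above the furniture."""
    return [(x, start_height, z), (x + size, start_height, z),
            (x, start_height, z + size), (x + size, start_height, z + size)]


# Hypothetical room: a 2 m x 2 m floor at y = 0 and a 0.45 m couch seat over x in [1.5, 2].
floor = [((0, 0, 0), (2, 0, 0), (0, 0, 2)), ((2, 0, 0), (2, 0, 2), (0, 0, 2))]
couch = [((1.5, 0.45, 0), (2, 0.45, 0), (1.5, 0.45, 2)),
         ((2, 0.45, 0), (2, 0.45, 2), (1.5, 0.45, 2))]
mesh = floor + couch
```

With this mesh, a chair at `chair_legs(0.2, 0.2)` fits on the floor, one at `chair_legs(1.2, 0.2)` is rejected because two legs would rest on the couch, and one at `chair_legs(1.8, 0.2)` is rejected because two legs would hang past the edge of the mapped floor.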

Technologies

Depth sensing is not a new problem in computing. However, the rise of Mixed Reality and Augmented Reality devices has brought it into the limelight. Different vendors refer to this capability by different names. For example, Google calls it ‘Depth Perception’, Apple ARKit calls it ‘Depth Map’ and Microsoft calls it ‘Spatial Mapping’. The underlying technologies used by these devices may differ, but the objective of discovering the depth of the environment around the device remains the same. The following are a few of the underlying technologies these devices use to measure depth:

- Structured infrared light projectors/scanners

- RGB depth cameras

- Time-of-flight cameras

Time of Flight

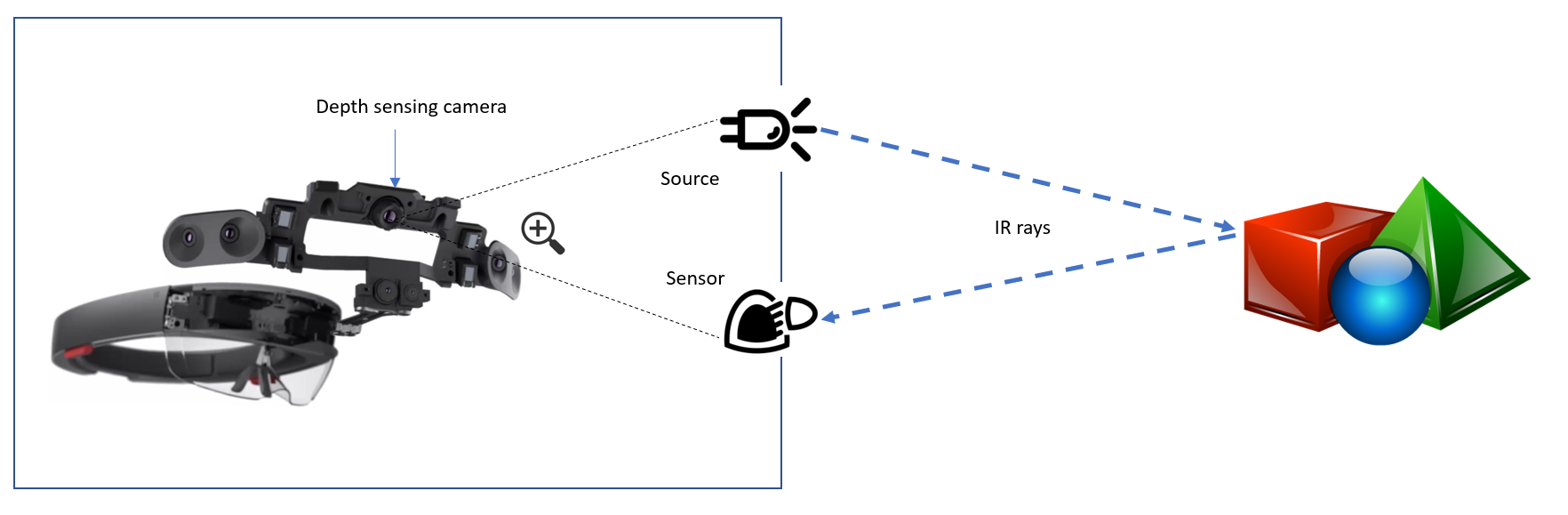

Time of flight technology is specifically of our interest because of its popularity and the fact that it is being used by devices like Microsoft Kinect and HoloLense to measure depth. The technology works on the reflective properties of the objects. It uses the known speed of light to calculate the distance by measuring the time taken for a photon to reflect back to the device sensors. The following diagram illustrates the measurement process:

Experiments suggest that time-of-flight technology works best at distances of approximately 0.5 to 5 metres. The depth camera in HoloLens works well between 0.85 and 3.01 metres.
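The time-of-flight principle comes down to a single formula: because light travels to the surface and back, the distance is the speed of light multiplied by the round-trip time, divided by two. The following minimal sketch shows that arithmetic together with a check against a working range. The function names are hypothetical, and the default range reuses the HoloLens figures quoted above. Real sensors often infer the round-trip time indirectly (for example, from the phase shift of modulated light) rather than timing a single pulse; the sketch only illustrates the conversion.

```python
SPEED_OF_LIGHT_M_PER_S = 299_792_458.0


def distance_from_round_trip(round_trip_s: float) -> float:
    """Distance to the surface: the light covers the path twice, out and back."""
    return SPEED_OF_LIGHT_M_PER_S * round_trip_s / 2.0


def round_trip_for_distance(distance_m: float) -> float:
    """Inverse conversion: how long the sensor waits for the reflection."""
    return 2.0 * distance_m / SPEED_OF_LIGHT_M_PER_S


def in_working_range(distance_m: float, near_m: float = 0.85, far_m: float = 3.01) -> bool:
    """Defaults are the HoloLens depth-camera range quoted in the text."""
    return near_m <= distance_m <= far_m
```

The numbers show why this is demanding hardware: `round_trip_for_distance(1.0)` is only about 6.67 nanoseconds, and a 1 cm change in distance shifts the reflection by roughly 67 picoseconds.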

Spatial Mapping

Spatial Mapping is a feature of Microsoft HoloLens that provides a representation of the real-world surfaces around the device. Application developers can use it to make their applications environment-aware. The feature abstracts away the hardware used to measure depth and exposes easy-to-use APIs to integrate with.

Spatial Mapping in HoloLens

The most convenient way to use Spatial Mapping capabilities on HoloLens is through Unity. To enable Spatial Mapping, you first need to enable the ‘SpatialPerception’ capability for your project (in Unity, under Player Settings > Publishing Settings > Capabilities). Unity offers two built-in components to support Spatial Mapping:

- Spatial Mapping Renderer – This component renders the spatial map as a mesh within the application.

- Spatial Mapping Collider – The collider is responsible for enabling interactions between the holograms and the spatial mesh.

These components can be added to an existing Unity project from the ‘Add Component’ menu (Add Component > AR > Spatial Mapping Collider or Spatial Mapping Renderer).

The following guide describes in detail how to set up Spatial Mapping in your Unity project:

https://developer.microsoft.com/en-us/windows/mixed-reality/spatial_mapping_in_unity

Tips and tricks

The following are a few tips and tricks for optimising Spatial Mapping in your applications.

- Updating spatial maps – Updating a spatial map starts by triggering the collection of mapping data. This operation is very CPU-intensive, so it costs battery life and can starve other processes of CPU time. To optimise update cycles, request collision data only when required. The APIs also let you request collision data for selected surfaces only.

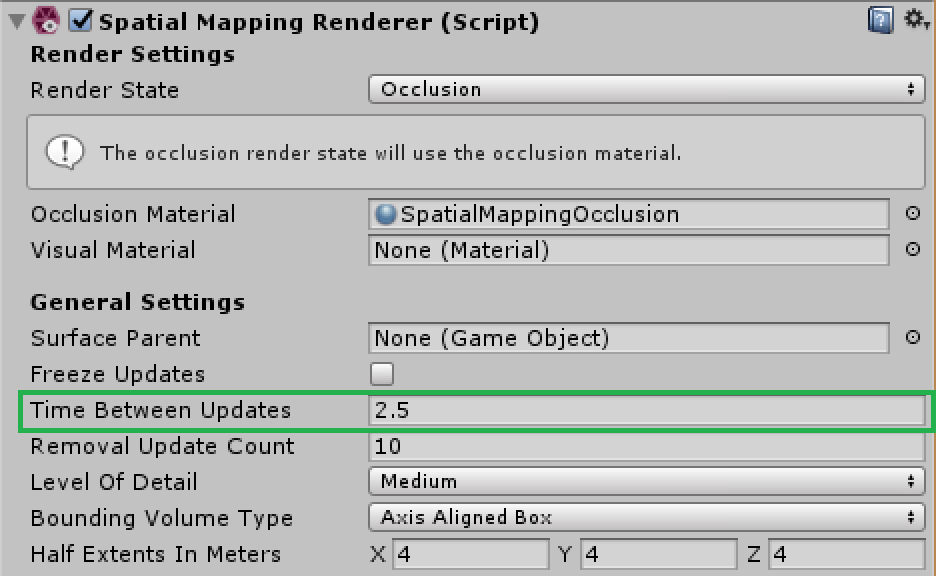

- Configuring refresh intervals – Choose a refresh interval for your spatial maps that keeps the load on the CPU light. You can configure it from Unity’s Inspector window.

- The density of spatial data – Spatial Mapping represents the surfaces it maps as triangle meshes. For an application that does not require high-resolution mapping, it is advisable to generate maps with a lower triangle density to reduce CPU time and the turnaround time of the mapping process.

- Understanding the implementation – Understanding how Spatial Mapping is implemented helps you make low-level optimisations specific to your application. Learning how ‘SurfaceObserver’ and ‘SurfaceData’ are implemented gives good insight into how things work. The Unity documentation covers this in more detail:

https://docs.unity3d.com/Manual/windowsholographic-sm-lowlevelapi.html

- Mixed Reality Toolkit example – A good place to start with Spatial Mapping is the example included in the Mixed Reality Toolkit, accessible through the following link.
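The tips on update frequency, refresh intervals and triangle density can be combined into a small scheduling policy. The following is a minimal sketch, loosely inspired by the way Unity's low-level API reports surfaces as added, updated or removed and lets you request meshes with a chosen triangle density and optional collision data. It does not call Unity: `SpatialUpdateScheduler`, `plan_requests`, `MeshRequest` and `estimated_triangles` are hypothetical names, and the default values are arbitrary examples rather than Unity defaults.

```python
from dataclasses import dataclass, field
from enum import Enum
from typing import Iterable, List, Set, Tuple


class SurfaceChange(Enum):
    # Hypothetical mirror of the three kinds of surface notification.
    ADDED = "added"
    UPDATED = "updated"
    REMOVED = "removed"


@dataclass(frozen=True)
class MeshRequest:
    surface_id: int
    triangles_per_cubic_metre: float  # lower values give coarser, cheaper meshes
    bake_collider: bool               # only pay for collision data when it is needed


@dataclass
class SpatialUpdateScheduler:
    seconds_between_updates: float = 2.5      # arbitrary example value
    triangles_per_cubic_metre: float = 300.0  # arbitrary example value
    needs_collision: bool = True
    known_surfaces: Set[int] = field(default_factory=set, init=False)
    _last_update_s: float = field(default=float("-inf"), init=False)

    def try_begin_update(self, now_s: float) -> bool:
        """Allow a new mapping pass only once the refresh interval has elapsed."""
        if now_s - self._last_update_s >= self.seconds_between_updates:
            self._last_update_s = now_s
            return True
        return False

    def plan_requests(self, changes: Iterable[Tuple[int, SurfaceChange]],
                      surfaces_of_interest: Set[int]) -> List[MeshRequest]:
        """Turn change notifications into mesh requests for selected surfaces only."""
        requests: List[MeshRequest] = []
        for surface_id, change in changes:
            if change is SurfaceChange.REMOVED:
                self.known_surfaces.discard(surface_id)
                continue
            self.known_surfaces.add(surface_id)
            if surface_id in surfaces_of_interest:
                requests.append(MeshRequest(surface_id,
                                            self.triangles_per_cubic_metre,
                                            self.needs_collision))
        return requests


def estimated_triangles(volume_m3: float, triangles_per_cubic_metre: float) -> int:
    """Rough mesh-size estimate, assuming the requested density is met uniformly."""
    return round(volume_m3 * triangles_per_cubic_metre)
```

The design keeps the three decisions separate: `try_begin_update` throttles how often mapping runs, `plan_requests` skips surfaces the application does not care about, and the density setting controls how heavy each mesh is. As a rough illustration of the density tip, a 4 m by 4 m by 3 m room (48 cubic metres) comes to about 24,000 triangles at 500 triangles per cubic metre, versus about 96,000 at 2,000.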

To conclude, in this blog we briefly discussed the depth-mapping problem, its solutions and the technology behind them. We also took a deeper look at the Spatial Mapping feature of HoloLens and how it can be used in a Unity project.